Roy H. Kwon

Generative AI-enhanced Sector-based Investment Portfolio Construction

Dec 31, 2025

Abstract:This paper investigates how Large Language Models (LLMs) from leading providers (OpenAI, Google, Anthropic, DeepSeek, and xAI) can be applied to quantitative sector-based portfolio construction. We use LLMs to identify investable universes of stocks within S&P 500 sector indices and evaluate how their selections perform when combined with classical portfolio optimization methods. Each model was prompted to select and weight 20 stocks per sector, and the resulting portfolios were compared with their respective sector indices across two distinct out-of-sample periods: a stable market phase (January-March 2025) and a volatile phase (April-June 2025). Our results reveal a strong temporal dependence in LLM portfolio performance. During stable market conditions, LLM-weighted portfolios frequently outperformed sector indices on both cumulative return and risk-adjusted (Sharpe ratio) measures. However, during the volatile period, many LLM portfolios underperformed, suggesting that current models may struggle to adapt to regime shifts or high-volatility environments underrepresented in their training data. Importantly, when LLM-based stock selection is combined with traditional optimization techniques, portfolio outcomes improve in both performance and consistency. This study contributes one of the first multi-model, cross-provider evaluations of generative AI algorithms in investment management. It highlights that while LLMs can effectively complement quantitative finance by enhancing stock selection and interpretability, their reliability remains market-dependent. The findings underscore the potential of hybrid AI-quantitative frameworks, integrating LLM reasoning with established optimization techniques, to produce more robust and adaptive investment strategies.
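The two evaluation measures named in the abstract, cumulative return and Sharpe ratio, can be sketched as follows. This is a minimal illustration, not the paper's evaluation code: the daily return series are made up, and the zero risk-free rate and the 252-day annualization factor are assumptions.

```python
import math
import statistics


def cumulative_return(returns):
    """Compound a sequence of simple per-period returns into one total return."""
    growth = 1.0
    for r in returns:
        growth *= 1.0 + r
    return growth - 1.0


def sharpe_ratio(returns, risk_free_per_period=0.0, periods_per_year=252):
    """Annualized Sharpe ratio: mean excess return over its sample std. dev.

    The zero risk-free rate and 252 trading days are assumptions for this sketch.
    """
    excess = [r - risk_free_per_period for r in returns]
    std = statistics.stdev(excess)
    if std == 0:
        raise ValueError("Sharpe ratio is undefined for a constant return series")
    return statistics.fmean(excess) / std * math.sqrt(periods_per_year)


# Hypothetical daily returns for an LLM-weighted portfolio and its sector index.
portfolio_returns = [0.010, -0.005, 0.007, 0.002]
index_returns = [0.008, -0.006, 0.004, 0.001]

print("portfolio cumulative return:", cumulative_return(portfolio_returns))
print("index cumulative return:", cumulative_return(index_returns))
print("portfolio Sharpe ratio:", sharpe_ratio(portfolio_returns))
print("index Sharpe ratio:", sharpe_ratio(index_returns))
```

Comparing both numbers against the index over separate windows (for example, one stable and one volatile period) is what exposes the temporal dependence the abstract describes.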

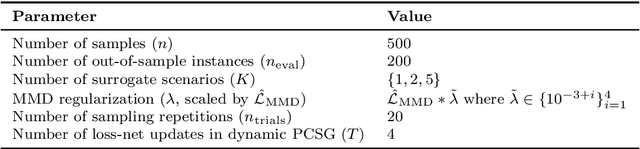

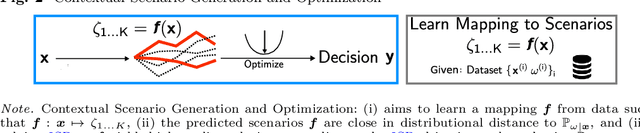

Contextual Scenario Generation for Two-Stage Stochastic Programming

Feb 07, 2025

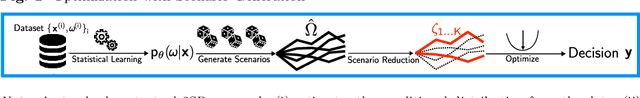

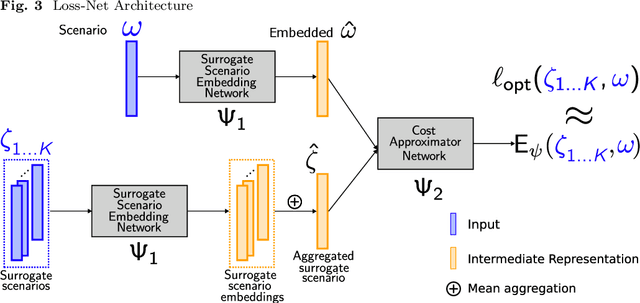

Abstract:Two-stage stochastic programs (2SPs) are important tools for making decisions under uncertainty. Decision-makers use contextual information to generate a set of scenarios to represent the true conditional distribution. However, the number of scenarios required is a barrier to implementing 2SPs, motivating the problem of generating a small set of surrogate scenarios that yield high-quality decisions when they represent uncertainty. Current scenario generation approaches either do not leverage contextual information or do not address computational concerns. In response, we propose contextual scenario generation (CSG) to learn a mapping between the context and a set of surrogate scenarios of user-specified size. First, we propose a distributional approach that learns the mapping by minimizing a distributional distance between the predicted surrogate scenarios and the true contextual distribution. Second, we propose a task-based approach that aims to produce surrogate scenarios that yield high-quality decisions. The task-based approach uses neural architectures to approximate the downstream objective and leverages the approximation to search for the mapping. The proposed approaches apply to various problem structures and, loosely speaking, require only that the associated subproblems and the 2SPs defined on the reduced scenario sets can be solved efficiently. Numerical experiments demonstrating the effectiveness of the proposed methods are presented.
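To make the idea of a "distributional distance" between a small surrogate scenario set and a larger sample concrete, here is a minimal one-dimensional sketch. It only scores candidate surrogate sets; it does not learn the context-to-scenario mapping. The choice of the 1-Wasserstein distance, the equal scenario weights, and all numbers are assumptions for illustration, since the abstract does not specify them.

```python
from bisect import bisect_right


def wasserstein_1d(xs, ys):
    """1-Wasserstein distance between two equally weighted 1-D samples.

    Computed as the integral of |F_x - F_y| over the real line, where F_x and
    F_y are the empirical CDFs. The samples may have different sizes.
    """
    xs, ys = sorted(xs), sorted(ys)
    grid = sorted(set(xs) | set(ys))
    total = 0.0
    for left, right in zip(grid, grid[1:]):
        cdf_x = bisect_right(xs, left) / len(xs)
        cdf_y = bisect_right(ys, left) / len(ys)
        total += abs(cdf_x - cdf_y) * (right - left)
    return total


# Hypothetical demand outcomes observed for one context.
observed = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

# Two hypothetical surrogate sets of user-specified size 3.
spread_out = [1.5, 3.5, 5.5]
clustered = [3.0, 3.5, 4.0]

print("spread-out surrogate distance:", wasserstein_1d(observed, spread_out))
print("clustered surrogate distance:", wasserstein_1d(observed, clustered))
```

The spread-out set sits closer to the observed sample, so a distributional objective of this kind would prefer it. A task-based objective could rank the two sets differently, because it scores scenarios by the decisions they induce rather than by distributional fit.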

ChatGPT-based Investment Portfolio Selection

Aug 11, 2023

Abstract:In this paper, we explore potential uses of generative AI models, such as ChatGPT, for investment portfolio selection. Trusting investment advice from Generative Pre-Trained Transformer (GPT) models is a challenge due to model "hallucinations", necessitating careful verification and validation of the output. Therefore, we take an alternative approach. We use ChatGPT to obtain a universe of stocks from the S&P 500 market index that are potentially attractive for investing. We then compared various portfolio optimization strategies that used this AI-generated trading universe, evaluating them against quantitative portfolio optimization models and against some popular investment funds. Our findings indicate that ChatGPT is effective in stock selection but may not perform as well in assigning optimal weights to stocks within the portfolio. However, when stock selection by ChatGPT is combined with established portfolio optimization models, we achieve even better results. By blending the strengths of AI-generated stock selection with advanced quantitative optimization techniques, we observed the potential for more robust and favorable investment outcomes, suggesting a hybrid approach for more effective and reliable investment decision-making in the future.
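The hybrid idea, taking the stock universe from the language model and the weights from an optimizer, can be sketched as below. This is an illustration under strong assumptions and not the paper's method: the tickers and returns are hypothetical, and the optimizer is the closed-form minimum-variance solution for uncorrelated assets (inverse-variance weighting), chosen because it needs no solver.

```python
import statistics


def inverse_variance_weights(returns_by_ticker):
    """Long-only minimum-variance weights assuming uncorrelated assets.

    With a diagonal covariance matrix, the minimum-variance portfolio weights
    each asset in proportion to 1 / variance. The diagonal covariance is a
    simplifying assumption of this sketch.
    """
    inverse = {
        ticker: 1.0 / statistics.variance(series)
        for ticker, series in returns_by_ticker.items()
    }
    total = sum(inverse.values())
    return {ticker: value / total for ticker, value in inverse.items()}


# Hypothetical universe, standing in for tickers returned by a language model.
llm_universe = {
    "AAA": [0.01, -0.01, 0.01, -0.01],
    "BBB": [0.02, -0.02, 0.02, -0.02],
}

weights = inverse_variance_weights(llm_universe)
print(weights)
```

In this toy universe "BBB" has four times the return variance of "AAA", so it receives one quarter of the weight that "AAA" does. A fuller treatment would estimate the whole covariance matrix and solve a mean-variance or similar program over the selected universe.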

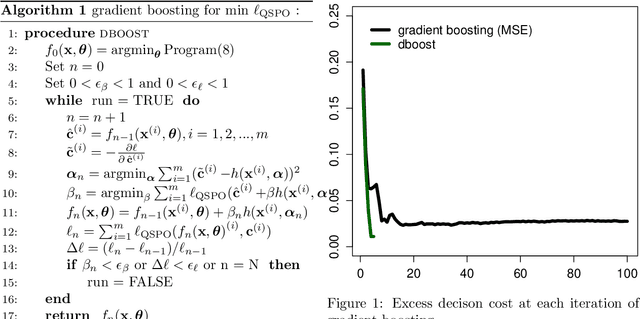

Gradient boosting for convex cone predict and optimize problems

Apr 14, 2022

Abstract:Many problems in engineering and statistics involve both predictive forecasting and decision-based optimization. Traditionally, predictive models are optimized independently from the final decision-based optimization problem. In contrast, a "smart, predict then optimize" (SPO) framework optimizes prediction models to explicitly minimize the final downstream decision loss. In this paper we present dboost, a gradient boosting algorithm for training prediction model ensembles to minimize decision regret. The dboost framework supports any convex optimization program that can be cast as a convex quadratic cone program, and gradient boosting is performed by implicit differentiation of a custom fixed-point mapping. To our knowledge, the dboost framework is the first general-purpose implementation of gradient boosting for predict-and-optimize problems. Experimental results comparing with state-of-the-art SPO methods show that dboost can further reduce out-of-sample decision regret.
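Decision regret, the quantity that predict-and-optimize training targets, can be illustrated on a toy problem. This sketch is not dboost and involves no gradient boosting or cone programming: the downstream problem is assumed to be "pick the single cheapest option", and the cost vectors are made up.

```python
def argmin_decision(costs):
    """Solve the toy downstream problem: choose the index with the lowest cost."""
    return min(range(len(costs)), key=lambda i: costs[i])


def decision_regret(predicted_costs, true_costs):
    """Extra true cost incurred by optimizing against predictions.

    Regret = true cost of the decision made under the predicted costs
             minus the true cost of the best decision in hindsight.
    """
    chosen = argmin_decision(predicted_costs)
    best = argmin_decision(true_costs)
    return true_costs[chosen] - true_costs[best]


true_costs = [1.0, 2.0, 3.0]

# Prediction A is numerically far off but preserves the ranking of options.
prediction_a = [10.0, 20.0, 30.0]
# Prediction B is numerically closer but ranks the options incorrectly.
prediction_b = [3.0, 1.0, 2.0]

print("regret of prediction A:", decision_regret(prediction_a, true_costs))
print("regret of prediction B:", decision_regret(prediction_b, true_costs))
```

Prediction A has a much larger squared error than prediction B, yet it yields zero regret because it leads to the right decision. That gap between prediction accuracy and decision quality is the motivation for training on regret directly.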
