Rollin Omari

Bob

Adversarial Robustness on Image Classification with $k$-means

Dec 15, 2023

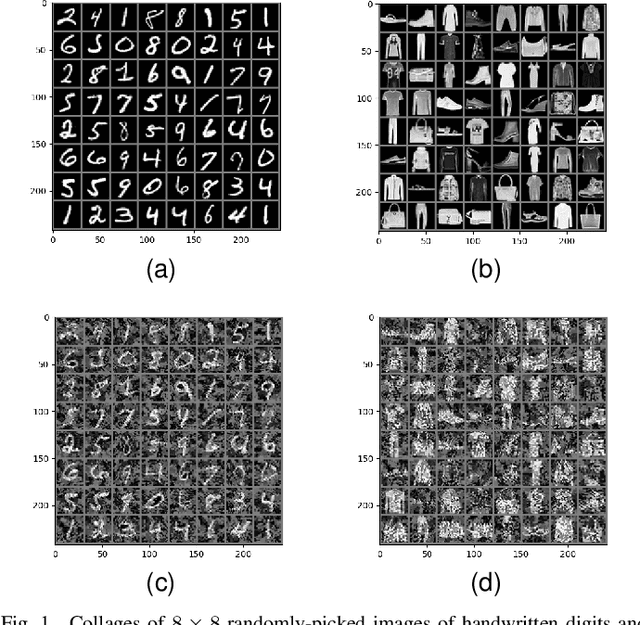

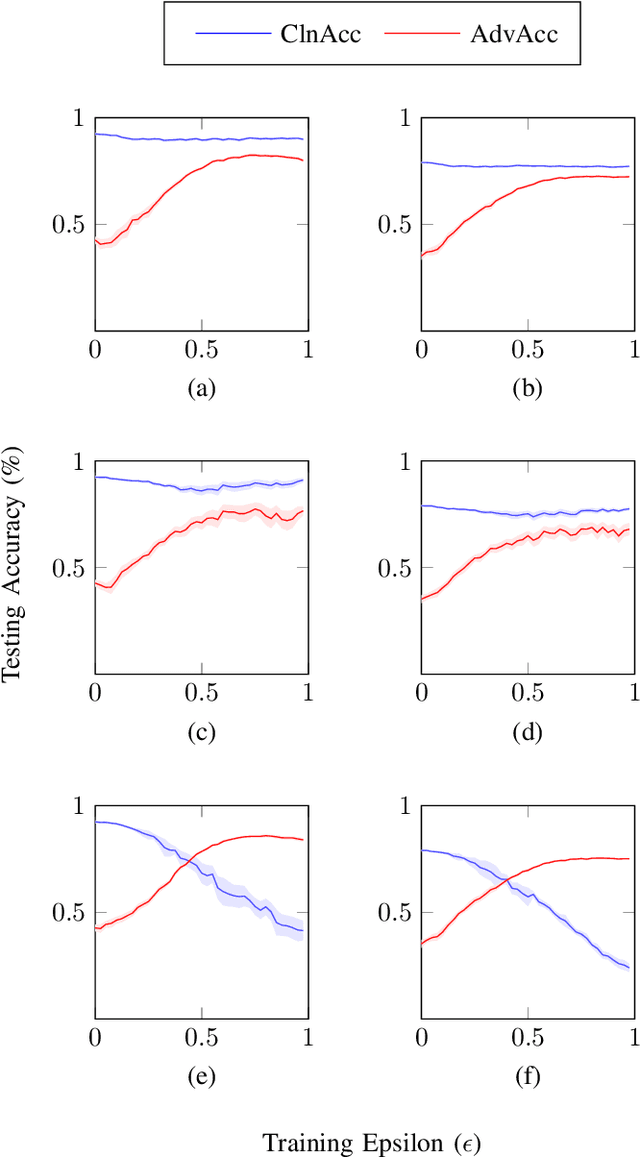

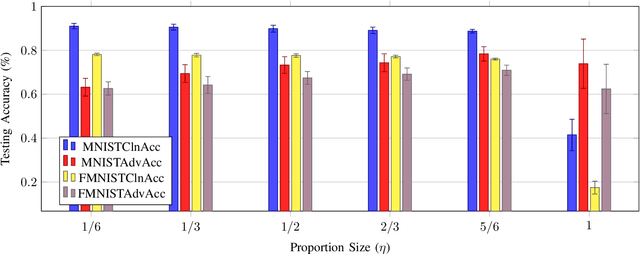

Abstract: In this paper, we explore the challenges and strategies for enhancing the robustness of $k$-means clustering algorithms against adversarial manipulations. We evaluate the vulnerability of clustering algorithms to adversarial attacks, emphasising the associated security risks. Our study investigates the impact of incremental attack strength on training, introduces the concept of transferability between supervised and unsupervised models, and highlights the sensitivity of unsupervised models to sample distributions. We additionally introduce and evaluate an adversarial training method that improves test performance in adversarial scenarios, and we highlight the importance of several parameters in the proposed training method, such as continuous learning, centroid initialisation, and adversarial step-count.
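The abstract does not spell out the attack or the training procedure, so the following is only a rough, hypothetical illustration of the general idea and not the paper's method. It assumes an iterative sign-gradient (PGD-style) attack that pushes a point towards its nearest rival centroid within an $\epsilon$-box, and an adversarial training loop that refits $k$-means on clean plus perturbed points while warm-starting from the current centroids. The names `pgd_attack` and `adversarial_kmeans` and the parameters `eps`, `steps` (a stand-in for adversarial step-count), `init_centroids` (centroid initialisation) and `rounds` are all assumptions made for this sketch.

```python
def dist2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(x, centroids):
    """Index of the centroid closest to x (ties go to the lower index)."""
    return min(range(len(centroids)), key=lambda j: dist2(x, centroids[j]))


def lloyd(points, centroids, iters):
    """Plain k-means (Lloyd) iterations, warm-started from the given centroids."""
    cents = [list(c) for c in centroids]
    for _ in range(iters):
        groups = [[] for _ in cents]
        for p in points:
            groups[nearest(p, cents)].append(p)
        for j, g in enumerate(groups):
            if g:  # an empty cluster keeps its previous centroid
                cents[j] = [sum(col) / len(g) for col in zip(*g)]
    return cents


def pgd_attack(x, centroids, eps, steps):
    """Hypothetical L-infinity attack on a k-means assignment (needs >= 2 centroids).

    Each step moves the point by eps/steps along the sign of the gradient of
    ||x' - c_own||^2 - ||x' - c_rival||^2, which is 2 * (c_rival - c_own),
    i.e. towards the nearest rival centroid, then clips back into the
    eps-box around the original x.
    """
    own = nearest(x, centroids)
    alpha = eps / steps
    adv = list(x)
    for _ in range(steps):
        rival = min((j for j in range(len(centroids)) if j != own),
                    key=lambda j: dist2(adv, centroids[j]))
        grad = [2 * (r - o) for r, o in zip(centroids[rival], centroids[own])]
        adv = [min(max(a + alpha * ((g > 0) - (g < 0)), xi - eps), xi + eps)
               for a, g, xi in zip(adv, grad, x)]
    return adv


def adversarial_kmeans(points, init_centroids, eps, steps, rounds=3, iters=5):
    """Hypothetical adversarial training loop for k-means.

    Each round perturbs every training point against the current centroids,
    then refits on clean + perturbed points, warm-starting from the current
    centroids so that learning continues across rounds.
    """
    cents = [list(c) for c in init_centroids]
    for _ in range(rounds):
        adv = [pgd_attack(p, cents, eps, steps) for p in points]
        cents = lloyd(points + adv, cents, iters)
    return cents


if __name__ == "__main__":
    c = [[0.0, 0.0], [4.0, 0.0]]
    print(nearest(pgd_attack([1.5, 0.0], c, eps=1.0, steps=4), c))   # → 1 (assignment flips)
    print(nearest(pgd_attack([1.5, 0.0], c, eps=0.25, steps=4), c))  # → 0 (too weak to flip)
```

With `steps=1` the attack reduces to a single FGSM-style step. Mixing clean and perturbed points in each refit is a design choice of this sketch; the abstract does not say how the proposed method combines them.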

Analogical and Relational Reasoning with Spiking Neural Networks

Oct 14, 2020

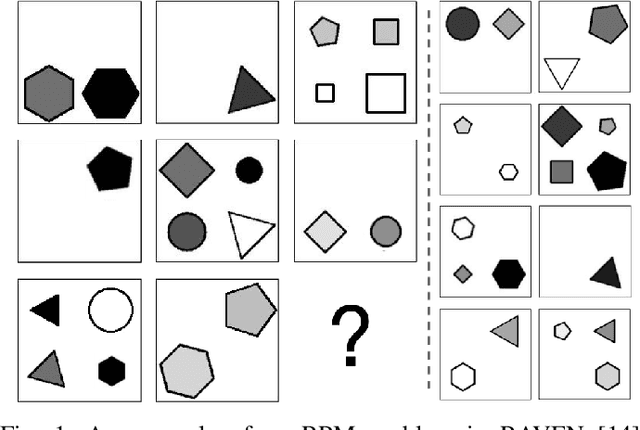

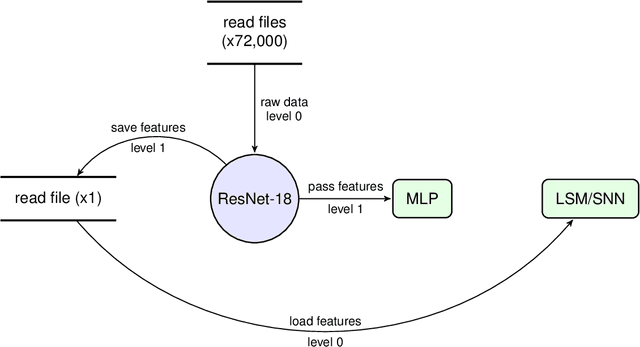

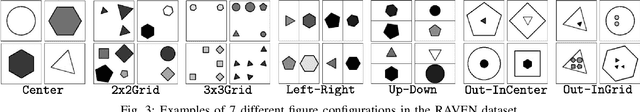

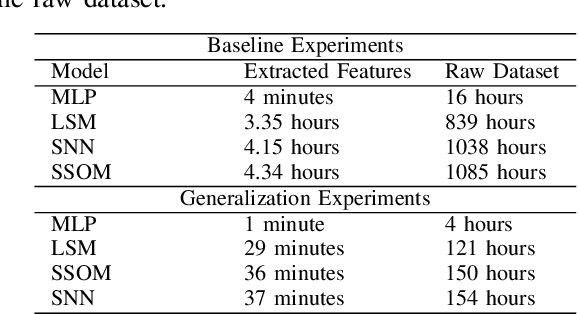

Abstract: Raven's Progressive Matrices have been widely used to measure abstract reasoning and intelligence in humans. However, for artificial learning systems, abstract reasoning remains a challenging problem. In this paper, we investigate how neural networks augmented with biologically inspired spiking modules gain a significant advantage in solving this problem. To illustrate this, we first evaluate our networks with supervised learning, then with unsupervised learning. Experiments on the RAVEN dataset show that the overall accuracy of our supervised networks surpasses human-level performance, while our unsupervised networks significantly outperform existing unsupervised methods. Finally, our results from both supervised and unsupervised learning show that, unlike their non-augmented counterparts, networks with spiking modules are able to extract and encode temporal features without any explicit instruction, do not rely heavily on training data, and generalise more readily to new problems. In summary, the results reported here indicate that artificial neural networks with spiking modules are well suited to solving abstract reasoning problems.
