Roland Perko

A real-time algorithm for human action recognition in RGB and thermal video

Apr 04, 2023

Abstract: Monitoring the movement and actions of humans in video in real time is an important task. We present a deep-learning-based algorithm for human action recognition for both RGB and thermal cameras. It is able to detect and track humans and recognize four basic actions (standing, walking, running, lying) in real time on a notebook with an NVIDIA GPU. For this, it combines state-of-the-art components for object detection (Scaled YoloV4), optical flow (RAFT) and pose estimation (EvoSkeleton). Qualitative experiments on a set of tunnel videos show that the proposed algorithm works robustly for both RGB and thermal video.
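
The abstract does not say how the detector, optical flow and pose outputs are fused into one of the four action labels. The sketch below is a hypothetical rule-based illustration of that final step only, not the authors' method: it assumes each tracked person already has a ground-plane speed (for example derived from tracking and optical flow) and 3D hip and neck keypoints (for example from a pose estimator). The names `TrackState`, `torso_angle_deg`, `classify_action`, the "up" axis and all thresholds are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackState:
    """Hypothetical per-track features for one tracked person."""
    speed_mps: float        # assumed ground-plane speed in metres per second
    torso_angle_deg: float  # assumed angle of the hip-to-neck vector from vertical


def torso_angle_deg(hip, neck, up=(0.0, 0.0, 1.0)):
    """Angle in degrees between the hip-to-neck vector and an assumed unit 'up' axis."""
    v = [n - h for n, h in zip(neck, hip)]
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        return 0.0  # degenerate skeleton: treat as upright
    cos_angle = sum(a * b for a, b in zip(v, up)) / norm
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos_angle))


def classify_action(state, walk_thresh=0.5, run_thresh=2.5, lying_angle=60.0):
    """Map features to one of the four actions using hypothetical thresholds."""
    if state.torso_angle_deg >= lying_angle:
        return "lying"  # torso far from vertical
    if state.speed_mps >= run_thresh:
        return "running"
    if state.speed_mps >= walk_thresh:
        return "walking"
    return "standing"


if __name__ == "__main__":
    upright = torso_angle_deg(hip=(0.0, 0.0, 0.9), neck=(0.0, 0.0, 1.5))
    print(classify_action(TrackState(speed_mps=1.4, torso_angle_deg=upright)))
```

Checking posture before speed means a person lying on the ground is not mislabelled as moving because of tracker jitter; in practice such per-frame labels would likely also need temporal smoothing.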

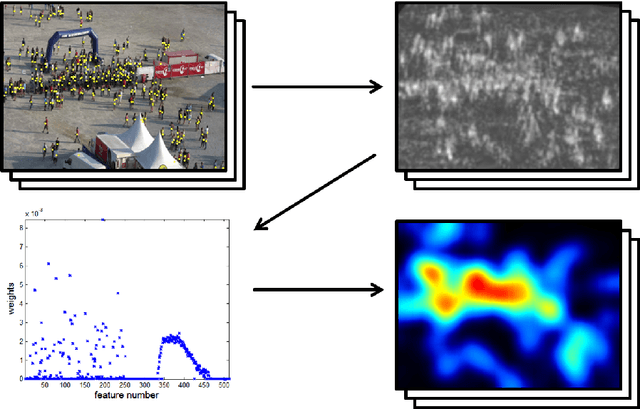

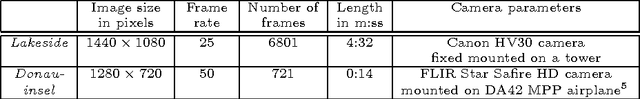

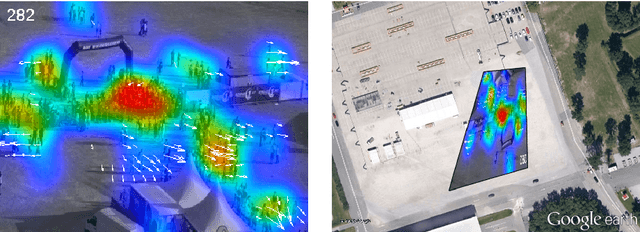

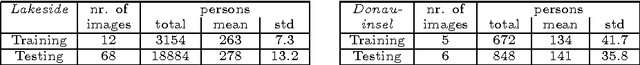

Counting people from above: Airborne video based crowd analysis

Apr 23, 2013

Abstract: Crowd monitoring and analysis at mass events are highly important technologies for supporting the security of attendees. Proposed methods based on terrestrial or airborne image/video data often fail to achieve results accurate enough to guarantee a robust service. We present a novel framework for estimating human count, density and motion from video data, based on custom-tailored object detection techniques, a regression-based density estimate and a total-variation-based optical flow extraction. For the gathered features, we present a detailed accuracy analysis against ground-truth measurements. In addition, all information is projected into world coordinates to enable direct integration with existing geo-information systems. The resulting human counts show a mean error of 4% to 9% and thus represent an efficient measure that can be robustly applied in security-critical services.
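
The abstract reports a mean counting error of 4% to 9% without defining the metric. As a minimal sketch, assuming "mean error" means the mean absolute relative error between estimated and ground-truth counts over a set of frames, the computation could look like this. The function name `mean_relative_error` and the sample counts are hypothetical and are not data from the paper.

```python
def mean_relative_error(estimated, ground_truth):
    """Mean of |estimate - truth| / truth over paired counts (assumed metric definition)."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("need two non-empty sequences of equal length")
    errors = []
    for est, gt in zip(estimated, ground_truth):
        if gt <= 0:
            raise ValueError("ground-truth counts must be positive")
        errors.append(abs(est - gt) / gt)
    return sum(errors) / len(errors)


if __name__ == "__main__":
    # Hypothetical per-frame counts, for illustration only.
    estimated = [95, 210, 48]
    ground_truth = [100, 200, 50]
    print(f"{mean_relative_error(estimated, ground_truth):.1%}")  # roughly 4.7%
```

A relative error weights each frame equally regardless of crowd size, so a miss of 10 people in a crowd of 200 counts the same as a miss of 5 in a crowd of 100.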