Rogério de Paula

Making Sense of Knowledge Intensive Processes: an Oil & Gas Industry Scenario

Jan 31, 2024

Abstract: Sensemaking is a constant and ongoing process by which people assign meaning to experiences. It can be an individual process, known as abduction, or a group process by which people give meaning to collective experiences. The sensemaking of a group is influenced by each person's abduction process about the experience. Every collaborative process needs some level of sensemaking to show results. For a knowledge-intensive process, sensemaking is central and related to most of its tasks. We present findings from fieldwork conducted on a knowledge-intensive process in the Oil and Gas industry. Our findings indicate that different types of knowledge can be combined to compose the result of a sensemaking process (e.g., a decision, the need for more discussion, etc.). This paper presents an initial set of knowledge types that can be combined to compose the result of the sensemaking of a collaborative decision-making process. We also discuss ideas for using systems powered by Artificial Intelligence to support sensemaking processes.

Towards a New Science of Disinformation

Mar 17, 2022

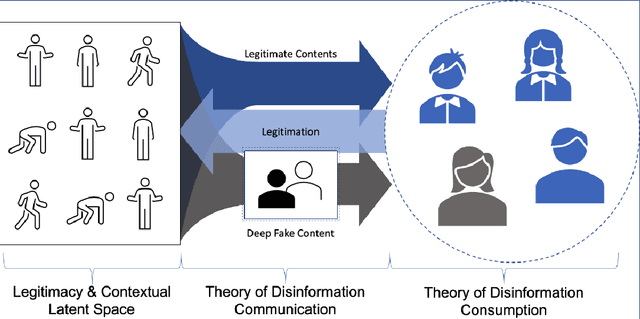

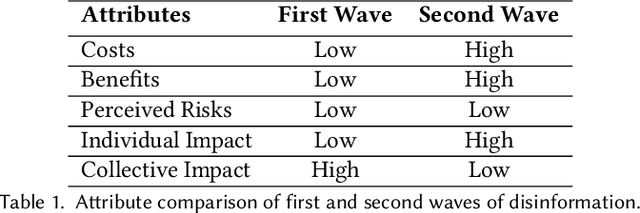

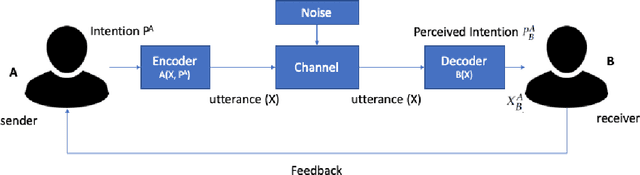

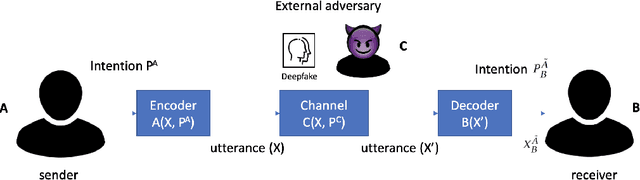

Abstract: How can we best address the dangerous impact that deep learning-generated fake audio, photographs, and videos (a.k.a. deepfakes) may have on personal and societal life? We foresee that the availability of cheap deepfake technology will create a second wave of disinformation in which people receive specific, personalized disinformation through different channels, making the current approaches to fighting disinformation obsolete. We argue that fake media has to be seen as an upcoming cybersecurity problem, and that we have to shift from combating its spread to a prevention and cure framework in which users have ways available to verify, challenge, and argue against the veracity of each piece of media they are exposed to. To create the technologies behind this framework, we propose that a new Science of Disinformation is needed, one which creates a theoretical framework for both the communication and the consumption of false content. Key scientific and technological challenges facing this research agenda are listed and discussed in the light of state-of-the-art technologies for fake media generation and detection, argument finding and construction, and ways to effectively engage users in the prevention and cure processes.
