Robert Vogel

Unsupervised Evaluation and Weighted Aggregation of Ranked Predictions

Feb 13, 2018

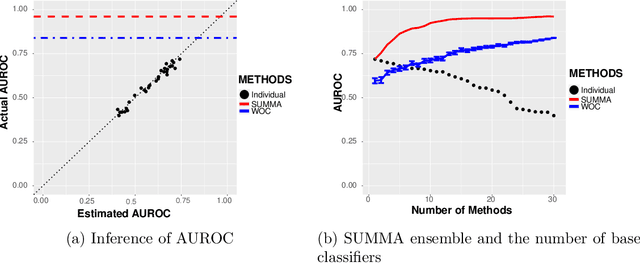

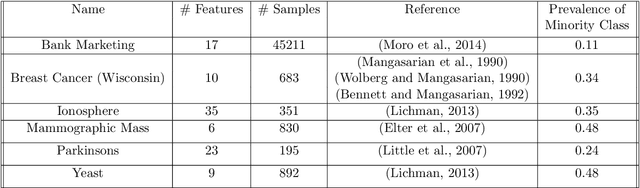

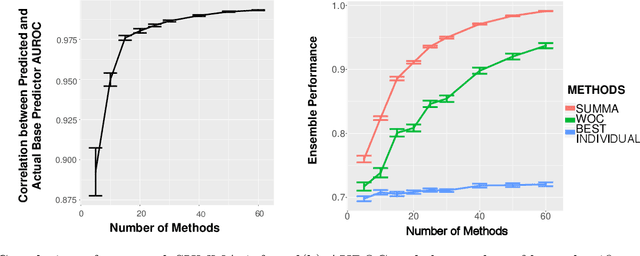

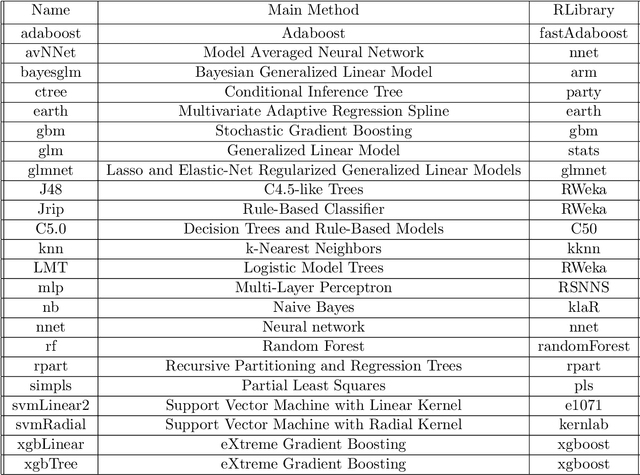

Abstract: Learning algorithms that aggregate predictions from an ensemble of diverse base classifiers consistently outperform individual methods. Many of these strategies have been developed in a supervised setting, where the accuracy of each base classifier can be measured empirically and incorporated into the training process. However, the reliance on labeled data precludes the application of ensemble methods to many real-world problems where labeled data have not been curated. To this end, we developed a new theoretical framework for binary classification, the Strategy for Unsupervised Multiple Method Aggregation (SUMMA), which estimates the performance of each base classifier and an optimal strategy for ensemble learning from unlabeled data.
