Robert C. Gray

Improving Fairness in Adaptive Social Exergames via Shapley Bandits

Feb 21, 2023Abstract:Algorithmic fairness is an essential requirement as AI becomes integrated in society. In the case of social applications where AI distributes resources, algorithms often must make decisions that will benefit a subset of users, sometimes repeatedly or exclusively, while attempting to maximize specific outcomes. How should we design such systems to serve users more fairly? This paper explores this question in the case where a group of users works toward a shared goal in a social exergame called Step Heroes. We identify adverse outcomes in traditional multi-armed bandits (MABs) and formalize the Greedy Bandit Problem. We then propose a solution based on a new type of fairness-aware multi-armed bandit, Shapley Bandits. It uses the Shapley Value for increasing overall player participation and intervention adherence rather than the maximization of total group output, which is traditionally achieved by favoring only high-performing participants. We evaluate our approach via a user study (n=46). Our results indicate that our Shapley Bandits effectively mediates the Greedy Bandit Problem and achieves better user retention and motivation across the participants.

Personalization Paradox in Behavior Change Apps: Lessons from a Social Comparison-Based Personalized App for Physical Activity

Feb 11, 2021

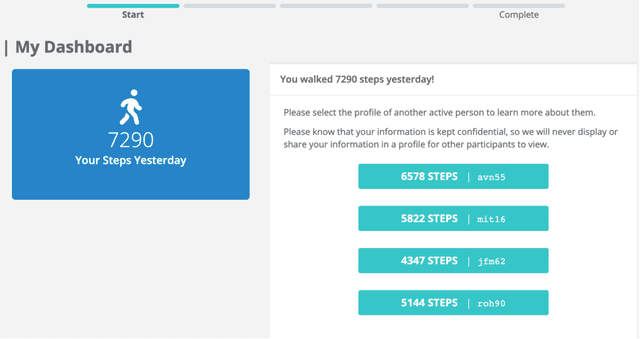

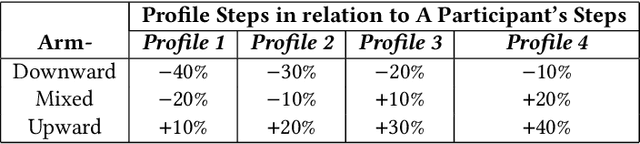

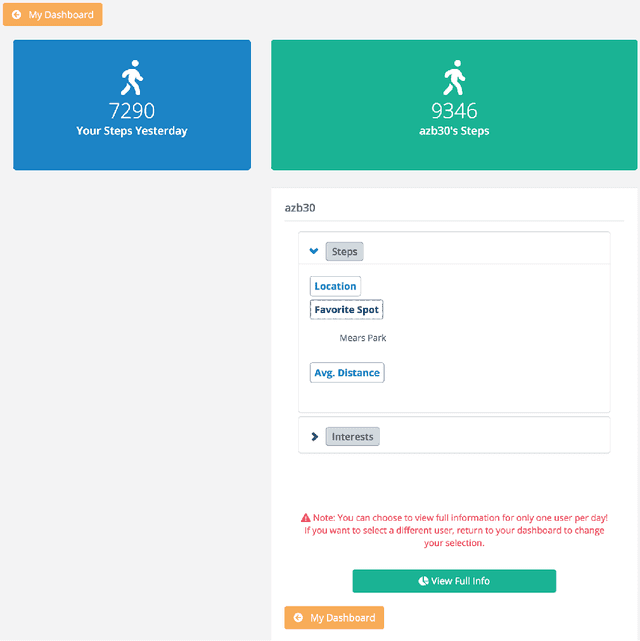

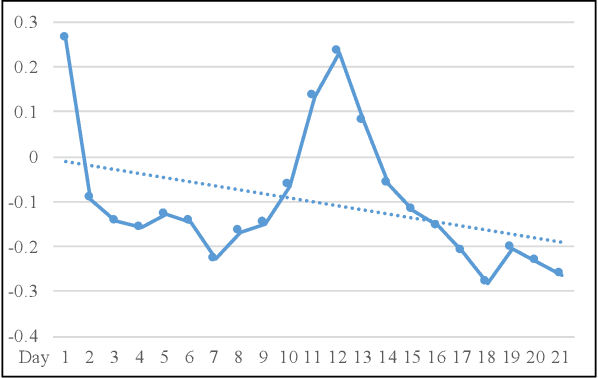

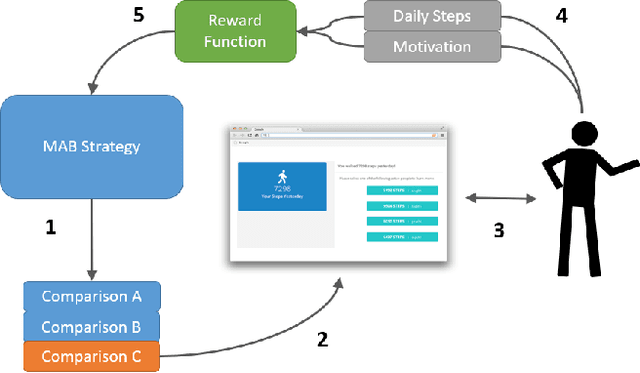

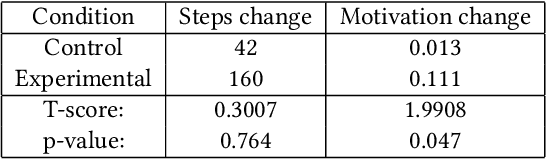

Abstract:Social comparison-based features are widely used in social computing apps. However, most existing apps are not grounded in social comparison theories and do not consider individual differences in social comparison preferences and reactions. This paper is among the first to automatically personalize social comparison targets. In the context of an m-health app for physical activity, we use artificial intelligence (AI) techniques of multi-armed bandits. Results from our user study (n=53) indicate that there is some evidence that motivation can be increased using the AI-based personalization of social comparison. The detected effects achieved small-to-moderate effect sizes, illustrating the real-world implications of the intervention for enhancing motivation and physical activity. In addition to design implications for social comparison features in social apps, this paper identified the personalization paradox, the conflict between user modeling and adaptation, as a key design challenge of personalized applications for behavior change. Additionally, we propose research directions to mitigate this Personalization Paradox.

Player Modeling via Multi-Armed Bandits

Feb 10, 2021

Abstract:This paper focuses on building personalized player models solely from player behavior in the context of adaptive games. We present two main contributions: The first is a novel approach to player modeling based on multi-armed bandits (MABs). This approach addresses, at the same time and in a principled way, both the problem of collecting data to model the characteristics of interest for the current player and the problem of adapting the interactive experience based on this model. Second, we present an approach to evaluating and fine-tuning these algorithms prior to generating data in a user study. This is an important problem, because conducting user studies is an expensive and labor-intensive process; therefore, an ability to evaluate the algorithms beforehand can save a significant amount of resources. We evaluate our approach in the context of modeling players' social comparison orientation (SCO) and present empirical results from both simulations and real players.

Regression Oracles and Exploration Strategies for Short-Horizon Multi-Armed Bandits

Feb 10, 2021

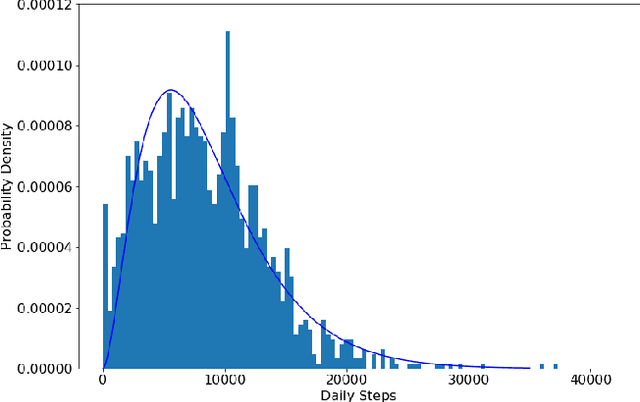

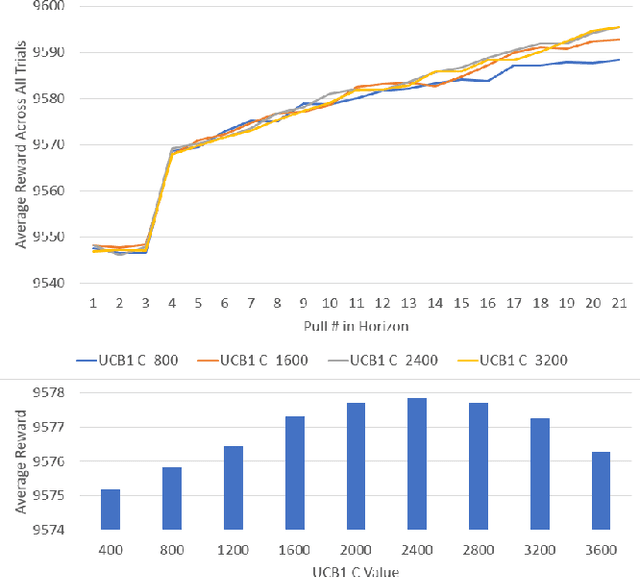

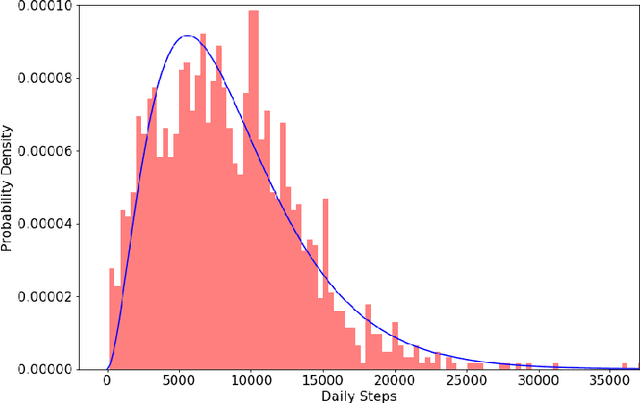

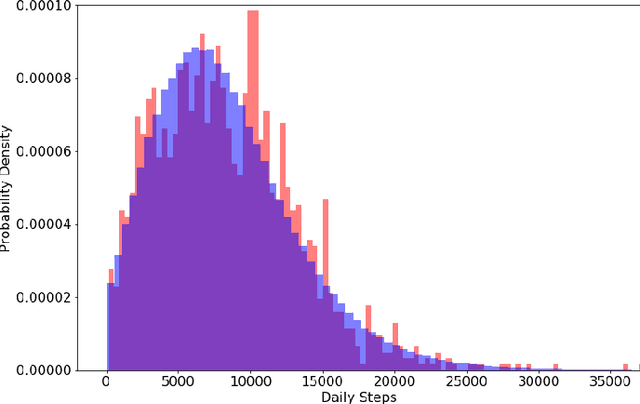

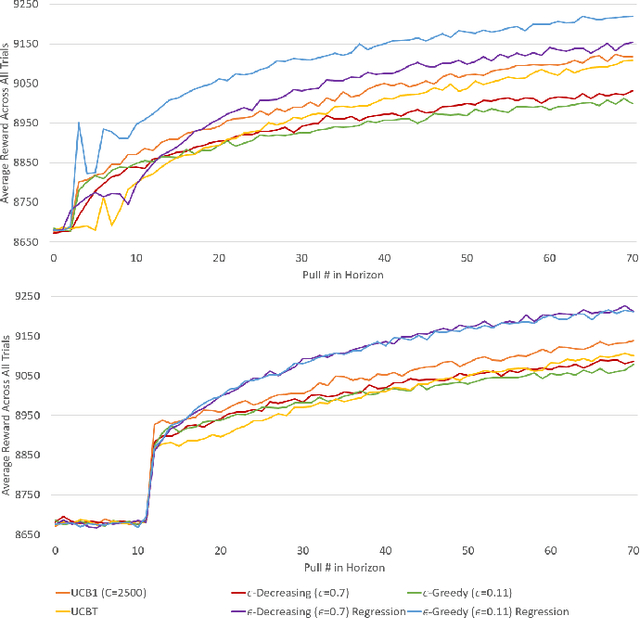

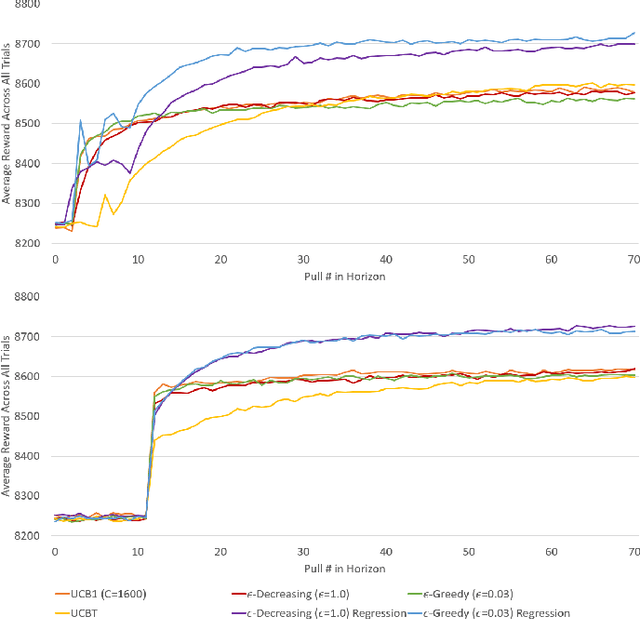

Abstract:This paper explores multi-armed bandit (MAB) strategies in very short horizon scenarios, i.e., when the bandit strategy is only allowed very few interactions with the environment. This is an understudied setting in the MAB literature with many applications in the context of games, such as player modeling. Specifically, we pursue three different ideas. First, we explore the use of regression oracles, which replace the simple average used in strategies such as epsilon-greedy with linear regression models. Second, we examine different exploration patterns such as forced exploration phases. Finally, we introduce a new variant of the UCB1 strategy called UCBT that has interesting properties and no tunable parameters. We present experimental results in a domain motivated by exergames, where the goal is to maximize a player's daily steps. Our results show that the combination of epsilon-greedy or epsilon-decreasing with regression oracles outperforms all other tested strategies in the short horizon setting.

* 8 pages

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge