Robert Bain

A Rapid Adapting and Continual Learning Spiking Neural Network Path Planning Algorithm for Mobile Robots

Apr 23, 2024

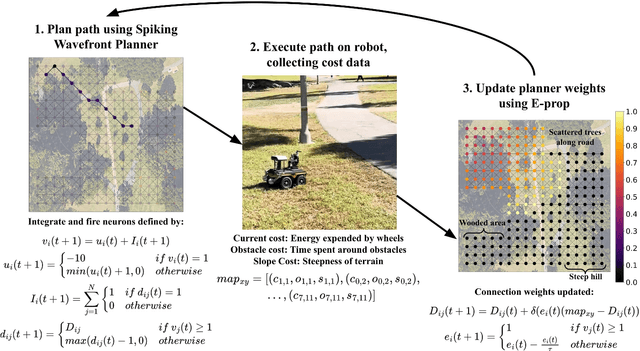

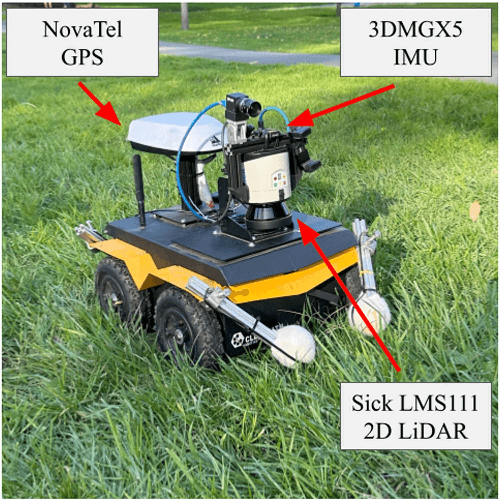

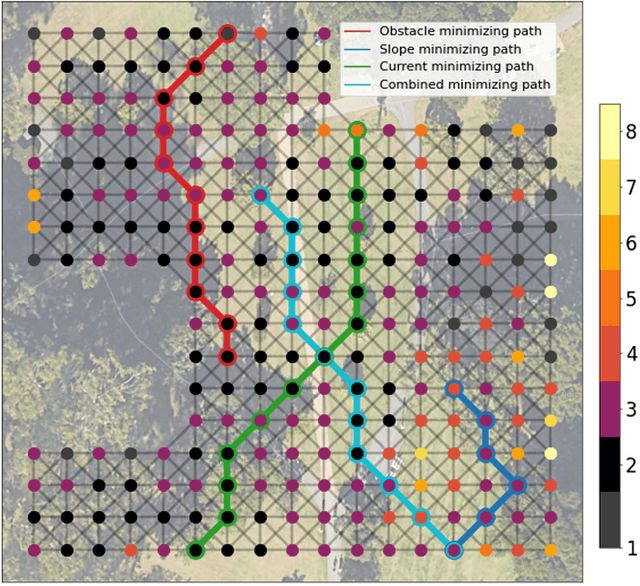

Abstract: Mapping traversal costs in an environment and planning paths based on this map are important for autonomous navigation. We present a neurobotic navigation system that utilizes a Spiking Neural Network Wavefront Planner and E-prop learning to concurrently map and plan paths in a large and complex environment. We incorporate a novel method for mapping which, when combined with the Spiking Wavefront Planner, allows for adaptive planning by selectively considering any combination of costs. The system is tested on a mobile robot platform in an outdoor environment with obstacles and varying terrain. Results indicate that the system is capable of discerning features in the environment using three measures of cost: (1) energy expenditure by the wheels, (2) time spent in the presence of obstacles, and (3) terrain slope. In just twelve hours of online training, E-prop learns and incorporates traversal costs into the path planning maps by updating the delays in the Spiking Wavefront Planner. On simulated paths, the Spiking Wavefront Planner plans significantly shorter and lower-cost paths than A* and RRT*. The Spiking Wavefront Planner is compatible with neuromorphic hardware and could be used for applications requiring low size, weight, and power.
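The idea of storing traversal cost as a propagation delay can be illustrated with a non-spiking analogue. The sketch below is not the authors' planner and does not model spiking neurons or E-prop; it assumes a 4-connected grid in which each cell "fires" `delay[r][c]` time steps after the wave first reaches it, then recovers a path by following predecessor links back from the goal. The names `wavefront_plan` and `delay` are hypothetical.

```python
import heapq

def wavefront_plan(delay, start, goal):
    """Propagate a wave from start over a grid of per-cell delays.

    Assumption for illustration: entering cell (r, c) costs delay[r][c]
    time steps, so the first arrival time at a cell is its lowest path cost.
    """
    rows, cols = len(delay), len(delay[0])
    fire_time = {start: 0}   # earliest time each cell fires
    parent = {}              # cell that triggered the earliest firing
    queue = [(0, start)]
    while queue:
        t, cell = heapq.heappop(queue)
        if t > fire_time[cell]:
            continue         # stale entry, the cell already fired earlier
        if cell == goal:
            break
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                nt = t + delay[nr][nc]
                if nt < fire_time.get((nr, nc), float("inf")):
                    fire_time[(nr, nc)] = nt
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (nt, (nr, nc)))
    if goal not in fire_time:
        return None, float("inf")
    # Walk predecessor links from the goal back to the start.
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    path.reverse()
    return path, fire_time[goal]

# A costly centre cell (delay 9) is avoided in favour of the border cells.
grid = [[1, 1, 1],
        [1, 9, 1],
        [1, 1, 1]]
path, cost = wavefront_plan(grid, (0, 0), (2, 2))
```

In this analogue, raising a cell's delay plays the role the abstract assigns to learned delays: the wave arrives later through costly cells, so the recovered path routes around them.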

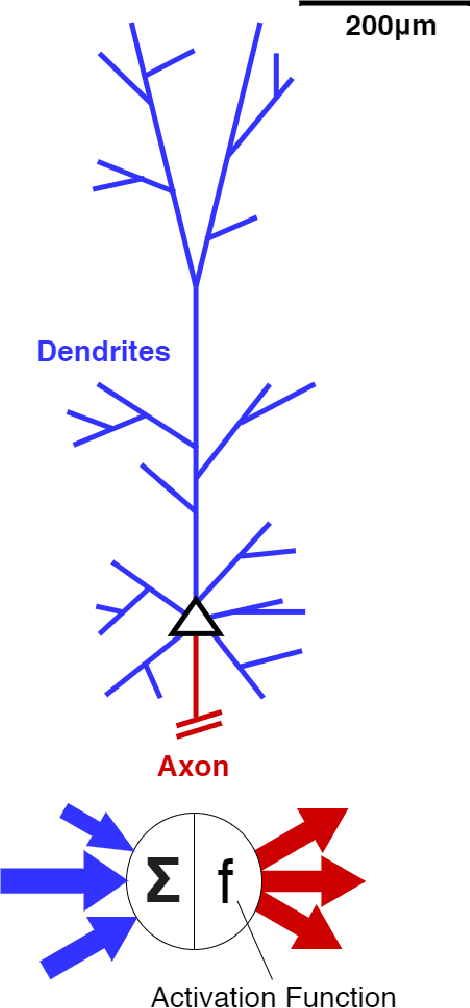

The Role Of Biology In Deep Learning

Sep 07, 2022

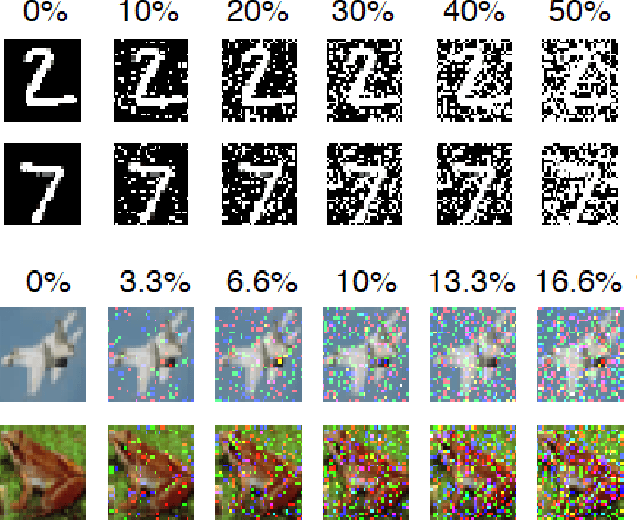

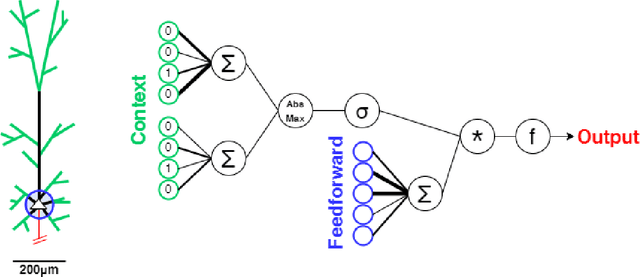

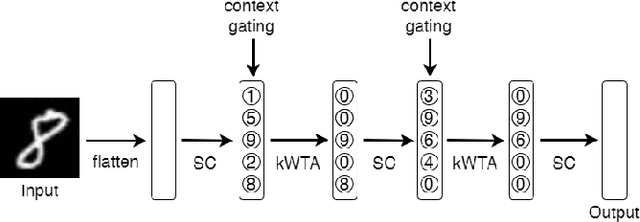

Abstract: Artificial neural networks took a lot of inspiration from their biological counterparts in becoming our best machine perceptual systems. This work summarizes some of that history and incorporates modern theoretical neuroscience into experiments with artificial neural networks from the field of deep learning. Specifically, iterative magnitude pruning is used to train sparsely connected networks with 33x fewer weights without loss in performance. These are used to test and ultimately reject the hypothesis that weight sparsity alone improves image noise robustness. Recent work mitigated catastrophic forgetting using weight sparsity, activation sparsity, and active dendrite modeling. This paper replicates those findings and extends the method to train convolutional neural networks on a more challenging continual learning task. The code has been made publicly available.
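The pruning step of iterative magnitude pruning can be sketched in a few lines. This is a minimal illustration, not the paper's code: training and any weight rewinding between rounds are omitted, weights are a flat list, and the 20% per-round pruning fraction is an assumption chosen only for the arithmetic. The helper names `prune_smallest` and `rounds_for_compression` are hypothetical.

```python
import math

def prune_smallest(weights, mask, fraction):
    """Return a new mask with the smallest-magnitude `fraction`
    of the currently surviving weights switched off."""
    alive = [i for i, m in enumerate(mask) if m]
    n_prune = int(len(alive) * fraction)
    alive.sort(key=lambda i: abs(weights[i]))
    new_mask = list(mask)
    for i in alive[:n_prune]:
        new_mask[i] = 0
    return new_mask

def rounds_for_compression(ratio, fraction):
    """Number of pruning rounds needed so that the surviving share,
    (1 - fraction) ** rounds, is at most 1 / ratio."""
    return math.ceil(math.log(ratio) / -math.log(1.0 - fraction))

# One pruning round on a toy weight vector (training between rounds omitted).
weights = [0.5, -0.1, 0.3, -0.05]
mask = prune_smallest(weights, [1, 1, 1, 1], fraction=0.5)

# Under the assumed 20% per-round schedule, rounds needed for 33x fewer weights.
rounds = rounds_for_compression(33, 0.2)
```

Under that assumed schedule, 15 rounds leave about 3.5% of the weights and 16 rounds leave about 2.8%, so 16 rounds are the first to reach a 33x reduction.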

Visualizing the Loss Landscape of Winning Lottery Tickets

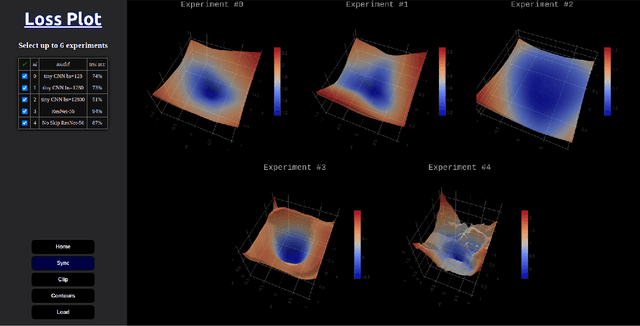

Dec 16, 2021

Abstract: The underlying loss landscapes of deep neural networks have a great impact on their training, but they have mainly been studied theoretically due to computational constraints. This work vastly reduces the time required to compute such loss landscapes, and uses them to study winning lottery tickets found via iterative magnitude pruning. We also share results that contradict previously claimed correlations between certain loss landscape projection methods and model trainability and generalization error.

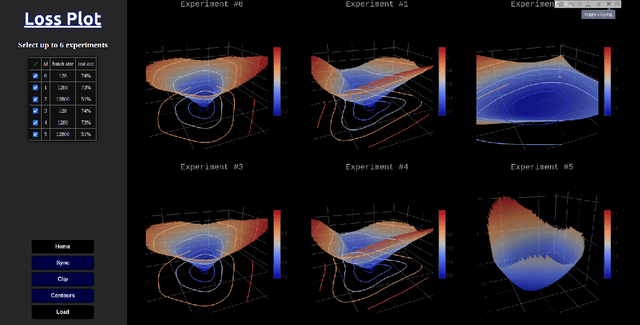

LossPlot: A Better Way to Visualize Loss Landscapes

Nov 30, 2021

Abstract: Investigations into the loss landscapes of deep neural networks are often laborious. This work documents our user-driven approach to create a platform for semi-automating this process. LossPlot accepts data in the form of a CSV file, and allows multiple trained minimizers of the loss function to be manipulated in sync. Other features include a simple yet intuitive checkbox UI, summary statistics, and the ability to control clipping, which other methods do not offer.

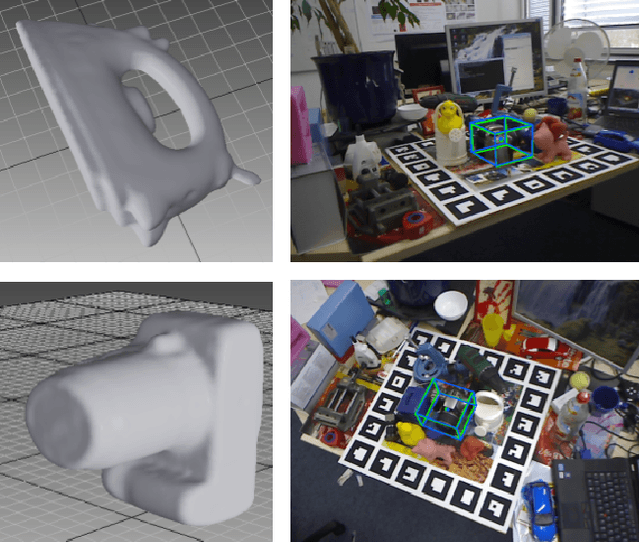

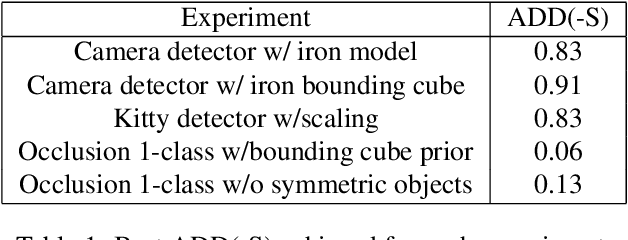

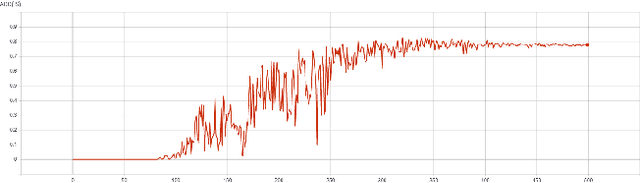

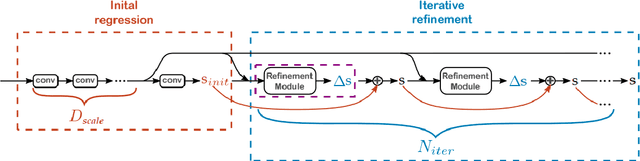

ePose: Let's Make EfficientPose More Generally Applicable

Nov 30, 2021

Abstract: EfficientPose is an impressive 3D object detection model. It has been demonstrated to be quick, scalable, and accurate, especially when considering that it uses only RGB inputs. In this paper we try to improve on EfficientPose by giving it the ability to infer an object's size, and by simplifying both the data collection and loss calculations. We evaluated ePose using the Linemod dataset and a new subset of it called "Occlusion 1-class". We also outline our current progress and thoughts about using ePose with the NuScenes and the 2017 KITTI 3D Object Detection datasets. The source code is available at https://github.com/tbd-clip/EfficientPose.
