Rishika Singh

Driver Drowsiness Detection System: An Approach By Machine Learning Application

Mar 11, 2023

Abstract: Traffic accidents are a major cause of human deaths and injuries. According to the World Health Organization, a million people worldwide die each year from traffic accident injuries. Drivers who do not get enough sleep or rest, or who feel weary, may fall asleep behind the wheel, endangering both themselves and other road users. Research on road accidents indicates that major accidents occur because of drowsiness while driving. Fatigued driving is observed to be the main cause of drowsiness, and drowsiness has become a principal factor in the rising number of road accidents. This is a major worldwide issue that needs to be resolved as soon as possible. The predominant goal of all such devices is to improve real-time drowsiness detection performance. Many devices have been developed to detect drowsiness, relying on different artificial intelligence algorithms. Our research likewise addresses driver drowsiness detection: the system identifies the driver's face and then tracks the eyes. The extracted eye image is matched against the dataset. Using the dataset, the system detects whether the eyes have been closed for a certain period; if so, it rings an alarm to alert the driver, and if the eyes are open after the alert, it continues tracking. A score that we set decreases while the eyes are open and increases while they are closed. This paper focuses on resolving the problem of drowsiness detection, achieving an accuracy of 80%, and helps to reduce road accidents.
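The score-based alarm logic described in the abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the class name `DrowsinessScorer`, the step size of 1 per frame, the floor at 0, and the threshold value are all assumptions, and the eye-state classifier that would supply `eyes_closed` is omitted.

```python
from dataclasses import dataclass


@dataclass
class DrowsinessScorer:
    """Running score: +1 per closed-eye frame, -1 per open-eye frame (floored at 0)."""

    alarm_threshold: int = 15  # hypothetical value, not taken from the paper
    score: int = 0

    def update(self, eyes_closed: bool) -> bool:
        """Update the score for one frame; return True if the alarm should sound."""
        if eyes_closed:
            self.score += 1
        else:
            # Assumption: the score never drops below zero.
            self.score = max(0, self.score - 1)
        return self.score > self.alarm_threshold


def run(frames, threshold=15):
    """Feed a sequence of per-frame eye states and collect the alarm decisions."""
    scorer = DrowsinessScorer(alarm_threshold=threshold)
    return [scorer.update(closed) for closed in frames]
```

With a low threshold such as `run([True] * 4 + [False] * 2, threshold=2)`, the alarm turns on once the score exceeds the threshold and turns off again after enough open-eye frames, which mirrors the "continue tracking after the alert" behavior the abstract describes.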

HyperFair: A Soft Approach to Integrating Fairness Criteria

Sep 05, 2020

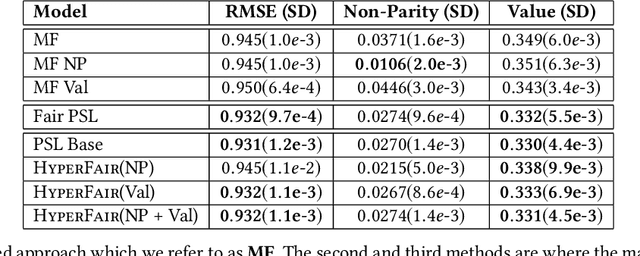

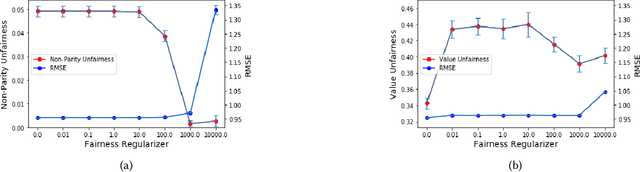

Abstract: Recommender systems are being employed across an increasingly diverse set of domains that can potentially make a significant social and individual impact. For this reason, considering fairness is a critical step in the design and evaluation of such systems. In this paper, we introduce HyperFair, a general framework for enforcing soft fairness constraints in a hybrid recommender system. HyperFair models integrate variations of fairness metrics as a regularization of a joint inference objective function. We implement our approach using probabilistic soft logic and show that it is particularly well-suited for this task, as it is expressive and structural constraints can be added to the system in a concise and interpretable manner. We propose two ways to employ the methods we introduce: first, as an extension of a probabilistic soft logic recommender system template; second, as a fair retrofitting technique that can be used to improve the fairness of predictions from a black-box model. We empirically validate our approach by implementing multiple HyperFair hybrid recommenders and comparing them to a state-of-the-art fair recommender. We also run experiments showing the effectiveness of our methods for the task of retrofitting a black-box model and the trade-off between the amount of fairness enforced and the prediction performance.
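The general idea of adding a fairness metric as a soft regularizer on a prediction objective can be illustrated with a small sketch. This is not HyperFair's probabilistic soft logic formulation: the function name `regularized_loss`, the squared-error term, the two-group setup, and the squared gap between group mean predictions are all assumptions chosen as a simple stand-in.

```python
def regularized_loss(preds, targets, groups, fairness_weight):
    """Mean squared error plus a weighted penalty on the gap between group means.

    groups holds 0 or 1 per prediction; both groups must be non-empty.
    fairness_weight controls how strongly the soft fairness term is enforced.
    """
    n = len(preds)
    mse = sum((p - t) ** 2 for p, t in zip(preds, targets)) / n

    # Hypothetical fairness term: difference in mean predicted rating
    # between the two groups, squared so that either direction is penalized.
    group0 = [p for p, g in zip(preds, groups) if g == 0]
    group1 = [p for p, g in zip(preds, groups) if g == 1]
    gap = sum(group0) / len(group0) - sum(group1) / len(group1)

    return mse + fairness_weight * gap ** 2
```

Setting `fairness_weight` to 0 recovers the plain prediction loss, while larger values trade prediction accuracy for a smaller group gap, which is the kind of trade-off the abstract refers to.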
