Richard Pymar

Joint Shapley values: a measure of joint feature importance

Jul 23, 2021

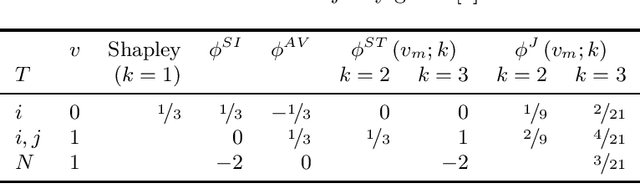

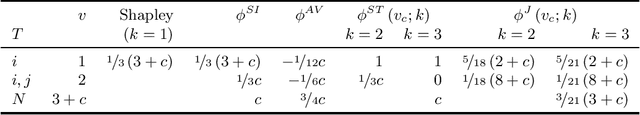

Abstract: The Shapley value is one of the most widely used model-agnostic measures of feature importance in explainable AI: it has clear axiomatic foundations, is guaranteed to exist uniquely, and has a clear interpretation as a feature's average effect on a model's prediction. We introduce joint Shapley values, which directly extend the Shapley axioms. This extension preserves the classic Shapley value's intuitions: joint Shapley values measure a set of features' average effect on a model's prediction. We prove the uniqueness of joint Shapley values for any order of explanation. Results for games show that joint Shapley values present different insights from existing interaction indices, which assess the effect of a feature within a set of features. Deriving joint Shapley values in ML attribution problems thus gives us the first measure of the joint effect of sets of features on model predictions. In a dataset with binary features, we present a presence-adjusted method for calculating global values that retains the efficiency property.
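For readers unfamiliar with the baseline being extended, the following is a minimal sketch of the classic (single-feature) Shapley value, computed by averaging each player's marginal contribution over all orderings. It does not implement the joint Shapley values introduced in the paper. The function name `shapley_values` and the toy game `toy_game` are hypothetical and chosen only for illustration.

```python
from itertools import permutations
from math import factorial


def shapley_values(players, value):
    """Classic Shapley values via brute-force averaging over all orderings.

    `players` is a list of hashable labels; `value` maps a frozenset of
    players to a number. Cost grows factorially, so this is for toy games only.
    """
    totals = {p: 0.0 for p in players}
    for order in permutations(players):
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal contribution of p when it joins the current coalition.
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    n_orders = factorial(len(players))
    return {p: t / n_orders for p, t in totals.items()}


# Hypothetical toy game: "a" and "b" create value only when both are present;
# "c" never contributes anything.
def toy_game(coalition):
    return 1.0 if {"a", "b"} <= coalition else 0.0


phi = shapley_values(["a", "b", "c"], toy_game)
print(phi)
```

In this toy game the individual values split the worth of the pair equally between "a" and "b", and the values sum to the worth of the full set (the efficiency property the abstract refers to). The value that "a" and "b" generate only as a set is the kind of joint effect the abstract says joint Shapley values are designed to measure directly.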
