Richard N. Zare

Optimization of Molecules via Deep Reinforcement Learning

Oct 23, 2018

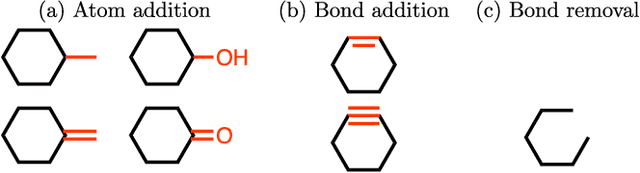

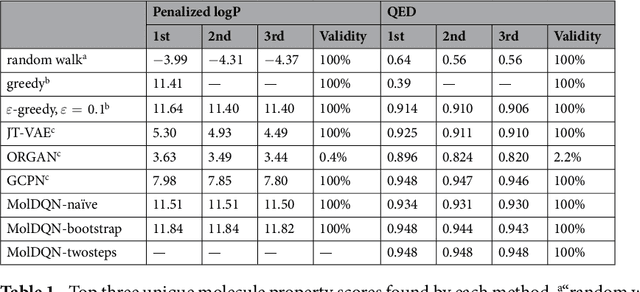

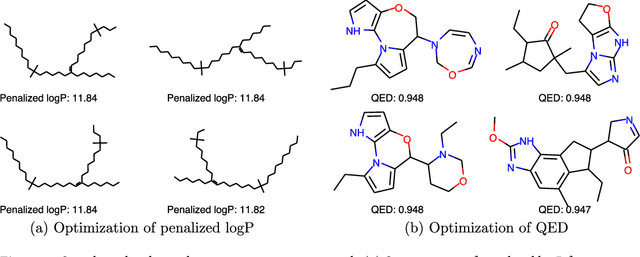

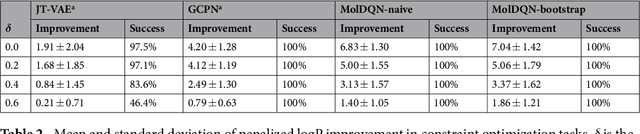

Abstract:We present a framework, which we call Molecule Deep $Q$-Networks (MolDQN), for molecule optimization by combining domain knowledge of chemistry and state-of-the-art reinforcement learning techniques (prioritized experience replay, double $Q$-learning, and randomized value functions). We directly define modifications on molecules, thereby ensuring 100% chemical validity. Further, we operate without pre-training on any dataset to avoid possible bias from the choice of that set. As a result, our model outperforms several other state-of-the-art algorithms by having a higher success rate of acquiring molecules with better properties. Inspired by problems faced during medicinal chemistry lead optimization, we extend our model with multi-objective reinforcement learning, which maximizes drug-likeness while maintaining similarity to the original molecule. We further show the path through chemical space to achieve optimization for a molecule to understand how the model works.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge