Richard Baker

Signal Injection Attacks against CCD Image Sensors

Aug 19, 2021

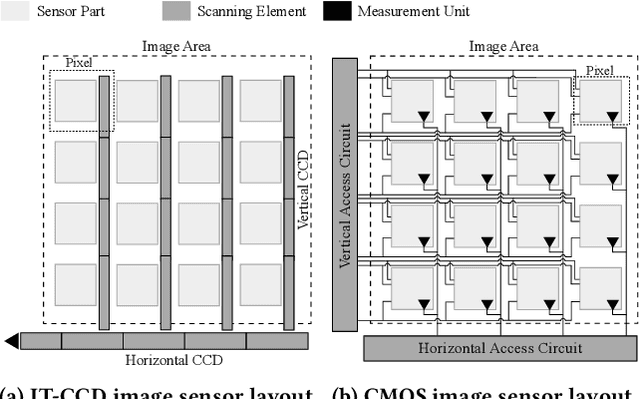

Abstract: Since cameras have become a crucial part of many safety-critical systems and applications, such as autonomous vehicles and surveillance, a large body of academic and non-academic work has shown attacks against their main component, the image sensor. However, these attacks are limited to coarse-grained and often suspicious injections because light is used as the attack vector. Furthermore, due to the nature of optical attacks, they require line of sight between the adversary and the target camera. In this paper, we present a novel post-transducer signal injection attack against CCD image sensors, as they are used in professional, scientific, and even military settings. We show how electromagnetic emanation can be used to manipulate the image information captured by a CCD image sensor with granularity down to the brightness of individual pixels. We study the feasibility of our attack and then demonstrate its effects in the scenario of automatic barcode scanning. Our results indicate that the injected distortion can disrupt automated vision-based intelligent systems.

They See Me Rollin': Inherent Vulnerability of the Rolling Shutter in CMOS Image Sensors

Jan 25, 2021

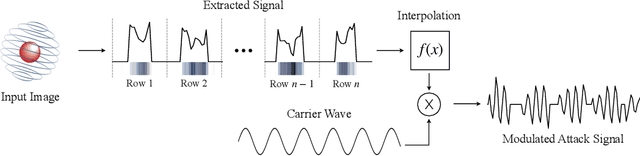

Abstract: Cameras have become a fundamental component of vision-based intelligent systems. As a balance between production costs and image quality, most modern cameras use Complementary Metal-Oxide Semiconductor (CMOS) image sensors that implement an electronic rolling shutter mechanism, where image rows are captured consecutively rather than all at once. In this paper, we describe how the electronic rolling shutter can be exploited using a bright, modulated light source (e.g., an inexpensive, off-the-shelf laser) to inject fine-grained image disruptions. These disruptions substantially affect camera-based computer vision systems, where high-frequency data is crucial in extracting informative features from objects. We study the fundamental factors affecting a rolling shutter attack, such as environmental conditions, angle of the incident light, laser-to-camera distance, and aiming precision. We demonstrate how these factors affect the intensity of the injected distortion and how an adversary can take them into account by modeling the properties of the camera. We introduce a general pipeline of a practical attack, which consists of: (i) profiling several properties of the target camera and (ii) partially simulating the attack to find distortions that satisfy the adversary's goal. Then, we instantiate the attack for the scenario of object detection, where the adversary's goal is to maximally disrupt the detection of objects in the image. We show that the adversary can modulate the laser to hide up to 75% of objects perceived by state-of-the-art detectors while controlling the amount of perturbation to keep the attack inconspicuous. Our results indicate that rolling shutter attacks can substantially reduce the performance and reliability of vision-based intelligent systems.
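
The row-by-row capture described in this abstract can be illustrated with a toy timing model. The sketch below is not the authors' simulation; it is a minimal illustration under stated assumptions: the light source is an ideal square wave, each row integrates light over its own exposure window, and the function names and parameters (`row_delay`, `exposure`, `period`, `duty`, `gain`) are hypothetical. Ambient light, optics, and sensor noise are ignored.

```python
def on_time(t0, t1, period, duty):
    """Time within [t0, t1] during which an ideal square wave is on.

    Assumption: the wave is on for the first duty*period of every period.
    """
    def cumulative(t):
        # Total on-time accumulated in [0, t].
        full, rem = divmod(t, period)
        return full * duty * period + min(rem, duty * period)

    return cumulative(t1) - cumulative(t0)


def rolling_shutter_rows(num_rows, row_delay, exposure, period, duty, gain=255.0):
    """Added brightness per row when each row starts exposing row_delay later."""
    rows = []
    for r in range(num_rows):
        start = r * row_delay  # rows are captured consecutively
        frac = on_time(start, start + exposure, period, duty) / exposure
        rows.append(min(255.0, gain * frac))
    return rows


def global_shutter_rows(num_rows, exposure, period, duty, gain=255.0):
    """Same light source, but every row shares one exposure window."""
    frac = on_time(0.0, exposure, period, duty) / exposure
    return [min(255.0, gain * frac)] * num_rows


if __name__ == "__main__":
    # Hypothetical timing values in arbitrary units.
    print(rolling_shutter_rows(8, 1.0, 1.0, 4.0, 0.5))
    print(global_shutter_rows(8, 1.0, 4.0, 0.5))
```

In this toy model the rolling shutter output alternates between bright and dark bands of rows, while the global shutter output is uniform, which is why modulating the light in time translates into row-level structure in the image.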
