Ricardo Kleinlein

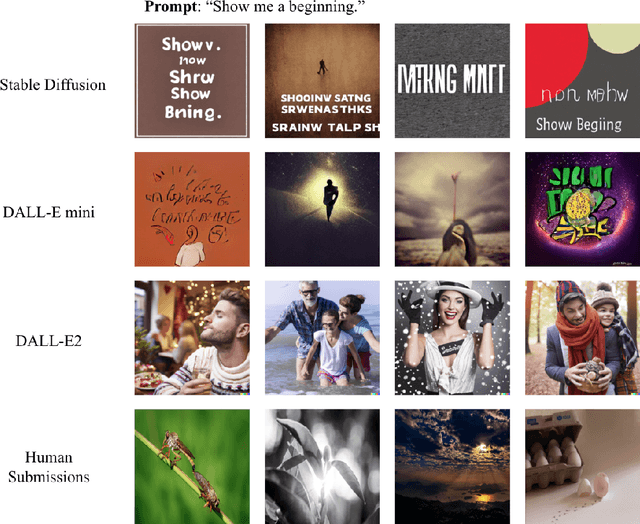

Language Does More Than Describe: On The Lack Of Figurative Speech in Text-To-Image Models

Oct 19, 2022

Abstract: The impressive capacity shown by recent text-to-image diffusion models to generate high-quality pictures from textual input prompts has fuelled the debate about the very definition of art. Nonetheless, these models have been trained using text data collected from content-based labelling protocols that focus on describing the items and actions in an image but neglect any subjective appraisal. Consequently, these automatic systems need rigorous descriptions of the elements and the pictorial style of the image to be generated, and fail to deliver otherwise. As potential indicators of the actual artistic capabilities of current generative models, we characterise the sentimentality, objectiveness and degree of abstraction of publicly available text data used to train current text-to-image diffusion models. Considering the sharp difference observed between their language style and that typically employed in artistic contexts, we suggest generative models should incorporate additional sources of subjective information in their training in order to overcome (or at least alleviate) some of their current limitations, thus effectively unleashing truly artistic and creative generation.

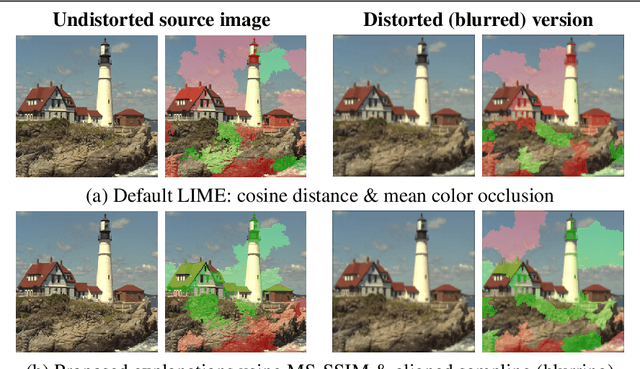

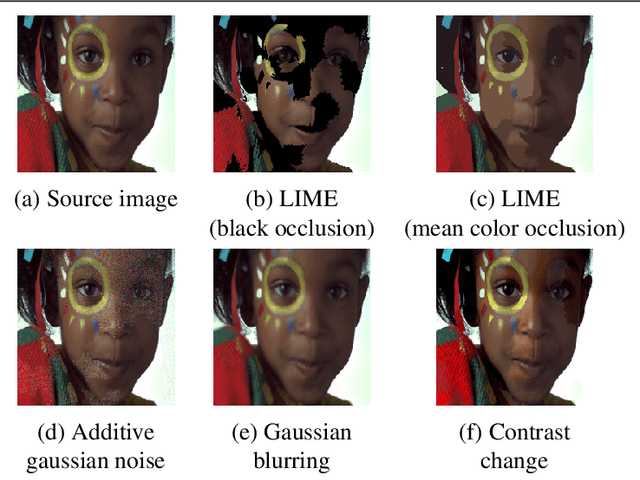

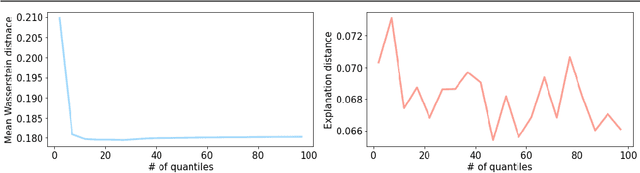

Sampling Based On Natural Image Statistics Improves Local Surrogate Explainers

Aug 08, 2022

Abstract: Many problems in computer vision have recently been tackled using models whose predictions cannot be easily interpreted, most commonly deep neural networks. Surrogate explainers are a popular post-hoc interpretability method to further understand how a model arrives at a particular prediction. By training a simple, more interpretable model to locally approximate the decision boundary of a non-interpretable system, we can estimate the relative importance of the input features on the prediction. Focusing on images, surrogate explainers, e.g., LIME, generate a local neighbourhood around a query image by sampling in an interpretable domain. However, these interpretable domains have traditionally been derived exclusively from the intrinsic features of the query image, without taking into consideration the manifold of the data the non-interpretable model has been exposed to in training (or, more generally, the manifold of real images). This leads to suboptimal surrogates trained on potentially low-probability images. We address this limitation by aligning the local neighbourhood on which the surrogate is trained with the original training data distribution, even when this distribution is not accessible. We propose two approaches to do so, namely (1) altering the method for sampling the local neighbourhood and (2) using perceptual metrics to convey some of the properties of the distribution of natural images.
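To make the idea of a local surrogate concrete, the following is a minimal sketch of the generic LIME-style baseline the abstract describes, not the sampling scheme the authors propose. It assumes the interpretable domain is a vector of binary on/off flags (for instance, one per superpixel), and the names `fit_local_surrogate`, `black_box` and `kernel_width` are hypothetical choices made for illustration only.

```python
import numpy as np

def fit_local_surrogate(black_box, n_features, n_samples=500,
                        kernel_width=0.75, seed=0):
    """Fit a weighted linear surrogate around an (assumed) all-ones query.

    black_box: callable mapping a binary mask of length n_features to a score.
    Returns (importances, intercept) of the linear surrogate.
    """
    rng = np.random.default_rng(seed)
    # Each mask keeps (1) or removes (0) an interpretable component.
    masks = rng.integers(0, 2, size=(n_samples, n_features))
    masks[0] = 1  # include the unperturbed query itself
    preds = np.array([black_box(m) for m in masks], dtype=float)

    # Proximity to the query: fraction of components that were removed.
    dist = 1.0 - masks.mean(axis=1)
    weights = np.exp(-(dist ** 2) / kernel_width ** 2)

    # Weighted least squares with an intercept column.
    X = np.hstack([masks, np.ones((n_samples, 1))])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], preds * sw, rcond=None)
    return coef[:-1], coef[-1]

if __name__ == "__main__":
    # Toy stand-in for a non-interpretable model (an assumption for the demo).
    toy_model = lambda m: 2.0 * m[0] - 1.0 * m[2] + 0.5
    importances, intercept = fit_local_surrogate(toy_model, n_features=4)
    print(importances, intercept)
```

Because the masks here are drawn uniformly at random, many of the perturbed inputs may correspond to unrealistic images, which is the limitation the abstract points to.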
