Ricardo Gudwin

Binarized Neural Networks Converge Toward Algorithmic Simplicity: Empirical Support for the Learning-as-Compression Hypothesis

May 30, 2025

Abstract: Understanding and controlling the informational complexity of neural networks is a central challenge in machine learning, with implications for generalization, optimization, and model capacity. While most approaches rely on entropy-based loss functions and statistical metrics, these measures often fail to capture deeper, causally relevant algorithmic regularities embedded in network structure. We propose a shift toward algorithmic information theory, using Binarized Neural Networks (BNNs) as a first proxy. Grounded in algorithmic probability (AP) and the universal distribution it defines, our approach characterizes learning dynamics through a formal, causally grounded lens. We apply the Block Decomposition Method (BDM) -- a scalable approximation of algorithmic complexity based on AP -- and demonstrate that it more closely tracks structural changes during training than entropy, consistently exhibiting stronger correlations with training loss across varying model sizes and randomized training runs. These results support the view of training as a process of algorithmic compression, where learning corresponds to the progressive internalization of structured regularities. In doing so, our work offers a principled estimate of learning progression and suggests a framework for complexity-aware learning and regularization, grounded in first principles from information theory, complexity, and computability.

Evaluating Training in Binarized Neural Networks Through the Lens of Algorithmic Information Theory

May 27, 2025

Abstract: Understanding and controlling the informational complexity of neural networks is a central challenge in machine learning, with implications for generalization, optimization, and model capacity. While most approaches rely on entropy-based loss functions and statistical metrics, these measures often fail to capture deeper, causally relevant algorithmic regularities embedded in network structure. We propose a shift toward algorithmic information theory, using Binarized Neural Networks (BNNs) as a first proxy. Grounded in algorithmic probability (AP) and the universal distribution it defines, our approach characterizes learning dynamics through a formal, causally grounded lens. We apply the Block Decomposition Method (BDM) -- a scalable approximation of algorithmic complexity based on AP -- and demonstrate that it more closely tracks structural changes during training than entropy, consistently exhibiting stronger correlations with training loss across varying model sizes and randomized training runs. These results support the view of training as a process of algorithmic compression, where learning corresponds to the progressive internalization of structured regularities. In doing so, our work offers a principled estimate of learning progression and suggests a framework for complexity-aware learning and regularization, grounded in first principles from information theory, complexity, and computability.

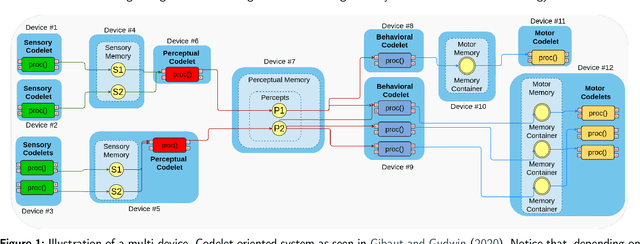

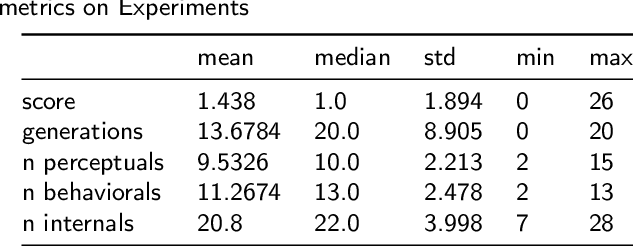

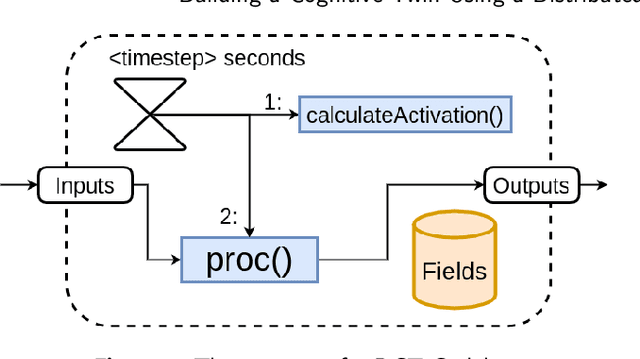

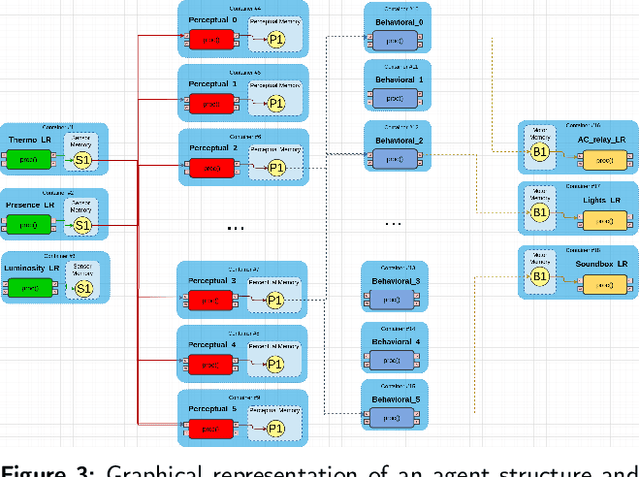

Building a Cognitive Twin Using a Distributed Cognitive System and an Evolution Strategy

Feb 03, 2025

Abstract: This work presents a technique to build interaction-based Cognitive Twins (a computational version of an external agent) using input-output training and an Evolution Strategy on top of a framework for distributed Cognitive Architectures. Here, we show that it is possible to orchestrate many simple physical and virtual devices to achieve good approximations of a person's interaction behavior by training the system in an end-to-end fashion, and we present performance metrics. The generated Cognitive Twin may later be used to automate tasks, to generate more realistic human-like artificial agents, or to further investigate the person's behaviors.

* first submitted on 09/22/2022, published on 01/20/2025

Learning Goal-based Movement via Motivational-based Models in Cognitive Mobile Robots

Feb 20, 2023

Abstract: Humans have needs that motivate their behavior according to intensity and context. However, we also form preferences associated with each action's perceived pleasure, and these preferences can change over time. This makes decision-making more complex, requiring learning to balance needs and preferences according to the context. To understand how this process works and to enable the development of robots with a motivational-based learning model, we computationally model a motivation theory proposed by Hull. In this model, the agent (an abstraction of a mobile robot) is motivated to keep itself in a state of homeostasis. We added hedonic dimensions to see how preferences affect decision-making, and we employed reinforcement learning to train our motivational-based agents. We ran three agents with energy decay rates representing different metabolisms in two different environments to see the impact on their strategy, movement, and behavior. The results show that the agent learned better strategies in the environment that offers choices better suited to its metabolism. The use of pleasure in the motivational mechanism significantly impacted behavior learning, mainly for slow-metabolism agents. When survival is at risk, the agent ignores pleasure and equilibrium, hinting at how to behave in harsh scenarios.
