Reza Jalayer

A Review on Sound Source Localization in Robotics: Focusing on Deep Learning Methods

Jul 01, 2025

Abstract:Sound source localization (SSL) adds a spatial dimension to auditory perception, allowing a system to pinpoint the origin of speech, machinery noise, warning tones, or other acoustic events, capabilities that facilitate robot navigation, human-machine dialogue, and condition monitoring. While existing surveys provide valuable historical context, they typically address general audio applications and do not fully account for robotic constraints or the latest advancements in deep learning. This review addresses these gaps by offering a robotics-focused synthesis, emphasizing recent progress in deep learning methodologies. We start by reviewing classical methods such as Time Difference of Arrival (TDOA), beamforming, Steered-Response Power (SRP), and subspace analysis. Subsequently, we delve into modern machine learning (ML) and deep learning (DL) approaches, discussing traditional ML and neural networks (NNs), convolutional neural networks (CNNs), convolutional recurrent neural networks (CRNNs), and emerging attention-based architectures. We then explore data and training strategies, the two cornerstones of DL-based SSL. Studies are further categorized by robot type and application domain to help researchers identify relevant work for their specific contexts. Finally, we highlight the current challenges in SSL in general, regarding environmental robustness, sound source multiplicity, and specific implementation constraints in robotics, as well as data and learning strategies in DL-based SSL. We also sketch promising directions to offer an actionable roadmap toward robust, adaptable, efficient, and explainable DL-based SSL for next-generation robots.
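
As a rough illustration of the classical TDOA idea named in the abstract, the sketch below estimates the delay between two microphone signals by brute-force cross-correlation. It is not taken from the review: the function name `estimate_delay`, the toy signals, and the 16 kHz sampling rate are assumptions chosen only for the example.

```python
def estimate_delay(ref, sig, max_lag):
    """Return the lag (in samples) that maximizes the cross-correlation
    between ref and sig. A positive lag means sig arrives later than ref."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n, r in enumerate(ref):
            m = n + lag
            if 0 <= m < len(sig):
                score += r * sig[m]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Toy example (hypothetical data): the same pulse reaches mic 2 three samples later.
ref = [0, 0, 1, 2, 1, 0, 0, 0, 0, 0]
sig = [0, 0, 0, 0, 0, 1, 2, 1, 0, 0]

SAMPLE_RATE_HZ = 16_000  # assumed sampling rate
lag_samples = estimate_delay(ref, sig, max_lag=5)
tdoa_seconds = lag_samples / SAMPLE_RATE_HZ
```

With a known microphone spacing, such a delay can be converted into a direction-of-arrival estimate; practical systems typically use more robust weighted correlations instead of this plain version.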

Testing Human-Hand Segmentation on In-Distribution and Out-of-Distribution Data in Human-Robot Interactions Using a Deep Ensemble Model

Jan 13, 2025

Abstract:Reliable detection and segmentation of human hands are critical for enhancing safety and facilitating advanced interactions in human-robot collaboration. Current research predominantly evaluates hand segmentation under in-distribution (ID) data, which reflects the training data of deep learning (DL) models. However, this approach fails to address out-of-distribution (OOD) scenarios that often arise in real-world human-robot interactions. In this study, we present a novel approach by evaluating the performance of pre-trained DL models under both ID data and more challenging OOD scenarios. To mimic realistic industrial scenarios, we designed a diverse dataset featuring simple and cluttered backgrounds with industrial tools, varying numbers of hands (0 to 4), and hands with and without gloves. For OOD scenarios, we incorporated unique and rare conditions such as finger-crossing gestures and motion blur from fast-moving hands, addressing both epistemic and aleatoric uncertainties. To ensure multiple points of view (PoVs), we utilized both egocentric cameras, mounted on the operator's head, and static cameras to capture RGB images of human-robot interactions. This approach allowed us to account for multiple camera perspectives while also evaluating the performance of models trained on existing egocentric datasets as well as static-camera datasets. For segmentation, we used a deep ensemble model composed of UNet and RefineNet as base learners. Performance evaluation was conducted using segmentation metrics and uncertainty quantification via predictive entropy. Results revealed that models trained on industrial datasets outperformed those trained on non-industrial datasets, highlighting the importance of context-specific training. Although all models struggled with OOD scenarios, those trained on industrial datasets demonstrated significantly better generalization.
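
To make the uncertainty measure mentioned above concrete, here is a minimal sketch of predictive entropy for a deep ensemble: the class probabilities of the members are averaged, and the entropy of that average is taken. The function name and the two-class toy probabilities are assumptions for illustration only; the paper's actual pipeline may differ.

```python
import math


def predictive_entropy(member_probs):
    """Entropy (in nats) of the ensemble-averaged class distribution.

    member_probs: one probability vector per ensemble member, all for the
    same pixel or sample.
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    mean = [sum(p[c] for p in member_probs) / n_members for c in range(n_classes)]
    # Terms with zero probability contribute nothing to the entropy.
    return -sum(q * math.log(q) for q in mean if q > 0.0)


# Hypothetical [background, hand] probabilities from two base learners.
members_agree = [[0.99, 0.01], [0.98, 0.02]]
members_disagree = [[0.95, 0.05], [0.05, 0.95]]

low_uncertainty = predictive_entropy(members_agree)
high_uncertainty = predictive_entropy(members_disagree)
```

When the members disagree, the averaged distribution flattens and the entropy approaches its maximum (ln 2 for two classes), which is the kind of signal that can flag OOD inputs.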

Evaluating deep learning models for fault diagnosis of a rotating machinery with epistemic and aleatoric uncertainty

Dec 25, 2024

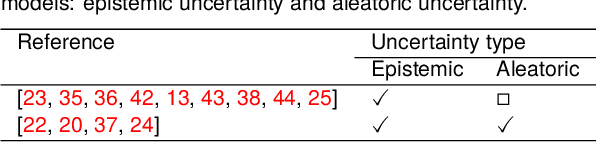

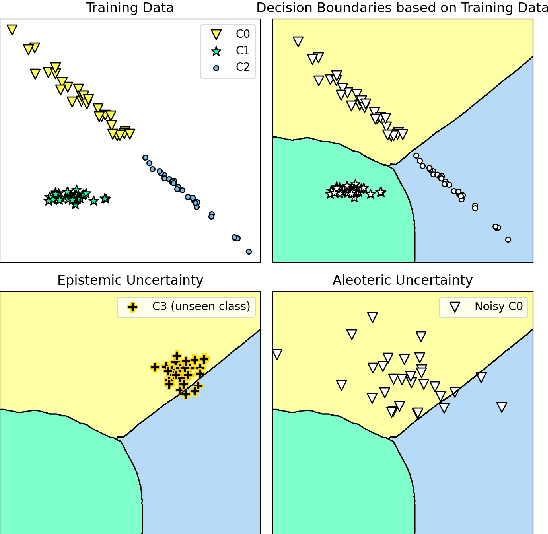

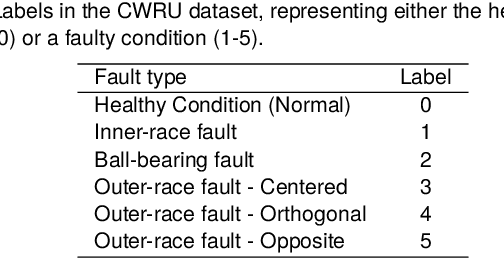

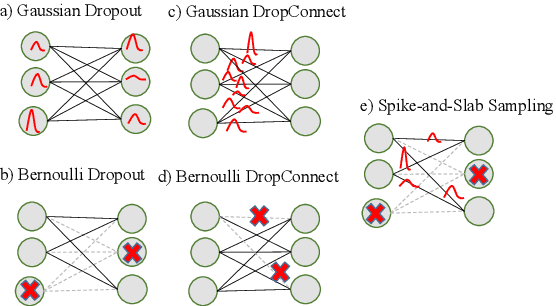

Abstract:Uncertainty-aware deep learning (DL) models have recently gained attention in fault diagnosis as a way to promote the reliable detection of faults when out-of-distribution (OOD) data arise from unseen faults (epistemic uncertainty) or the presence of noise (aleatoric uncertainty). In this paper, we present the first comprehensive comparative study of state-of-the-art uncertainty-aware DL architectures for fault diagnosis in rotating machinery, where different scenarios affected by epistemic uncertainty and different types of aleatoric uncertainty are investigated. The selected architectures include sampling by dropout, Bayesian neural networks, and deep ensembles. Moreover, to distinguish between in-distribution and OOD data in the different scenarios, two uncertainty thresholds, one of which is introduced in this paper, are alternatively applied. Our empirical findings offer guidance to practitioners and researchers who have to deploy real-world uncertainty-aware fault diagnosis systems. In particular, they reveal that, in the presence of epistemic uncertainty, all DL models are capable of effectively detecting, on average, a substantial portion of OOD data across all the scenarios. However, deep ensemble models show superior performance, independently of the uncertainty threshold used for discrimination. In the presence of aleatoric uncertainty, the noise level plays an important role. Specifically, low noise levels hinder the models' ability to effectively detect OOD data. Even in this case, however, deep ensemble models exhibit a milder degradation in performance, dominating the others. These results, combined with their shorter inference time, make deep ensemble architectures the preferred choice.

Automatic Visual Inspection of Rare Defects: A Framework based on GP-WGAN and Enhanced Faster R-CNN

May 02, 2021

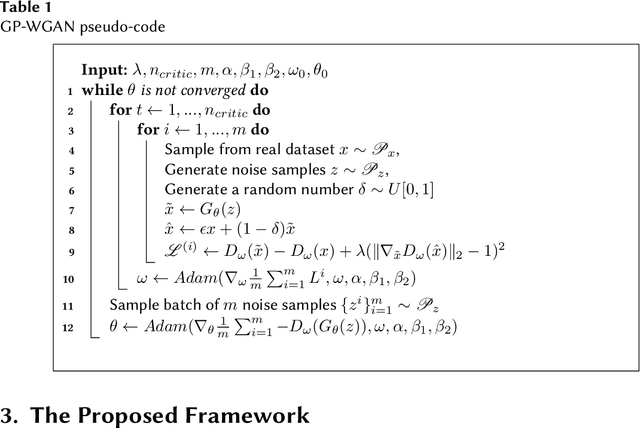

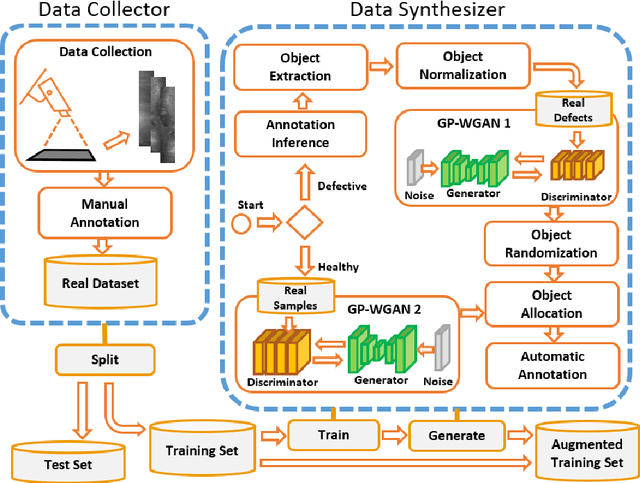

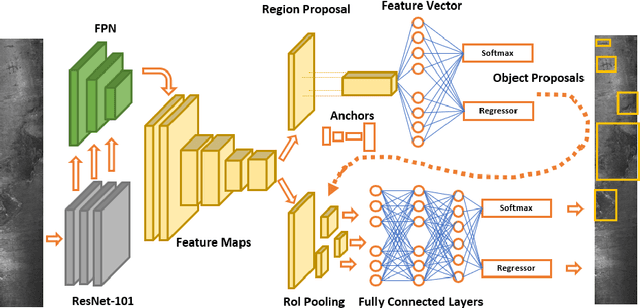

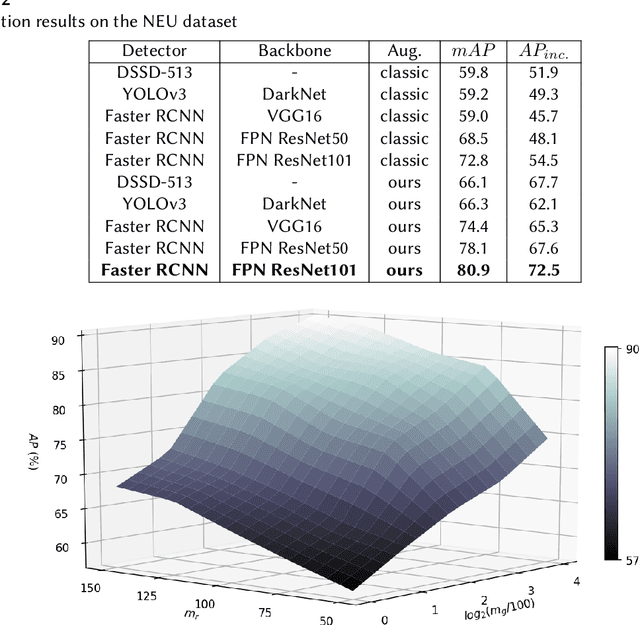

Abstract:A current trend in industries such as semiconductors and foundry is to shift their visual inspection processes to Automatic Visual Inspection (AVI) systems, to reduce their costs, mistakes, and dependency on human experts. This paper proposes a two-stage fault diagnosis framework for AVI systems. In the first stage, a generation model is designed to synthesize new samples based on real samples. The proposed augmentation algorithm extracts objects from the real samples and blends them randomly, to generate new samples and enhance the performance of the image processor. In the second stage, an improved deep learning architecture based on Faster R-CNN, Feature Pyramid Network (FPN), and a Residual Network is proposed to perform object detection on the enhanced dataset. The performance of the algorithm is validated and evaluated on two multi-class datasets. Experiments performed over a range of imbalance severities demonstrate the superiority of the proposed framework compared to other solutions.
