Remi R. Lam

Contour location via entropy reduction leveraging multiple information sources

Oct 24, 2018

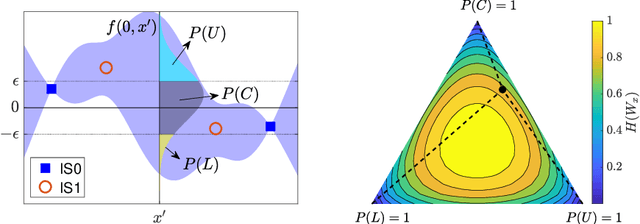

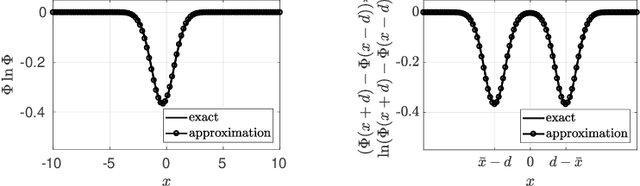

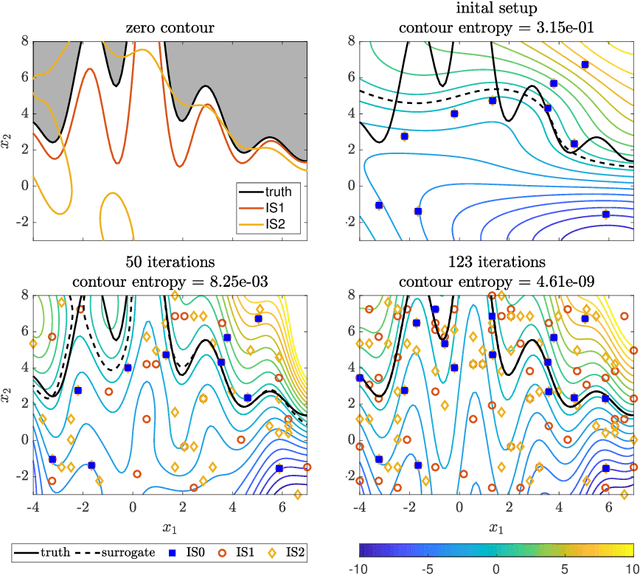

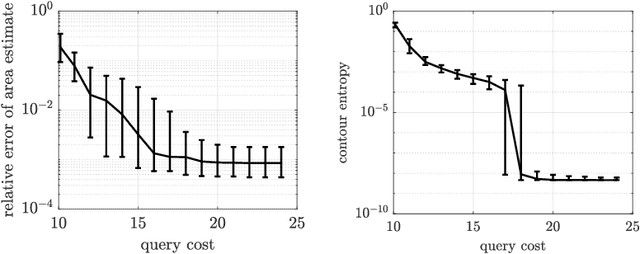

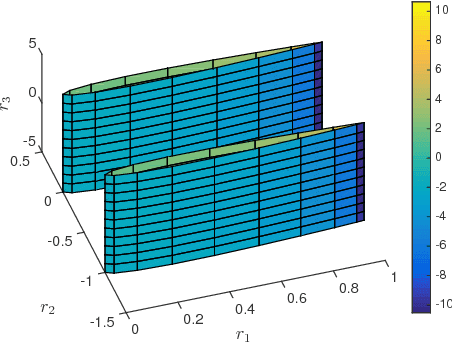

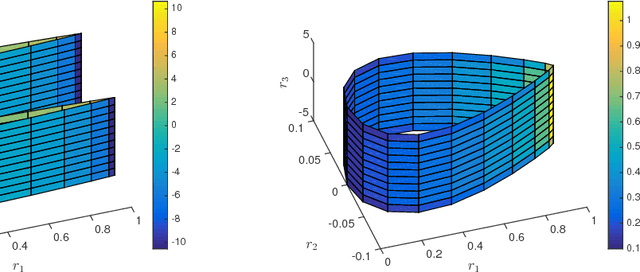

Abstract: We introduce an algorithm to locate contours of functions that are expensive to evaluate. The problem of locating contours arises in many applications, including classification, constrained optimization, and performance analysis of mechanical and dynamical systems (reliability, probability of failure, stability, etc.). Our algorithm locates contours using information from multiple sources, which are available in the form of relatively inexpensive, biased, and possibly noisy approximations to the original function. Considering multiple information sources can lead to significant cost savings. We also introduce the concept of contour entropy, a formal measure of uncertainty about the location of the zero contour of a function approximated by a statistical surrogate model. Our algorithm locates contours efficiently by maximizing the reduction of contour entropy per unit cost.

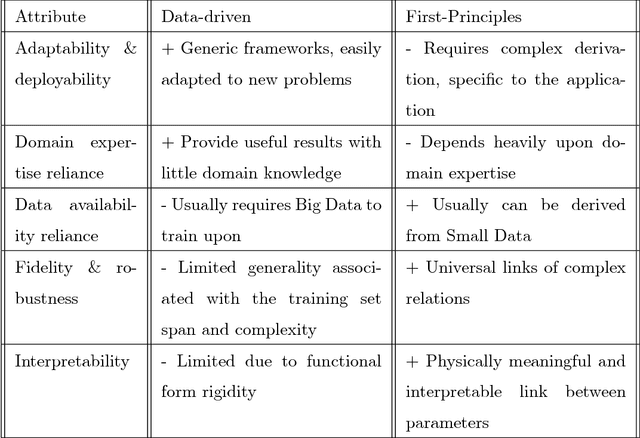

Should You Derive, Or Let the Data Drive? An Optimization Framework for Hybrid First-Principles Data-Driven Modeling

Nov 12, 2017

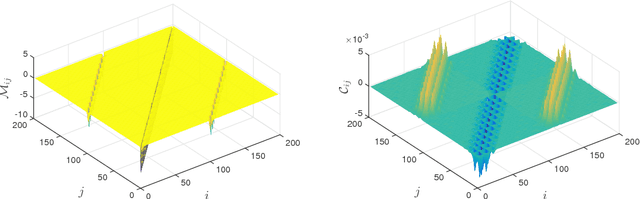

Abstract: Mathematical models are used extensively for diverse tasks including analysis, optimization, and decision making. Frequently, those models are principled but imperfect representations of reality. This is due either to an incomplete physical description of the underlying phenomenon (simplified governing equations, defective boundary conditions, etc.) or to numerical approximations (discretization, linearization, round-off error, etc.). Model misspecification can lead to erroneous model predictions and, in turn, to suboptimal decisions in the intended end-goal task. To mitigate this effect, one can amend the available model using limited data produced by experiments or higher-fidelity models. A large body of research has focused on estimating explicit model parameters. This work takes a different perspective and targets the construction of a correction model operator with implicit attributes. We investigate the case where the end goal is inversion and illustrate how appropriate choices of properties imposed upon the correction and the corrected operator lead to improved end-goal insights.
