Ravi Balasubramanian

The Grasp Reset Mechanism: An Automated Apparatus for Conducting Grasping Trials

Feb 28, 2024

Abstract: Advancing robotic grasping and manipulation requires the ability to test algorithms and/or train learning models on large numbers of grasps. Towards the goal of more advanced grasping, we present the Grasp Reset Mechanism (GRM), a fully automated apparatus for conducting large-scale grasping trials. The GRM automates the process of resetting a grasping environment, repeatably placing an object in a fixed location and controllable 1-D orientation. It also collects data and swaps between multiple objects, enabling robust dataset collection with no human intervention. We also present a standardized state machine interface for control, which allows for integration of most manipulators with minimal effort. In addition to the physical design and corresponding software, we include a dataset of 1,020 grasps. The grasps were created with a Kinova Gen3 robot arm and Robotiq 2F-85 Adaptive Gripper to enable training of learning models and to demonstrate the capabilities of the GRM. The dataset includes ranges of grasps conducted across four objects and a variety of orientations. Manipulator states, object pose, video, and grasp success data are provided for every trial.

The Door and Drawer Reset Mechanisms: Automated Mechanisms for Testing and Data Collection

Feb 26, 2024

Abstract: Robotic manipulation in human environments is a challenging problem for researchers and industry alike. In particular, opening doors and drawers can be challenging for robots, as the size, shape, actuation, and required force are variable. Because of this, it can be difficult to collect large real-world datasets and to benchmark different control algorithms on the same hardware. In this paper we present two automated testbeds, the Door Reset Mechanism (DORM) and Drawer Reset Mechanism (DWRM), for the purpose of real-world testing and data collection. These devices are low-cost, are sensorized, operate with customized variable resistance, and come with open-source software. Additionally, we provide a dataset of over 600 grasps using the DORM and DWRM. We use this dataset to highlight how much variability can exist even with the same trial on the same hardware. This data can also serve as a source for real-world noise in simulation environments.

Measuring a Robot Hand's Graspable Region using Power and Precision Grasps

Apr 27, 2022

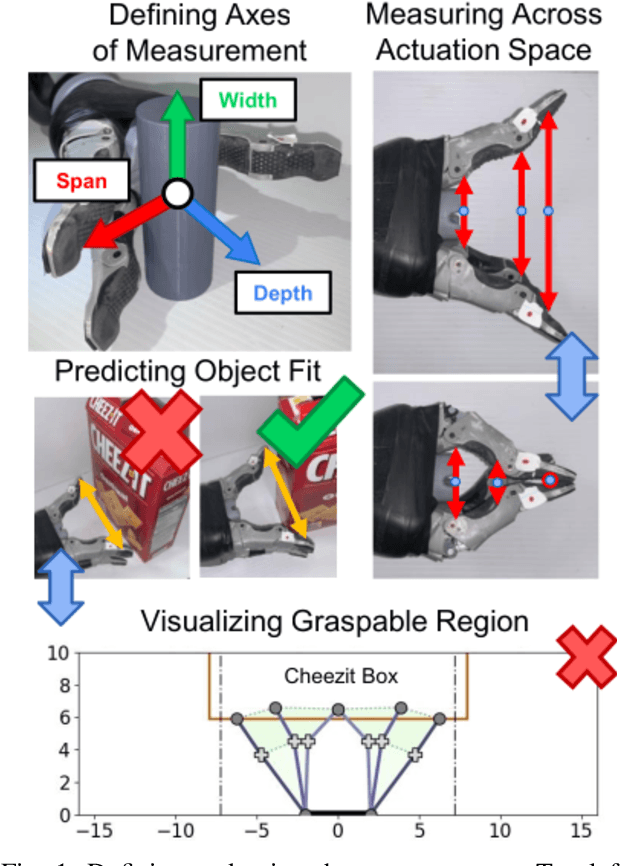

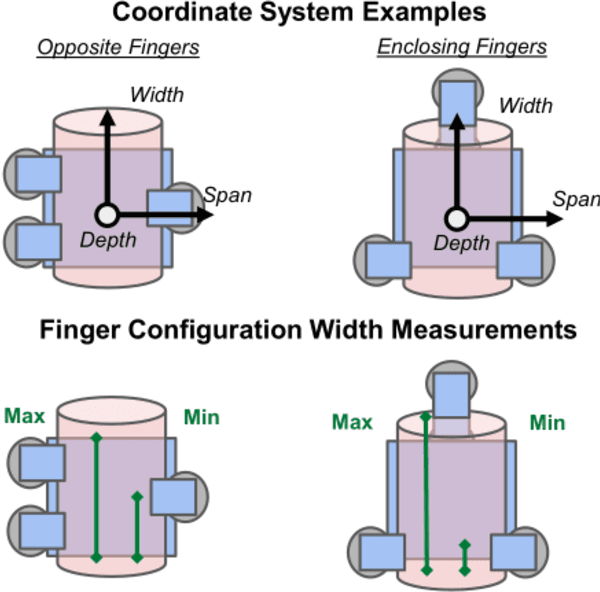

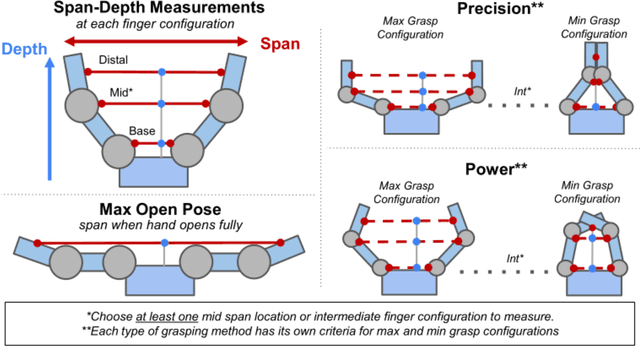

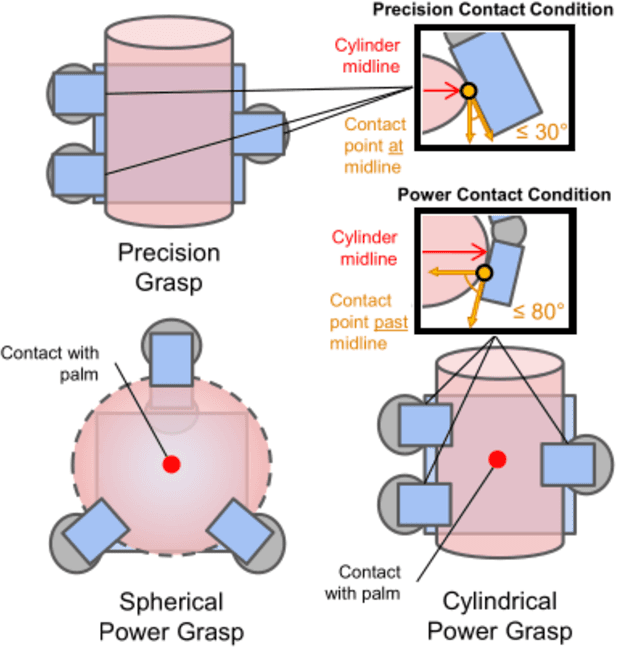

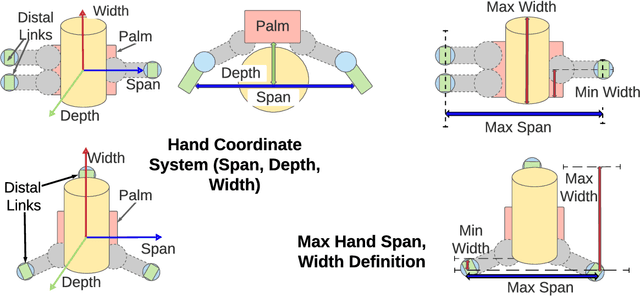

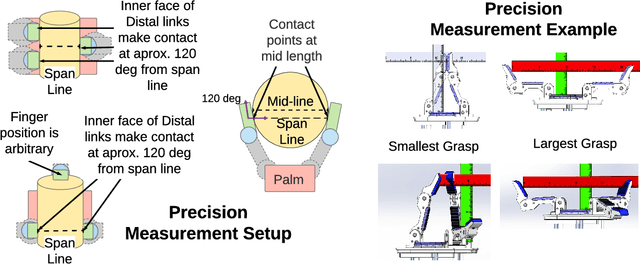

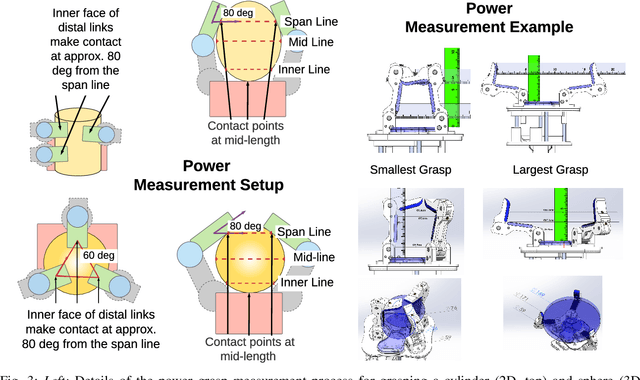

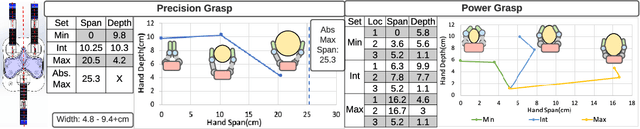

Abstract: The variety of robotic hand designs and actuation schemes makes it difficult to measure a hand's graspable volume. For end-users, this lack of standardized measurements makes it challenging to determine a priori if a robot hand is the right size for grasping an object. We propose a practical hand measurement standard, based on precision and power grasps, that is applicable to a wide variety of robot hand designs. The resulting measurements can be used both to determine if an object will fit in the hand and to characterize the size of an object with respect to the hand. Our measurement procedure uses a functional approach, based on grasping a hypothetical cylinder, that allows the measurer to choose the exact hand orientation and finger configurations that are used for the measurements. This ensures that the measurements are functionally comparable while relying on the human to determine the finger configurations that best match the intended grasp. We demonstrate using our measurement standard with three commercial robot hand designs and objects from the YCB data set.

Grasping Benchmarks: Normalizing for Object Size & Approximating Hand Workspaces

Jun 19, 2021

Abstract: The varied landscape of robotic hand designs makes it difficult to set a standard for how to measure hand size and to communicate the size of objects it can grasp. Defining consistent workspace measurements would greatly assist scientific communication in robotic grasping research because it would allow researchers to 1) quantitatively communicate an object's relative size to a hand's and 2) approximate a functional subspace of a hand's kinematic workspace in a human-readable way. The goal of this paper is to specify a measurement procedure that quantitatively captures a hand's workspace size for both a precision and power grasp. This measurement procedure uses a *functional* approach, based on a generic grasping scenario of a hypothetical object, in order to make the procedure as generalizable and repeatable as possible, regardless of the actual hand design. This functional approach lets the measurer choose the exact finger configurations and contact points that satisfy the generic grasping scenario, while ensuring that the measurements are *functionally* comparable. We demonstrate these functional measurements on seven hand configurations. Additional hand measurements and instructions are provided in a GitHub Repository.

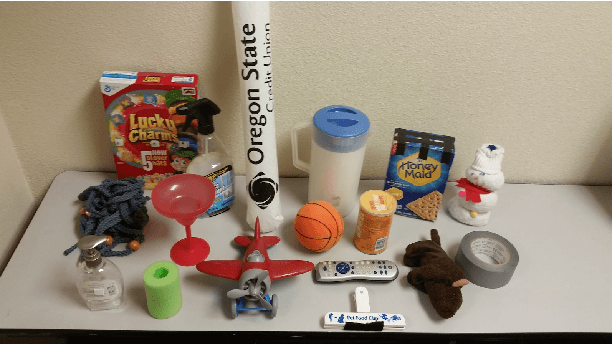

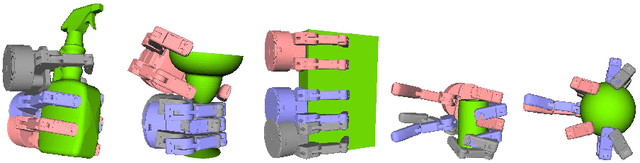

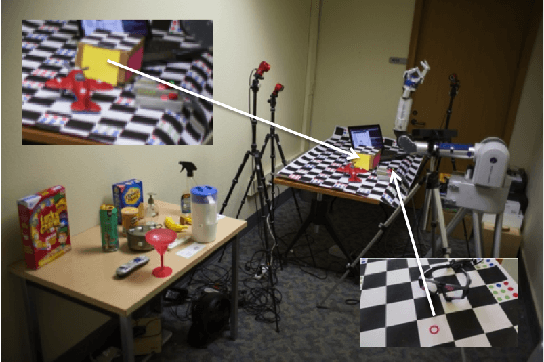

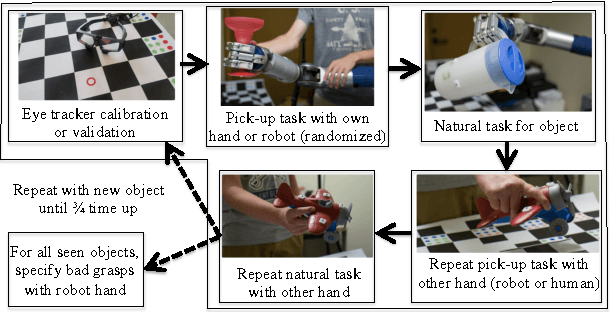

Human-Planned Robotic Grasp Ranges: Capture and Validation

Jul 12, 2016

Abstract: Leveraging human grasping skills to teach a robot to perform a manipulation task is appealing, but there are several limitations to this approach: time-inefficient data capture procedures, limited generalization of the data to other grasps and objects, and inability to use that data to learn more about how humans perform and evaluate grasps. This paper presents a data capture protocol that partially addresses these deficiencies by asking participants to specify ranges over which a grasp is valid. The protocol is verified both qualitatively through online survey questions (where 95.38% of within-range grasps are identified correctly with the nearest extreme grasp) and quantitatively by showing that there is small variation in grasp ranges from different participants as measured by joint angles, contact points, and position. We demonstrate that these grasp ranges are valid through testing on a physical robot (93.75% of grasps interpolated from grasp ranges are successful).
