Raphaël Phan

A new Time-decay Radiomics Integrated Network (TRINet) for short-term breast cancer risk prediction

Dec 04, 2024

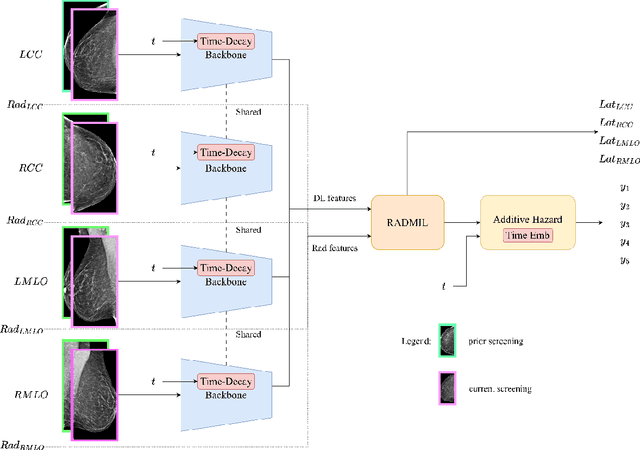

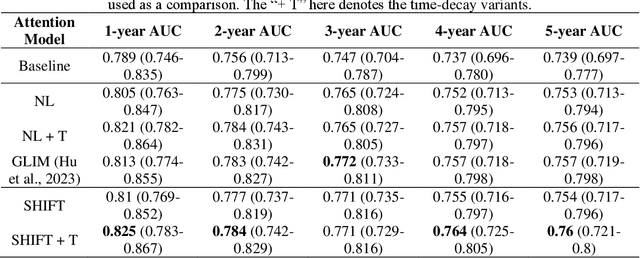

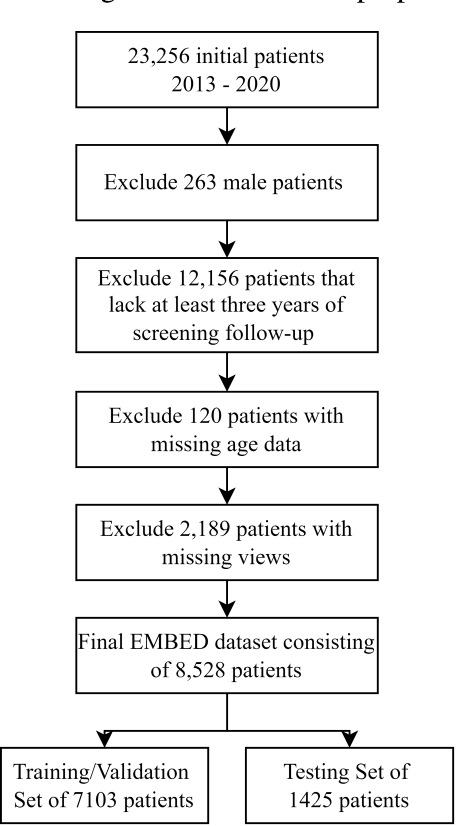

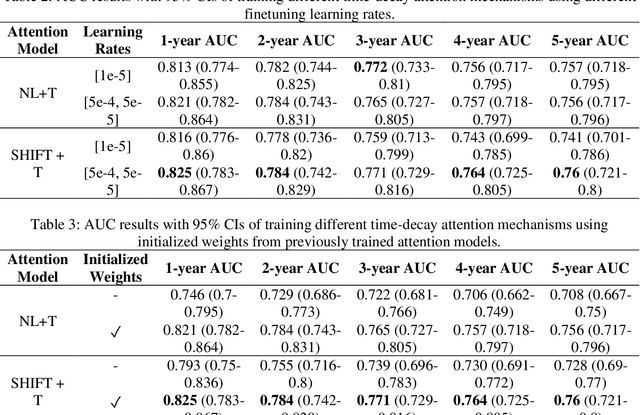

Abstract: To facilitate early detection of breast cancer, there is a need to develop short-term risk prediction schemes that can prescribe personalized screening mammography regimens for women. In this study, we propose a new deep learning architecture called TRINet that implements time-decay attention to focus on recent mammographic screenings, as current models do not account for the relevance of newer images. We integrate radiomic features with an Attention-based Multiple Instance Learning (AMIL) framework to weigh and combine multiple views for better risk estimation. In addition, we introduce a continual learning approach with a new label assignment strategy based on bilateral asymmetry to make the model more adaptable to asymmetrical cancer indicators. Finally, we add a time-embedded additive hazard layer to perform dynamic, multi-year risk forecasting based on individualized screening intervals. We used two public datasets in our experiments: 8,528 patients from the American EMBED dataset and 8,723 patients from the Swedish CSAW dataset. Evaluation results on the EMBED test set show that our approach significantly outperforms state-of-the-art models, achieving AUC scores of 0.851, 0.811, 0.796, 0.793, and 0.789 for the 1- through 5-year intervals, respectively. Our results underscore the importance of integrating temporal attention, radiomic features, time embeddings, bilateral asymmetry, and continual learning strategies, providing a more adaptive and precise tool for short-term breast cancer risk prediction.
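The abstract does not spell out how the time-decay attention is computed. As a rough illustration of the general idea only, the sketch below subtracts a penalty proportional to each exam's age from its relevance score before a softmax, so that more recent screenings receive larger weights. The function name `time_decay_attention`, the `decay_rate` parameter, and the linear penalty are all assumptions made for this example, not the TRINet formulation.

```python
import math


def time_decay_attention(scores, years_ago, decay_rate=0.5):
    """Softmax over relevance scores penalised by the age of each exam.

    scores     -- raw relevance score per prior screening (hypothetical values)
    years_ago  -- time elapsed since each screening, in years
    decay_rate -- hypothetical hyperparameter; larger values favour recent exams
    """
    if len(scores) != len(years_ago):
        raise ValueError("scores and years_ago must have the same length")
    # Assumed form of the decay: a linear penalty on the pre-softmax score.
    adjusted = [s - decay_rate * t for s, t in zip(scores, years_ago)]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(adjusted)
    exps = [math.exp(a - peak) for a in adjusted]
    total = sum(exps)
    return [e / total for e in exps]


# Three screenings with identical raw scores, taken 0, 1, and 2 years ago.
weights = time_decay_attention([1.0, 1.0, 1.0], [0.0, 1.0, 2.0])
```

With equal raw scores, the weights depend only on recency, so the most recent exam dominates and the weights still sum to one.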

CAFCT: Contextual and Attentional Feature Fusions of Convolutional Neural Networks and Transformer for Liver Tumor Segmentation

Jan 30, 2024

Abstract: Medical image semantic segmentation techniques can help identify tumors automatically from computed tomography (CT) scans. In this paper, we propose a Contextual and Attentional feature Fusions enhanced Convolutional Neural Network (CNN) and Transformer hybrid network (CAFCT) model for liver tumor segmentation. In the proposed model, three other modules are introduced in the network architecture: Attentional Feature Fusion (AFF), Atrous Spatial Pyramid Pooling (ASPP) of DeepLabv3, and Attention Gates (AGs) to improve contextual information related to tumor boundaries for accurate segmentation. Experimental results show that the proposed CAFCT achieves a mean Intersection over Union (IoU) of 90.38% and a Dice score of 86.78% on the Liver Tumor Segmentation Benchmark (LiTS) dataset, outperforming pure CNN or Transformer methods, e.g., Attention U-Net and PVTFormer.
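For readers unfamiliar with the two metrics reported above, here is a minimal sketch of how IoU and the Dice score are commonly defined for a single pair of binary masks. It assumes the masks are flattened into lists of 0/1 values; the helper name `iou_and_dice` is made up for this example, and the paper's own averaging protocol (per class, per scan, or otherwise) is not stated in the abstract.

```python
def iou_and_dice(pred, target):
    """Return (IoU, Dice) for two flattened binary masks of equal length."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    pred_area = sum(1 for p in pred if p)
    target_area = sum(1 for t in target if t)
    union = pred_area + target_area - intersection
    if union == 0:
        # Both masks are empty; treating this as a perfect match is a convention.
        return 1.0, 1.0
    iou = intersection / union
    dice = 2 * intersection / (pred_area + target_area)
    return iou, dice


# Toy 4-pixel example: the prediction covers two pixels, the ground truth one.
iou, dice = iou_and_dice([1, 1, 0, 0], [1, 0, 0, 0])
```

In this toy case the intersection is one pixel and the union is two, giving an IoU of 0.5 and a Dice score of 2/3.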

CST-YOLO: A Novel Method for Blood Cell Detection Based on Improved YOLOv7 and CNN-Swin Transformer

Jun 26, 2023

Abstract: Blood cell detection is a typical small-scale object detection problem in computer vision. In this paper, we propose a CST-YOLO model for blood cell detection based on the YOLOv7 architecture and enhance it with the CNN-Swin Transformer (CST), which is a new attempt at CNN-Transformer fusion. We also introduce three other useful modules: Weighted Efficient Layer Aggregation Networks (W-ELAN), Multiscale Channel Split (MCS), and Concatenate Convolutional Layers (CatConv) in our CST-YOLO to improve small-scale object detection precision. Experimental results show that the proposed CST-YOLO achieves 92.7, 95.6, and 91.1 mAP@0.5, respectively, on three blood cell datasets, outperforming state-of-the-art object detectors, e.g., YOLOv5 and YOLOv7. Our code is available at https://github.com/mkang315/CST-YOLO.
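The "@0.5" in mAP@0.5 refers to the box-overlap threshold at which a detection counts as a match to a ground-truth object. The sketch below shows only that matching criterion, assuming axis-aligned boxes given as `(x1, y1, x2, y2)` tuples; the helper names are illustrative and are not taken from the CST-YOLO repository. Computing the full mAP additionally requires ranking detections by confidence and averaging precision over recall levels and classes, which is omitted here.

```python
def box_iou(a, b):
    """Intersection over Union of two boxes in (x1, y1, x2, y2) format."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(pred_box, gt_box, threshold=0.5):
    """A prediction matches a ground-truth box if their IoU reaches the threshold."""
    return box_iou(pred_box, gt_box) >= threshold


gt = (0.0, 0.0, 10.0, 10.0)
close_hit = is_match((2.0, 0.0, 12.0, 10.0), gt)  # overlap 80 / union 120
loose_hit = is_match((5.0, 0.0, 15.0, 10.0), gt)  # overlap 50 / union 150
```

The first prediction overlaps the ground truth with an IoU of about 0.67 and counts as a match, while the second reaches only about 0.33 and does not.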

RADIFUSION: A multi-radiomics deep learning based breast cancer risk prediction model using sequential mammographic images with image attention and bilateral asymmetry refinement

Apr 01, 2023

Abstract: Breast cancer is a significant public health concern, and early detection is critical for triaging high-risk patients. Sequential screening mammograms can provide important spatiotemporal information about changes in breast tissue over time. In this study, we propose a deep learning architecture called RADIFUSION that utilizes sequential mammograms and incorporates a linear image attention mechanism, radiomic features, a new gating mechanism to combine different mammographic views, and bilateral asymmetry-based fine-tuning for breast cancer risk assessment. We evaluate our model on the Cohort of Screen-Aged Women (CSAW) screening dataset. Based on results obtained on the independent testing set consisting of 1,749 women, our approach achieved superior performance compared to other state-of-the-art models, with areas under the receiver operating characteristic curve (AUCs) of 0.905, 0.872, and 0.866 for the 1-year, 2-year, and > 2-year AUC metrics, respectively. Our study highlights the importance of incorporating various deep learning mechanisms, such as image attention, radiomic features, a gating mechanism, and bilateral asymmetry-based fine-tuning, to improve the accuracy of breast cancer risk assessment. We also demonstrate that our model's performance was enhanced by leveraging spatiotemporal information from sequential mammograms. Our findings suggest that RADIFUSION can provide clinicians with a powerful tool for breast cancer risk assessment.
