Ramin Pichevar

Perceptive, non-linear Speech Processing and Spiking Neural Networks

Mar 31, 2022

Abstract: Source separation and speech recognition are very difficult in the context of noisy and corrupted speech. Most conventional techniques need huge databases to estimate speech (or noise) density probabilities to perform separation or recognition. We discuss the potential of perceptive speech analysis and processing in combination with biologically plausible neural network processors. We illustrate the potential of such non-linear processing of speech on a source separation system inspired by an Auditory Scene Analysis paradigm. We also discuss a potential application in speech recognition.

Design and Optimization of a Speech Recognition Front-End for Distant-Talking Control of a Music Playback Device

May 05, 2014

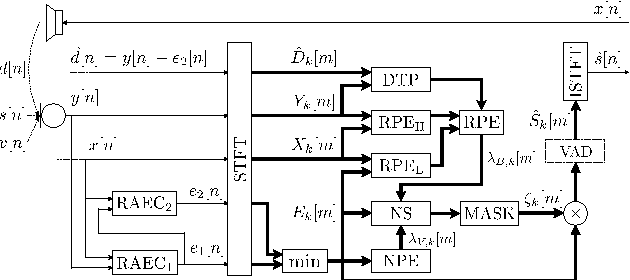

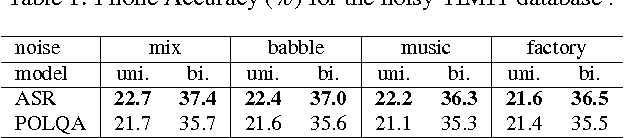

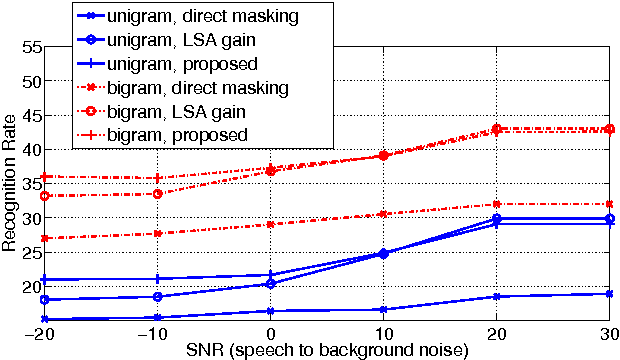

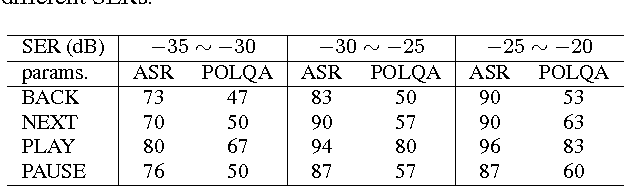

Abstract: This paper addresses the challenging scenario for the distant-talking control of a music playback device, a common portable speaker with four small loudspeakers in close proximity to one microphone. The user controls the device through voice, where the speech-to-music ratio can be as low as -30 dB during music playback. We propose a speech enhancement front-end that relies on known robust methods for echo cancellation, double-talk detection, and noise suppression, as well as a novel adaptive quasi-binary mask that is well suited for speech recognition. The optimization of the system is then formulated as a large-scale nonlinear programming problem where the recognition rate is maximized and the optimal values for the system parameters are found through a genetic algorithm. We validate our methodology by testing over the TIMIT database for different music playback levels and noise types. Finally, we show that the proposed front-end allows a natural interaction with the device for limited-vocabulary voice commands.
