Ramesh Sarukkai

Accurate Deep Direct Geo-Localization from Ground Imagery and Phone-Grade GPS

Apr 20, 2018

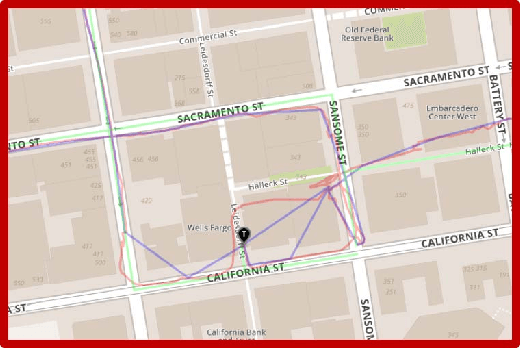

Abstract: One of the most critical problems in autonomous driving and ride-sharing technology is accurately localizing vehicles in the world frame. In addition to common multi-view camera systems, localization usually relies on industrial-grade sensors such as LiDAR, differential GPS, and high-precision IMUs. In this paper, we propose a method to train a geo-spatial deep neural network (CNN+LSTM) that predicts accurate geo-locations (latitude and longitude) using only ordinary ground imagery and low-accuracy phone-grade GPS. We evaluate our approach on the open dataset released for the ACM Multimedia 2017 Grand Challenge. With ground-truth locations available for training, we reach nearly lane-level accuracy. We also evaluate the proposed method on images we collected in downtown San Francisco, an area often described as a "downtown canyon" where consumer GPS signals are extremely inaccurate. The results show that, using only phone-grade GPS, the model can predict locations of sufficient quality for real business applications such as ride-sharing. Unlike classic visual localization or recent PoseNet-like methods, which may work well in indoor or small-scale outdoor environments, we avoid using a map or an SFM (structure-from-motion) model altogether. More importantly, the proposed method can be scaled up without concerns over the potential failure of 3D reconstruction.
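The abstract reports accuracy in terms of predicted versus ground-truth latitude and longitude, but does not state how the error is measured. As a minimal sketch, assuming the error is taken as great-circle distance in meters on a spherical Earth, the comparison could look like the following. The function names `haversine_m` and `mean_error_m` are hypothetical and are not taken from the paper.

```python
import math

# Mean Earth radius in meters (spherical approximation; an assumption of this sketch).
EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    phi1 = math.radians(lat1)
    phi2 = math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def mean_error_m(predicted, truth):
    """Mean localization error in meters over paired (lat, lon) tuples."""
    errors = [haversine_m(p[0], p[1], t[0], t[1]) for p, t in zip(predicted, truth)]
    return sum(errors) / len(errors)
```

Under this assumption, "lane-level" accuracy would correspond to a mean error on the order of a lane width, whereas raw phone-grade GPS in an urban canyon can be off by far more than that.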
