Rajdeep Hazra Banerjee

Attr2Style: A Transfer Learning Approach for Inferring Fashion Styles via Apparel Attributes

Aug 26, 2020

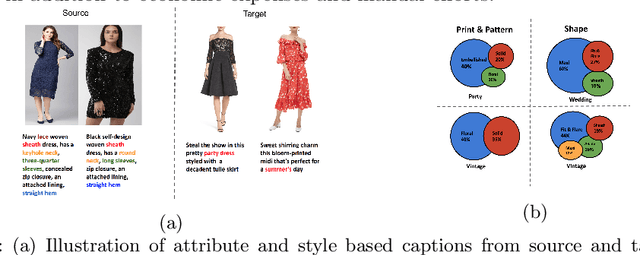

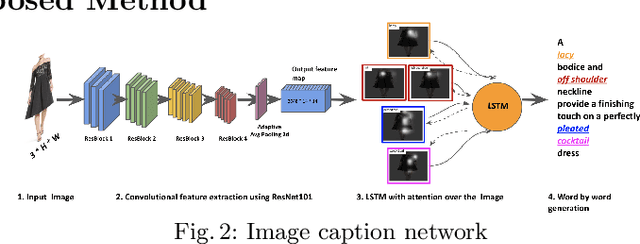

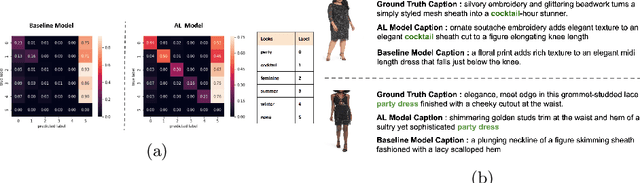

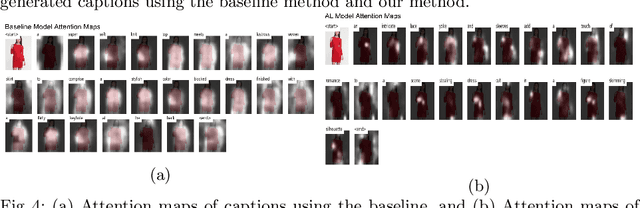

Abstract: Popular fashion e-commerce platforms mostly describe apparel on their product detail pages through low-level attributes (for example, neck type, dress length, collar type, print). However, customers usually prefer to buy apparel based on its style or, simply put, the occasion it suits (for example, party wear, sports wear, casual wear). Applying a supervised image-captioning model to generate style-based image captions is limited by the difficulty of obtaining ground-truth annotations in the form of style-based captions: annotating them requires a certain amount of fashion domain expertise and adds cost and manual effort. In contrast, low-level attribute-based annotations are much more readily available. To address this issue, we propose a transfer-learning-based image captioning model that is trained on a source dataset with sufficient attribute-based ground-truth captions and then used to predict style-based captions on a target dataset. The target dataset has only a limited number of images with style-based ground-truth captions. The main motivation for our approach is that the low-level attributes of an apparel item are most often correlated with its higher-level styles. We leverage this fact and train our model in an encoder-decoder framework with an attention mechanism. In particular, the encoder of the model is first trained on the source dataset to obtain latent representations that capture the low-level attributes. The trained model is then fine-tuned to generate style-based captions for the target dataset. To highlight the effectiveness of our method, we qualitatively demonstrate that the captions generated by our approach are close to the actual style information for the evaluated apparel.
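The abstract does not say which form of attention the model uses, so the following is only a minimal sketch of one common choice, dot-product soft attention over image-region features. The names `softmax`, `attend`, `regions`, and `decoder_state` are hypothetical and chosen for illustration; they are not taken from the paper.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(features, query):
    """Dot-product soft attention (an assumed form, not the paper's).

    features: list of region feature vectors produced by an encoder
    query:    current decoder state, same dimensionality as each feature
    Returns the attention weights and the weighted-sum context vector.
    """
    scores = [sum(f_i * q_i for f_i, q_i in zip(f, query)) for f in features]
    weights = softmax(scores)
    dim = len(features[0])
    context = [
        sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)
    ]
    return weights, context


# Toy example: three image regions with 2-dimensional features.
regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]
weights, context = attend(regions, decoder_state)
```

In this toy example the first and third regions align equally well with the decoder state, so they receive equal weight, and the second region receives less. In a captioning model the context vector would feed the decoder at each generation step.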

Teaching DNNs to design fast fashion

Jul 03, 2019

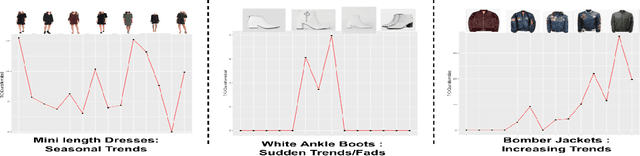

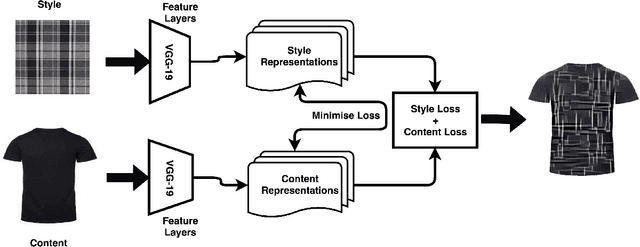

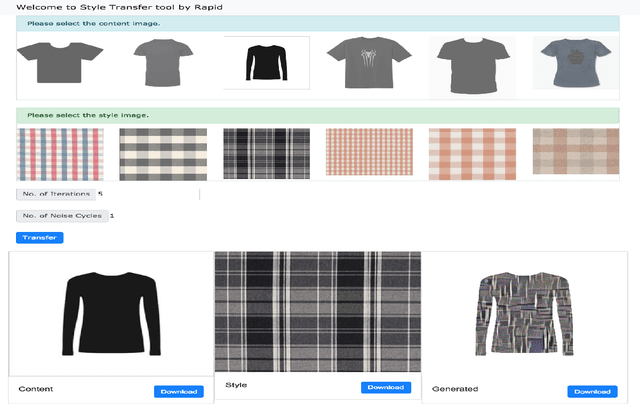

Abstract: "Fast Fashion" spearheads the biggest disruption in fashion, one that has made it possible to engineer resilient supply chains that respond quickly to changing fashion trends. The conventional design process in commercial manufacturing is often fed by "trends", or prevailing modes of dressing around the world, that indicate sudden interest in a new form of expression, cyclic patterns, and popular modes of expression for a given time frame. In this work, we propose a fully automated system to explore, detect, and finally synthesize trends in fashion into design elements by designing representative prototypes of apparel given time series signals generated from social media feeds. Our system is envisioned to be the first step in the design of Fast Fashion, where the production cycle for clothes, from design inception to manufacturing, is meant to be rapid and responsive to current "trends". It also works to reduce wastage in fashion production by taking in customer feedback on sellability at the time of design generation. We also provide an interface in which designers can play with multiple trending styles in fashion and visualize designs as interpolations of elements of these styles. We aim to aid the creative process by generating interesting and inspiring combinations for a designer to mull over and run past her key customers.
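The abstract mentions visualizing designs as interpolations of style elements but does not describe how they are computed. As an illustrative sketch only, assuming each style is represented by a fixed-length numeric vector, a linear blend between two such vectors could look like this. The function `interpolate` and the example vectors are hypothetical, not the authors' method.

```python
def interpolate(style_a, style_b, t):
    """Linearly blend two style vectors (assumed representation).

    t = 0.0 returns style_a, t = 1.0 returns style_b, and values in
    between return a proportional mix of the two.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    if len(style_a) != len(style_b):
        raise ValueError("style vectors must have the same length")
    return [(1.0 - t) * a + t * b for a, b in zip(style_a, style_b)]


# Toy example: an even blend of two 2-dimensional style vectors.
blend = interpolate([0.0, 2.0], [4.0, 6.0], 0.5)
```

Sweeping `t` from 0 to 1 would give a sequence of intermediate vectors, each of which a generative model could in principle render as a candidate design.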
