Rainer Goebel

Contextual Encoder-Decoder Network for Visual Saliency Prediction

Feb 18, 2019

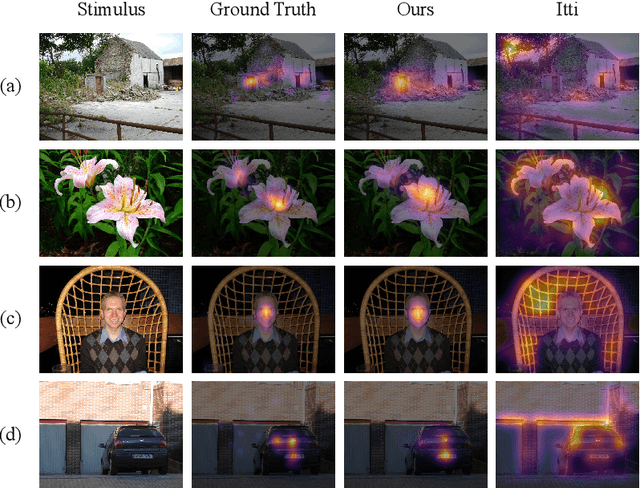

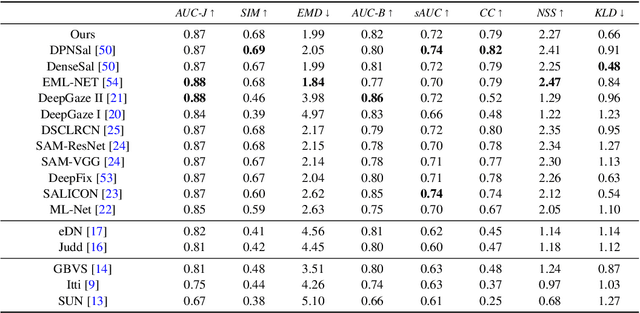

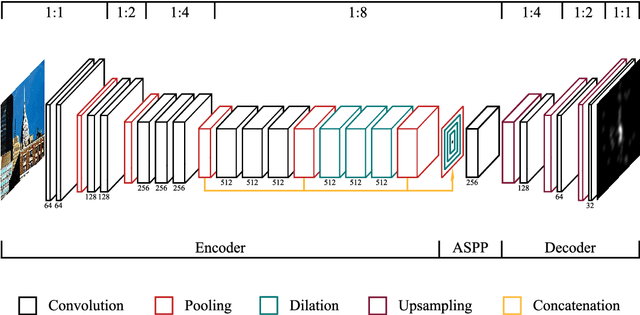

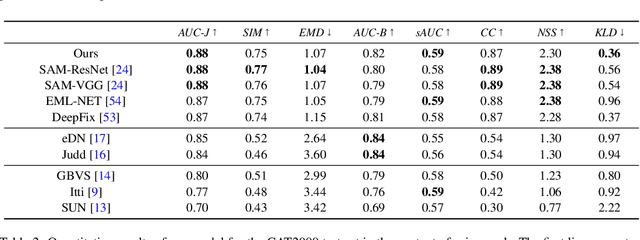

Abstract: Predicting salient regions in natural images requires the detection of objects that are present in a scene. To develop robust representations for this challenging task, high-level visual features at multiple spatial scales must be extracted and augmented with contextual information. However, existing models aimed at explaining human fixation maps do not incorporate such a mechanism explicitly. Here we propose an approach based on a convolutional neural network pre-trained on a large-scale image classification task. The architecture forms an encoder-decoder structure and includes a module with multiple convolutional layers at different dilation rates to capture multi-scale features in parallel. Moreover, we combine the resulting representations with global scene information for accurately predicting visual saliency. Our model achieves competitive results on two public saliency benchmarks, and we demonstrate the effectiveness of the suggested approach on selected examples. The network is based on a lightweight image classification backbone and hence presents a suitable choice for applications with limited computational resources to estimate human fixations across complex natural scenes.
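The multi-scale module described in the abstract can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names (`dilated_conv2d`, `global_context`, `multi_scale_features`), the single shared kernel, the dilation rates, and the use of a global mean as "global scene information" are all assumptions chosen for illustration. Each branch applies the same small kernel with a different dilation rate, so the receptive field grows without adding weights, and a global-average branch supplies scene-level context.

```python
def dilated_conv2d(image, kernel, rate):
    """Zero-padded, same-size 2D cross-correlation with a dilation rate.

    `image` is a list of rows; `kernel` is a square, odd-sized list of rows.
    """
    h, w = len(image), len(image[0])
    k = len(kernel)
    half = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    # Kernel taps are spaced `rate` pixels apart.
                    yy = y + (i - half) * rate
                    xx = x + (j - half) * rate
                    if 0 <= yy < h and 0 <= xx < w:  # outside = zero padding
                        acc += kernel[i][j] * image[yy][xx]
            out[y][x] = acc
    return out


def global_context(image):
    """Broadcast the global mean to every position (illustrative context branch)."""
    h, w = len(image), len(image[0])
    mean = sum(map(sum, image)) / (h * w)
    return [[mean] * w for _ in range(h)]


def multi_scale_features(image, kernel, rates=(1, 2, 4)):
    """Run parallel dilated branches and append a global-context map.

    Returns a list of feature maps (one per rate, plus the context map).
    The rates are placeholders, not values taken from the paper.
    """
    branches = [dilated_conv2d(image, kernel, r) for r in rates]
    branches.append(global_context(image))
    return branches
```

In a trained network, each branch would have its own learned weights and the stacked maps would typically be fused by a further convolution; here the maps are simply returned side by side to show how the receptive fields differ.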

Deep driven fMRI decoding of visual categories

Jan 09, 2017

Abstract: Deep neural networks have been developed drawing inspiration from the brain's visual pathway, implementing an end-to-end approach: from image data to video object classes. However, building an fMRI decoder with the typical structure of a Convolutional Neural Network (CNN), i.e. learning multiple levels of representation, seems impractical due to the lack of brain data. As a possible solution, this work presents the first hybrid fMRI and deep features decoding approach: collected fMRI data and deep learnt representations of video object classes are linked together by means of Kernel Canonical Correlation Analysis. In decoding, this allows exploiting the discriminatory power of the CNN by relating the fMRI representation to the last layer of the CNN (fc7). We show the effectiveness of embedding fMRI data onto a subspace related to deep features in distinguishing semantic visual categories based solely on brain imaging data.
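The linking step can be sketched with a small regularized Kernel CCA in NumPy. This is a generic textbook-style formulation, not the authors' pipeline: the RBF kernel, the `gamma` and `reg` values, the function names, and the synthetic two-view data standing in for fMRI patterns and fc7 features are all assumptions made for illustration. The sketch finds one pair of dual weight vectors whose projections of the two views are maximally correlated.

```python
import numpy as np


def rbf_kernel(X, gamma):
    """Gaussian (RBF) kernel matrix between the rows of X."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    return np.exp(-gamma * np.maximum(d2, 0.0))


def center_kernel(K):
    """Center a kernel matrix in feature space."""
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    return H @ K @ H


def kcca_first_pair(Kx, Ky, reg):
    """First pair of dual weights for regularized kernel CCA.

    Solves (Kx + reg I)^-1 Ky (Ky + reg I)^-1 Kx alpha = rho^2 alpha
    and recovers beta from alpha (up to scale).
    """
    n = Kx.shape[0]
    I = np.eye(n)
    M = np.linalg.solve(Kx + reg * I, Ky) @ np.linalg.solve(Ky + reg * I, Kx)
    vals, vecs = np.linalg.eig(M)
    top = np.argmax(vals.real)
    alpha = vecs[:, top].real
    beta = np.linalg.solve(Ky + reg * I, Kx @ alpha)
    return alpha, beta


# Synthetic stand-ins: two views that share one latent variable z.
rng = np.random.default_rng(0)
n = 80
z = rng.normal(size=n)
view_a = np.column_stack([z + 0.1 * rng.normal(size=n), rng.normal(size=n)])
view_b = np.column_stack([np.tanh(z) + 0.1 * rng.normal(size=n), rng.normal(size=n)])

Ka = center_kernel(rbf_kernel(view_a, gamma=0.5))
Kb = center_kernel(rbf_kernel(view_b, gamma=0.5))
alpha, beta = kcca_first_pair(Ka, Kb, reg=1.0)

# Canonical variates: projections of each view onto the shared subspace.
u, v = Ka @ alpha, Kb @ beta
train_corr = float(np.corrcoef(u, v)[0, 1])
```

With real data, `view_a` and `view_b` would be replaced by per-stimulus fMRI patterns and the corresponding CNN features, and the correlation would need to be checked on held-out stimuli, since training correlations in kernel CCA are optimistic.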
