Rahul Santhanam

Learning algorithms versus automatability of Frege systems

Nov 20, 2021Abstract:We connect learning algorithms and algorithms automating proof search in propositional proof systems: for every sufficiently strong, well-behaved propositional proof system $P$, we prove that the following statements are equivalent, 1. Provable learning: $P$ proves efficiently that p-size circuits are learnable by subexponential-size circuits over the uniform distribution with membership queries. 2. Provable automatability: $P$ proves efficiently that $P$ is automatable by non-uniform circuits on propositional formulas expressing p-size circuit lower bounds. Here, $P$ is sufficiently strong and well-behaved if I.-III. holds: I. $P$ p-simulates Je\v{r}\'abek's system $WF$ (which strengthens the Extended Frege system $EF$ by a surjective weak pigeonhole principle); II. $P$ satisfies some basic properties of standard proof systems which p-simulate $WF$; III. $P$ proves efficiently for some Boolean function $h$ that $h$ is hard on average for circuits of subexponential size. For example, if III. holds for $P=WF$, then Items 1 and 2 are equivalent for $P=WF$. If there is a function $h\in NE\cap coNE$ which is hard on average for circuits of size $2^{n/4}$, for each sufficiently big $n$, then there is an explicit propositional proof system $P$ satisfying properties I.-III., i.e. the equivalence of Items 1 and 2 holds for $P$.

Conspiracies between Learning Algorithms, Circuit Lower Bounds and Pseudorandomness

Nov 03, 2016

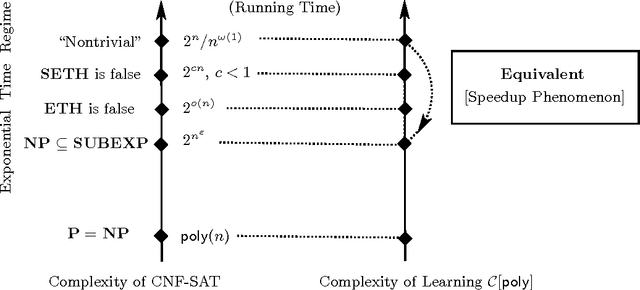

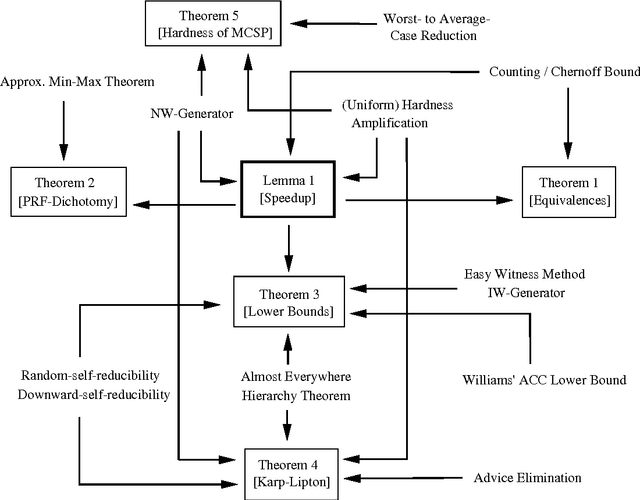

Abstract:We prove several results giving new and stronger connections between learning, circuit lower bounds and pseudorandomness. Among other results, we show a generic learning speedup lemma, equivalences between various learning models in the exponential time and subexponential time regimes, a dichotomy between learning and pseudorandomness, consequences of non-trivial learning for circuit lower bounds, Karp-Lipton theorems for probabilistic exponential time, and NC$^1$-hardness for the Minimum Circuit Size Problem.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge