Rafayel Mkrtchyan

Vision Transformers for Efficient Indoor Pathloss Radio Map Prediction

Dec 12, 2024

Abstract: Vision Transformers (ViTs) have demonstrated remarkable success in achieving state-of-the-art performance across various image-based tasks and beyond. In this study, we employ a ViT-based neural network to address the problem of indoor pathloss radio map prediction. The network's generalization ability is evaluated across diverse settings, including unseen buildings, frequencies, and antennas with varying radiation patterns. By leveraging extensive data augmentation techniques and pretrained DINOv2 weights, we achieve promising results, even under the most challenging scenarios.

Outdoor Environment Reconstruction with Deep Learning on Radio Propagation Paths

Feb 27, 2024

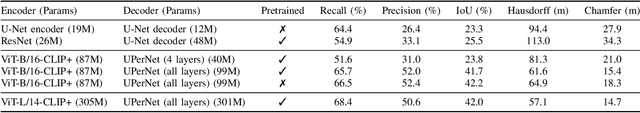

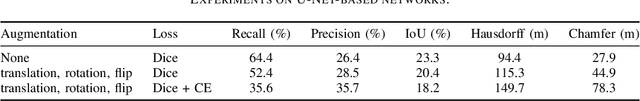

Abstract: Conventional methods for outdoor environment reconstruction rely predominantly on vision-based techniques like photogrammetry and LiDAR, facing limitations such as constrained coverage, susceptibility to environmental conditions, and high computational and energy demands. These challenges are particularly pronounced in applications like augmented reality navigation, especially when integrated with wearable devices featuring constrained computational resources and energy budgets. In response, this paper proposes a novel approach harnessing ambient wireless signals for outdoor environment reconstruction. By analyzing radio frequency (RF) data, the paper aims to deduce the environmental characteristics and digitally reconstruct the outdoor surroundings. Investigating the efficacy of selected deep learning (DL) techniques on the synthetic RF dataset WAIR-D, the study endeavors to address the research gap in this domain. Two DL-driven approaches are evaluated (convolutional U-Net and CLIP+ based on vision transformers), with performance assessed using metrics like intersection-over-union (IoU), Hausdorff distance, and Chamfer distance. The results demonstrate promising performance of the RF-based reconstruction method, paving the way towards lightweight and scalable reconstruction solutions.
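The three metrics named in this abstract can be illustrated with a small sketch. This is not the paper's evaluation code: the function names, the binary-grid representation for IoU, and the 2D point-set representation for the distance metrics are assumptions made for illustration. Chamfer distance in particular has several common variants (squared distances, averaged rather than summed directions); the version below sums the two mean nearest-neighbour distances.

```python
import math


def iou(mask_a, mask_b):
    """Intersection-over-union of two equally sized binary grids (lists of 0/1 rows)."""
    inter = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            inter += 1 if (a and b) else 0
            union += 1 if (a or b) else 0
    # Two empty masks are treated as a perfect match (an assumed convention).
    return inter / union if union else 1.0


def _nearest(p, points):
    """Euclidean distance from point p to its nearest neighbour in points."""
    return min(math.dist(p, q) for q in points)


def chamfer(points_a, points_b):
    """Symmetric Chamfer distance: sum of the two mean nearest-neighbour distances."""
    a_to_b = sum(_nearest(p, points_b) for p in points_a) / len(points_a)
    b_to_a = sum(_nearest(q, points_a) for q in points_b) / len(points_b)
    return a_to_b + b_to_a


def hausdorff(points_a, points_b):
    """Symmetric Hausdorff distance: the worst-case nearest-neighbour distance."""
    a_to_b = max(_nearest(p, points_b) for p in points_a)
    b_to_a = max(_nearest(q, points_a) for q in points_b)
    return max(a_to_b, b_to_a)


# Hypothetical toy inputs: a predicted and a reference building footprint.
pred_mask = [[1, 1], [0, 0]]
true_mask = [[1, 0], [1, 0]]
pred_pts = [(0.0, 0.0), (1.0, 0.0)]
true_pts = [(0.0, 0.0), (3.0, 0.0)]

print(iou(pred_mask, true_mask))
print(chamfer(pred_pts, true_pts))
print(hausdorff(pred_pts, true_pts))
```

IoU rewards overlapping area, Chamfer distance averages how far each shape's points are from the other shape, and Hausdorff distance reports only the single worst mismatch, so the three can rank the same reconstruction differently.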

ML-based Approaches for Wireless NLOS Localization: Input Representations and Uncertainty Estimation

Apr 22, 2023

Abstract: The challenging problem of non-line-of-sight (NLOS) localization is critical for many wireless networking applications. The lack of available datasets has made NLOS localization difficult to tackle with ML-driven methods, but recent developments in synthetic dataset generation have provided new opportunities for research. This paper explores three different input representations: (i) single wireless radio path features, (ii) wireless radio link features (multi-path), and (iii) image-based representations. Inspired by the two latter new representations, we design two convolutional neural networks (CNNs) and we demonstrate that, although not significantly improving the NLOS localization performance, they are able to support richer prediction outputs, thus allowing deeper analysis of the predictions. In particular, the richer outputs enable reliable identification of non-trustworthy predictions and support the prediction of the top-K candidate locations for a given instance. We also measure how the availability of various features (such as angles of signal departure and arrival) affects the model's performance, providing insights about the types of data that should be collected for enhanced NLOS localization. Our insights motivate future work on building more efficient neural architectures and input representations for improved NLOS localization performance, along with additional useful application features.
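One way a model's output could support top-K candidate locations and a trust check is sketched below. The abstract does not describe the output format, so everything here is an assumption for illustration: the model is taken to emit a 2D grid of raw scores over map cells, `top_k_cells` is a hypothetical helper, and the probability of the best cell is used as a simple confidence proxy.

```python
import math


def top_k_cells(scores, k):
    """Return the k most probable (probability, (row, col)) pairs from a score grid.

    Assumes `scores` is a list of rows of raw (unnormalized) model scores;
    a softmax over all cells turns them into a probability distribution.
    """
    flat = [(s, (r, c)) for r, row in enumerate(scores) for c, s in enumerate(row)]
    peak = max(s for s, _ in flat)  # subtract the maximum for numerical stability
    exps = [(math.exp(s - peak), cell) for s, cell in flat]
    total = sum(e for e, _ in exps)
    probs = [(e / total, cell) for e, cell in exps]
    probs.sort(key=lambda pc: pc[0], reverse=True)
    return probs[:k]


# Hypothetical 2x2 score map for a single localization query.
score_map = [[0.0, 2.0], [1.0, -1.0]]
candidates = top_k_cells(score_map, k=2)

# Flag the prediction as non-trustworthy when the best cell holds little
# probability mass; the 0.5 threshold is an arbitrary illustrative choice.
trustworthy = candidates[0][0] >= 0.5

for prob, cell in candidates:
    print(cell, round(prob, 3))
print("trustworthy:", trustworthy)
```

A flat distribution over cells yields a low top probability, which is the kind of signal that could mark a prediction for rejection or manual review.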
