Outdoor Environment Reconstruction with Deep Learning on Radio Propagation Paths


Feb 27, 2024

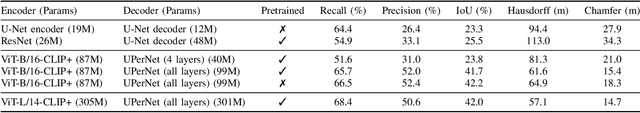

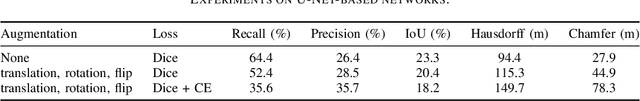

Conventional methods for outdoor environment reconstruction rely predominantly on vision-based techniques such as photogrammetry and LiDAR, which suffer from constrained coverage, susceptibility to environmental conditions, and high computational and energy demands. These limitations are particularly pronounced in applications such as augmented reality navigation, especially on wearable devices with constrained computational resources and energy budgets. In response, this paper proposes a novel approach that uses ambient wireless signals for outdoor environment reconstruction. By analyzing radio frequency (RF) data, it aims to infer environmental characteristics and digitally reconstruct the outdoor surroundings. To address the research gap in this domain, the study investigates the efficacy of selected deep learning (DL) techniques on the synthetic RF dataset WAIR-D. Two DL-driven approaches are evaluated, a convolutional U-Net and CLIP+ based on vision transformers, with performance assessed using metrics such as intersection-over-union (IoU), Hausdorff distance, and Chamfer distance. The results demonstrate promising performance of the RF-based reconstruction method, paving the way towards lightweight and scalable reconstruction solutions.
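The three evaluation metrics named in the abstract can be illustrated with a minimal NumPy sketch. It assumes the reconstructed and reference environments are compared as 2D binary occupancy masks, which the abstract does not state, and the function names (`iou`, `hausdorff`, `chamfer`, `mask_to_points`) are hypothetical helpers, not code from the paper. Chamfer distance in particular has several common variants (squared or unsquared, summed or averaged); the one below sums the two mean nearest-neighbor distances and may differ from the paper's definition.

```python
import numpy as np


def iou(pred, target):
    """Intersection-over-union of two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        # Both masks empty: treat as a perfect match (a convention, assumed here).
        return 1.0
    return float(np.logical_and(pred, target).sum() / union)


def mask_to_points(mask):
    """Return the (row, col) coordinates of occupied pixels as floats."""
    return np.argwhere(np.asarray(mask, dtype=bool)).astype(float)


def _pairwise_distances(a, b):
    """Euclidean distance between every point in a and every point in b."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two non-empty point sets."""
    d = _pairwise_distances(a, b)
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))


def chamfer(a, b):
    """Chamfer distance: sum of the two mean nearest-neighbor distances."""
    d = _pairwise_distances(a, b)
    return float(d.min(axis=1).mean() + d.min(axis=0).mean())


# Toy example: two 2x2 blocks offset by one column on a 4x4 grid.
pred = np.zeros((4, 4), dtype=bool)
pred[0:2, 0:2] = True
target = np.zeros((4, 4), dtype=bool)
target[0:2, 1:3] = True

print("IoU:", iou(pred, target))
print("Hausdorff:", hausdorff(mask_to_points(pred), mask_to_points(target)))
print("Chamfer:", chamfer(mask_to_points(pred), mask_to_points(target)))
```

IoU rewards overlapping area, while the Hausdorff and Chamfer distances measure how far the predicted shape lies from the reference: Hausdorff reports the worst-case deviation and Chamfer an average one, so the three are complementary. The dense pairwise-distance matrix is fine for small masks; larger inputs would call for a spatial index.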
