Radhakrishnan Balu

On the Computational Cost of Stochastic Security

May 13, 2023

Abstract: We investigate whether long-run persistent chain Monte Carlo simulation of Langevin dynamics improves the quality of the representations achieved by energy-based models (EBM). We consider a scheme wherein Monte Carlo simulation of a diffusion process using a trained EBM is used to improve the adversarial robustness and the calibration score of an independent classifier network. Our results show that increasing the computational budget of Gibbs sampling in persistent contrastive divergence improves the calibration and adversarial robustness of the model, elucidating the practical merit of realizing new quantum and classical hardware and software for efficient Gibbs sampling from continuous energy potentials.
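As an illustration of the sampling step the abstract refers to, the following is a minimal sketch of unadjusted Langevin dynamics run over a batch of persistent chains. It is not the authors' implementation: the quadratic energy `E(x) = 0.5 * ||x||^2` is a hypothetical stand-in for a trained EBM, and the names `grad_energy`, `langevin_chain`, the step size, and the chain count are assumptions chosen for the example.

```python
import numpy as np

def grad_energy(x):
    # Gradient of the toy energy E(x) = 0.5 * ||x||^2.
    # Hypothetical stand-in for the gradient of a trained EBM.
    return x

def langevin_chain(x0, n_steps, step_size, rng):
    # Unadjusted Langevin update:
    #   x <- x - step_size * grad E(x) + sqrt(2 * step_size) * noise
    x = np.array(x0, dtype=float)
    for _ in range(n_steps):
        noise = rng.standard_normal(x.shape)
        x = x - step_size * grad_energy(x) + np.sqrt(2.0 * step_size) * noise
    return x

rng = np.random.default_rng(0)
chains = np.full((1000, 2), 3.0)  # 1000 chains, all started far from the mode
samples = langevin_chain(chains, n_steps=2000, step_size=0.01, rng=rng)
print(samples.mean(), samples.var())
```

For this toy energy the chains should settle near a standard normal distribution. A larger `n_steps` corresponds to the larger sampling budget discussed in the abstract, at proportionally higher cost.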

Bayesian Networks based Hybrid Quantum-Classical Machine Learning Approach to Elucidate Gene Regulatory Pathways

Jan 23, 2019

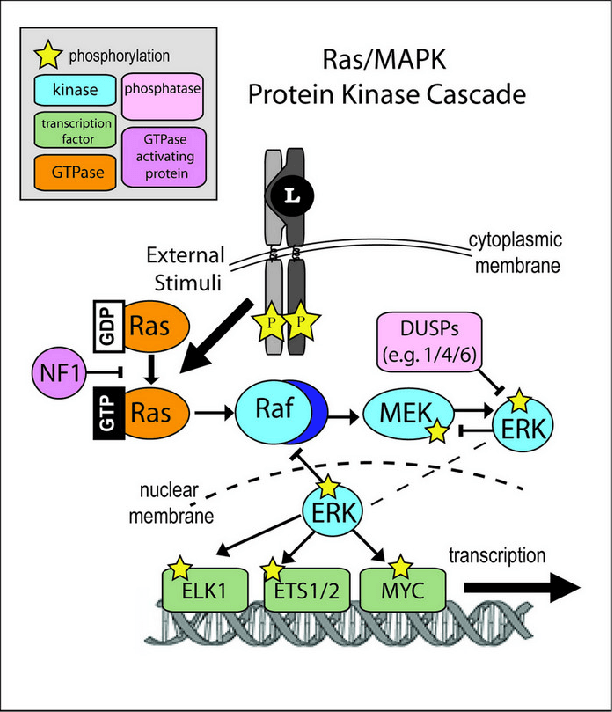

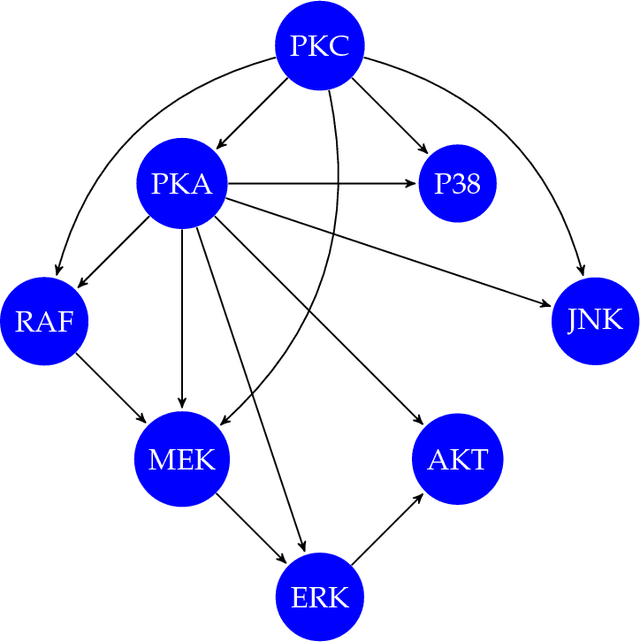

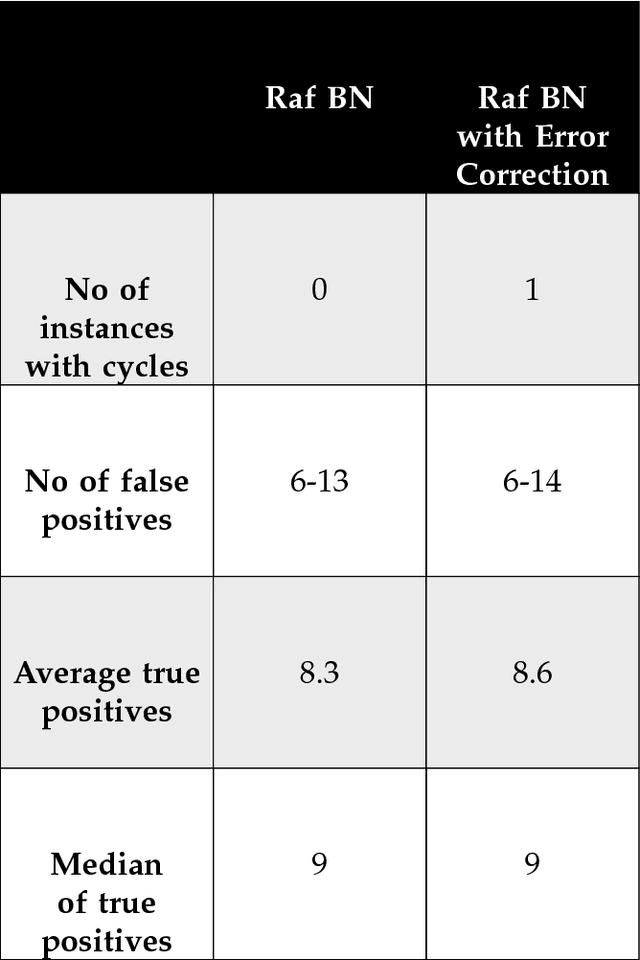

Abstract: We report a scalable hybrid quantum-classical machine learning framework to build Bayesian networks (BN) that capture the conditional dependence and causal relationships of random variables. The generation of a BN consists of finding a directed acyclic graph (DAG) and the associated joint probability distribution of the nodes consistent with a given dataset. This is a combinatorial problem of structural learning of the underlying graph, starting from a single node and building one arc at a time, that fits a given ensemble using maximum likelihood estimators (MLE). It is cast as an optimization problem that consists of a scoring step performed on a classical computer, penalties enforcing acyclicity and a limit on the number of parents per node, and a search step implemented using a quantum annealer. We assume uniform priors in deriving the Bayesian network, an assumption that can be relaxed by formulating the problem as the estimation of Dirichlet parameters. We demonstrate the utility of the framework by applying it to the problem of elucidating the gene regulatory network for the MAPK/Raf pathway in human T-cells using proteomics data, where the concentrations of proteins, the nodes of the BN, are interpreted as probabilities.
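The classical scoring step and the acyclicity constraint mentioned in the abstract can be sketched as follows. This is an illustrative sketch only, assuming binary variables and a plain maximum-likelihood score. The function names `is_acyclic` and `mle_log_likelihood` are hypothetical, and neither the penalty formulation nor the quantum-annealer search from the paper is reproduced here.

```python
import numpy as np

def is_acyclic(adj):
    # Kahn's algorithm; adj[i, j] == 1 means an arc i -> j.
    adj = np.array(adj, dtype=int)
    in_deg = adj.sum(axis=0)
    ready = [i for i in range(len(adj)) if in_deg[i] == 0]
    visited = 0
    while ready:
        i = ready.pop()
        visited += 1
        for j in np.flatnonzero(adj[i]):
            in_deg[j] -= 1
            if in_deg[j] == 0:
                ready.append(j)
    return visited == len(adj)

def mle_log_likelihood(data, adj):
    # Log-likelihood of binary data under the graph, with each conditional
    # probability table set to its maximum-likelihood (frequency) estimate.
    data = np.asarray(data, dtype=int)
    adj = np.asarray(adj, dtype=int)
    total = 0.0
    for j in range(data.shape[1]):
        parents = np.flatnonzero(adj[:, j])
        keys = [tuple(row) for row in data[:, parents]]
        for key in set(keys):
            mask = np.array([k == key for k in keys])
            counts = np.bincount(data[mask, j], minlength=2)
            probs = counts / counts.sum()
            nz = counts > 0
            total += float((counts[nz] * np.log(probs[nz])).sum())
    return total

data = [[0, 0], [1, 1], [0, 0], [1, 1]]  # node 1 copies node 0
empty = [[0, 0], [0, 0]]
arc = [[0, 1], [0, 0]]                   # single arc 0 -> 1
print(mle_log_likelihood(data, empty), mle_log_likelihood(data, arc))
```

In this toy dataset the graph with the arc scores higher than the empty graph, since the second variable is fully determined by the first. A structure search would compare such scores across candidate graphs while rejecting any candidate for which `is_acyclic` returns `False`.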
