Rachada Kongkachandra

Data Generation for Satellite Image Classification Using Self-Supervised Representation Learning

May 28, 2022

Abstract: Supervised deep neural networks are the state of the art for many tasks in the remote sensing domain, even though such techniques require datasets of paired inputs and labels, which are rare and expensive to collect in terms of both manpower and resources. On the other hand, raw satellite images are abundantly available for both commercial and academic purposes. Hence, in this work, we tackle the problem of insufficient labeled data in satellite image classification by introducing a process, based on self-supervised learning, that creates synthetic labels for satellite image patches. These synthetic labels can be used as the training dataset for existing supervised learning techniques. In our experiments, we show that models trained on the synthetic labels perform similarly to models trained on the real labels. In the process of creating the synthetic labels, we also obtain visual representation vectors that are versatile and support knowledge transfer.
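As a rough illustration of how representation vectors could be turned into synthetic labels, the sketch below clusters a handful of vectors with a plain k-means loop and uses the cluster index as the label. The abstract does not say how the labels are derived from the representations, so the clustering step, the function name `kmeans_pseudo_labels`, and the toy two-dimensional `embeddings` are all assumptions made for illustration, not the authors' method.

```python
import math


def kmeans_pseudo_labels(vectors, k, iterations=10):
    """Return one cluster index per vector, used here as a synthetic label.

    Hypothetical helper: plain k-means stands in for whatever labeling
    step the paper actually uses.
    """
    # Deterministic initialisation: the first k vectors serve as centroids.
    centroids = [list(v) for v in vectors[:k]]
    labels = [0] * len(vectors)
    for _ in range(iterations):
        # Assignment step: each vector takes the index of its nearest centroid.
        for i, v in enumerate(vectors):
            labels[i] = min(range(k), key=lambda c: math.dist(v, centroids[c]))
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Toy stand-ins for the representation vectors of four image patches.
embeddings = [(0.1, 0.2), (0.9, 1.0), (0.0, 0.1), (1.0, 0.9)]
pseudo_labels = kmeans_pseudo_labels(embeddings, k=2)
print(pseudo_labels)
```

The resulting `pseudo_labels` list could then be paired with the patches and passed to any supervised classifier in place of hand-made labels.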

Automatic Training Data Synthesis for Handwriting Recognition Using the Structural Crossing-Over Technique

Oct 09, 2014

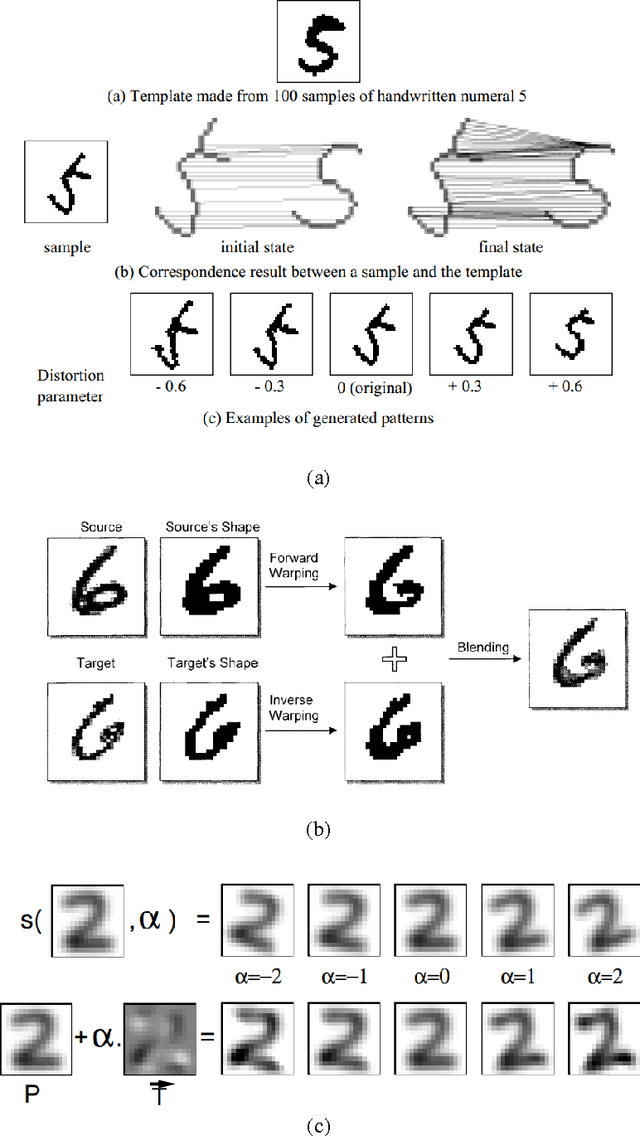

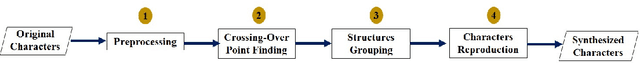

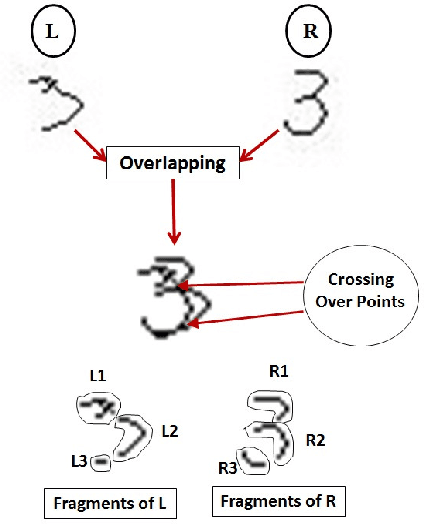

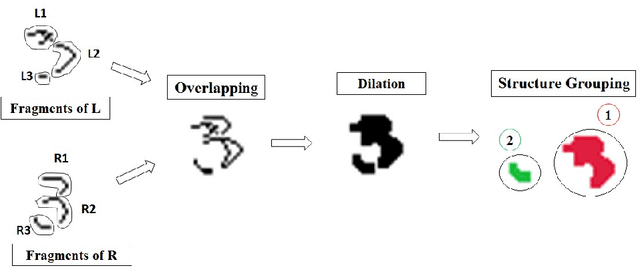

Abstract: The paper presents a novel technique called "Structural Crossing-Over" to synthesize suitable data for training machine-learning-based handwriting recognition. The proposed technique can provide a greater variety of training patterns than existing approaches such as elastic distortion and tangent-based affine transformation. A pair of training characters is chosen, their similar and different structures are analyzed, and the two are then crossed over to generate new characters. The experiments compare the performance of tangent-based affine transformation and the proposed approach in terms of the variety of generated characters and the recognition error rate. The standard MNIST corpus, comprising 60,000 training characters and 10,000 test characters, is used in the experiments. The proposed technique uses 1,000 characters to synthesize 60,000 characters, which are then used to train and test a benchmark handwriting recognition system that uses Histogram of Oriented Gradients (HOG) features and a Support Vector Machine (SVM) as the recognizer. The experiment yields an error rate of 8.06%, which significantly outperforms the tangent-based affine transformation and the original MNIST training data, at 11.74% and 16.55%, respectively.

* 8 pages, 6 figures
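To put the reported error rates in perspective, the short calculation below converts them into misclassified-character counts and relative error reductions. It is only arithmetic on the figures quoted in the abstract; it assumes the percentages are measured on the 10,000 MNIST test characters, and the helper names `misclassified` and `relative_reduction` are illustrative.

```python
TEST_SET_SIZE = 10_000  # assumption: error rates refer to the MNIST test set
SEED_CHARACTERS = 1_000
SYNTHESIZED_CHARACTERS = 60_000

# Error rates (percent) as quoted in the abstract.
error_rates = {
    "structural crossing-over": 8.06,
    "tangent-based affine transformation": 11.74,
    "original MNIST training data": 16.55,
}


def misclassified(error_percent, n=TEST_SET_SIZE):
    """Number of test characters misclassified at the given error rate."""
    return round(error_percent / 100 * n)


def relative_reduction(baseline, proposed):
    """Relative error reduction of `proposed` over `baseline`, in percent."""
    return (baseline - proposed) / baseline * 100


proposed = error_rates["structural crossing-over"]
for name, rate in error_rates.items():
    line = f"{name}: {rate}% -> {misclassified(rate)} errors"
    if name != "structural crossing-over":
        line += f", reduced by {relative_reduction(rate, proposed):.1f}% with crossing-over"
    print(line)

# Each seed character yields this many synthesized characters on average.
print(SYNTHESIZED_CHARACTERS // SEED_CHARACTERS)
```

Under that assumption, the proposed technique misclassifies 806 test characters, against 1,174 and 1,655 for the two baselines, which is roughly a 31% and a 51% relative reduction in errors.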
