Raúl Pérez

Learning to Select Goals in Automated Planning with Deep-Q Learning

Jun 20, 2024

Abstract: In this work we propose a planning and acting architecture endowed with a module which learns to select subgoals with Deep Q-Learning. This allows us to decrease the load of a planner when faced with scenarios with real-time restrictions. We have trained this architecture on a video game environment used as a standard test-bed for intelligent systems applications, testing it on different levels of the same game to evaluate its generalization abilities. We have measured the performance of our approach as more training data is made available, as well as compared it with both a state-of-the-art, classical planner and the standard Deep Q-Learning algorithm. The results obtained show our model performs better than the alternative methods considered, when both plan quality (plan length) and time requirements are taken into account. On the one hand, it is more sample-efficient than standard Deep Q-Learning, and it is able to generalize better across levels. On the other hand, it reduces problem-solving time when compared with a state-of-the-art automated planner, at the expense of obtaining plans with only 9% more actions.

* 25 pages, 4 figures

Discovering and Explaining Driver Behaviour under HoS Regulations

Jan 12, 2023

Abstract: Transport authorities worldwide are imposing complex Hours of Service (HoS) regulations on drivers, which constrain the amount of working, driving and resting time when delivering a service. As a consequence, transport companies are responsible not only for scheduling driving plans aligned with the laws that define the legal behaviour of a driver, but also for monitoring and identifying as soon as possible problematic patterns that can incur costs due to sanctions. Transport experts are frequently in charge of many drivers and lack the time to analyse the vast amount of data recorded by onboard sensors, and companies have grown accustomed to paying sanctions rather than predicting and forestalling wrongdoing. This paper presents an application for summarising raw driver activity logs according to these regulations and for explaining driver behaviour in a human-readable format. The system employs planning, constraint, and clustering techniques to extract and describe what the driver has been doing, while identifying infractions and the activities that give rise to them. Furthermore, it groups drivers based on similar driving patterns. Experiments on real-world data indicate that recurring driving patterns can be clustered, from short basic driving sequences to whole driver working days.

Learning Numerical Action Models from Noisy Input Data

Nov 09, 2021

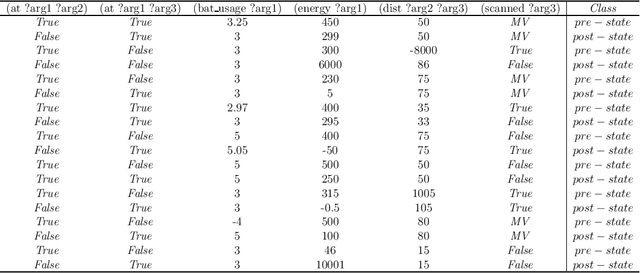

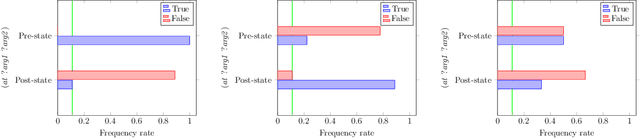

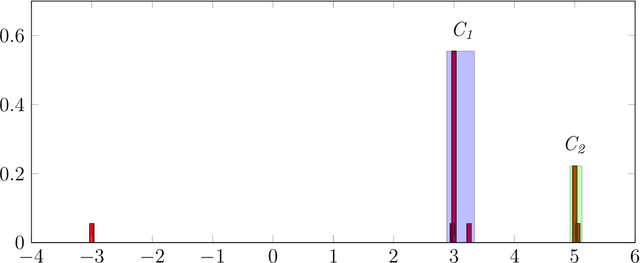

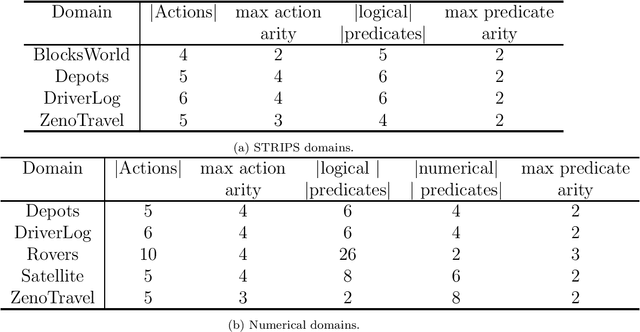

Abstract: This paper presents the PlanMiner-N algorithm, a domain learning technique based on the PlanMiner domain learning algorithm. The algorithm presented here improves the learning capabilities of PlanMiner when using noisy data as input. The PlanMiner algorithm is able to infer arithmetic and logical expressions to learn numerical planning domains from the input data, but it was designed to work under conditions of incompleteness, making it unreliable when facing noisy input data. In this paper, we propose a series of enhancements to the learning process of PlanMiner to expand its capabilities to learn from noisy data. These methods preprocess the input data by detecting and filtering noise, and examine the learned action models to find erroneous preconditions/effects in them. The methods proposed in this paper were tested using a set of domains from the International Planning Competition (IPC). The results obtained indicate that PlanMiner-N greatly improves the performance of PlanMiner when facing noisy input data.
