Róbert Moni

Imitation Learning for Generalizable Self-driving Policy with Sim-to-real Transfer

Jun 22, 2022

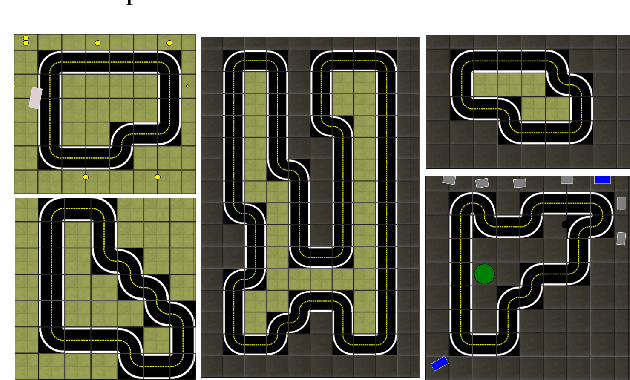

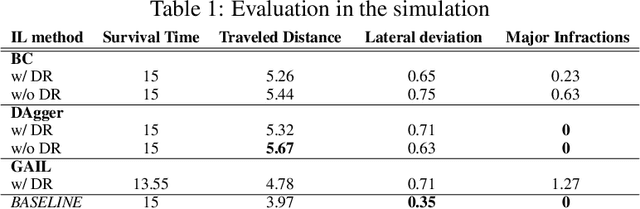

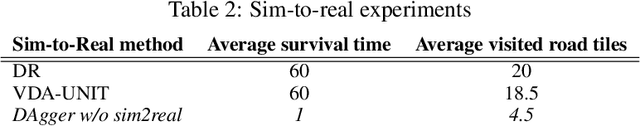

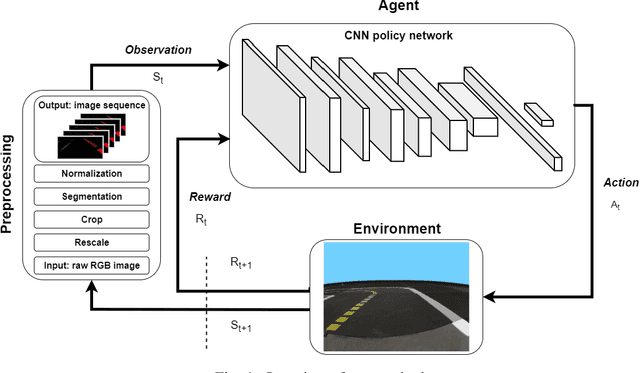

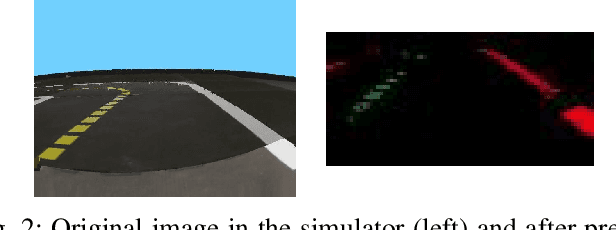

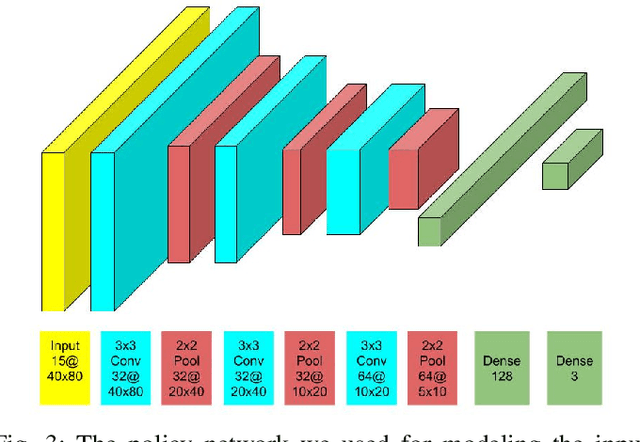

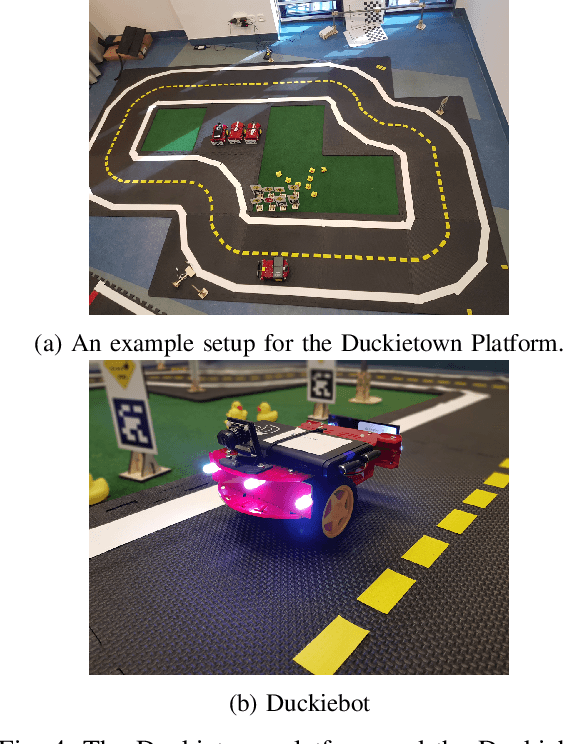

Abstract: Imitation Learning uses the demonstrations of an expert to uncover the optimal policy and it is suitable for real-world robotics tasks as well. In this case, however, the training of the agent is carried out in a simulation environment due to safety, economic and time constraints. Later, the agent is applied in the real-life domain using sim-to-real methods. In this paper, we apply Imitation Learning methods that solve a robotics task in a simulated environment and use transfer learning to apply these solutions in the real-world environment. Our task is set in the Duckietown environment, where the robotic agent has to follow the right lane based on the input images of a single forward-facing camera. We present three Imitation Learning and two sim-to-real methods capable of achieving this task. A detailed comparison is provided on these techniques to highlight their advantages and disadvantages.

Sim-to-real reinforcement learning applied to end-to-end vehicle control

Dec 14, 2020

Abstract: In this work, we study vision-based end-to-end reinforcement learning on vehicle control problems, such as lane following and collision avoidance. Our controller policy is able to control a small-scale robot to follow the right-hand lane of a real two-lane road, while its training was carried out solely in simulation. Our model, realized by a simple convolutional network, relies only on images from a forward-facing monocular camera and generates continuous actions that directly control the vehicle. To train this policy we used Proximal Policy Optimization, and to achieve the generalization capability required for real-world performance we used domain randomization. We carried out a thorough analysis of the trained policy by measuring multiple performance metrics and comparing these to baselines that rely on other methods. To assess the quality of the simulation-to-reality transfer learning process and the performance of the controller in the real world, we measured simple metrics on a real track and compared these with results from a matching simulation. Further analysis was carried out by visualizing salient object maps.

* © 2020 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.

Robust Reinforcement Learning-based Autonomous Driving Agent for Simulation and Real World

Sep 23, 2020

Abstract: Deep Reinforcement Learning (DRL) has recently been used successfully to solve a range of challenges, e.g. complex board and computer games. However, solving real-world robotics tasks with DRL seems to be a more difficult challenge. The desired approach would be to train the agent in a simulator and transfer it to the real world. Still, models trained in a simulator tend to perform poorly in real-world environments due to the differences between the two domains. In this paper, we present a DRL-based algorithm that is capable of performing autonomous robot control using Deep Q-Networks (DQN). In our approach, the agent is trained in a simulated environment and is able to navigate in both simulated and real-world environments. The method is evaluated in the Duckietown environment, where the agent has to follow the lane based on a monocular camera input. The trained agent is able to run on limited hardware resources and its performance is comparable to state-of-the-art approaches.
