Qizhu Dai

Enriching Information and Preserving Semantic Consistency in Expanding Curvilinear Object Segmentation Datasets

Jul 11, 2024

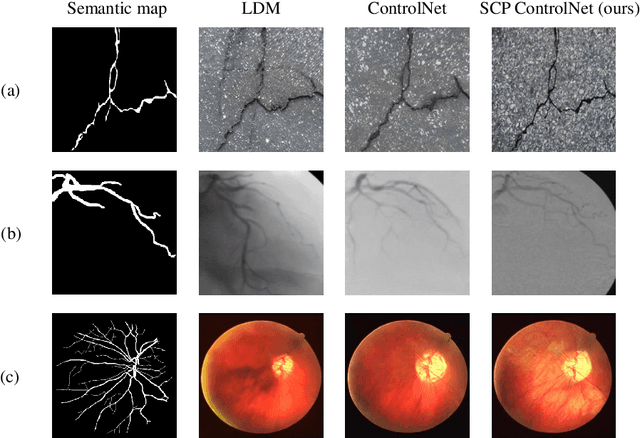

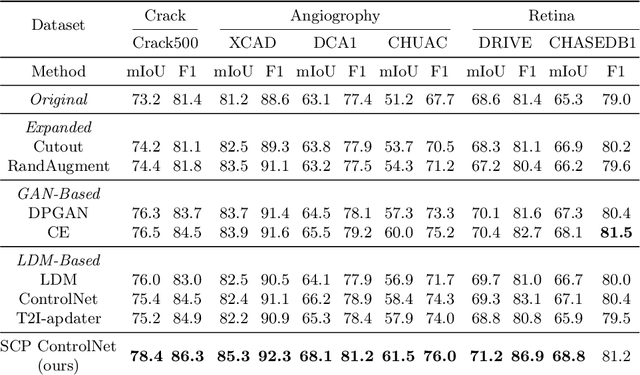

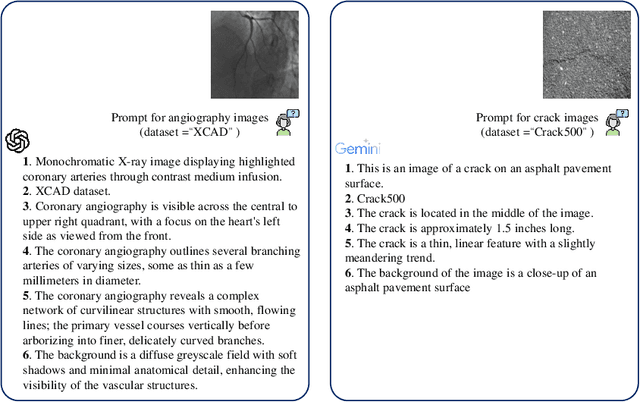

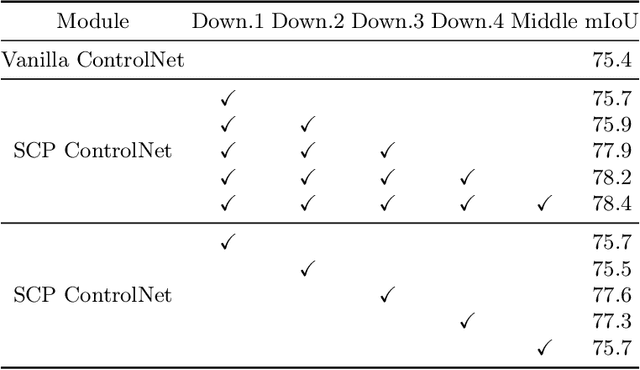

Abstract: Curvilinear object segmentation plays a crucial role across various applications, yet datasets in this domain are often small because data acquisition and annotation are costly. To address this, this paper introduces a novel approach for expanding curvilinear object segmentation datasets, focusing on enhancing the informativeness of generated data and the consistency between semantic maps and generated images. Our method enriches the informativeness of synthetic data by generating curvilinear objects from multiple textual features. By combining textual features from each sample in the original dataset, we obtain synthetic images that lie beyond the original dataset's distribution. This required creating the Curvilinear Object Segmentation based on Text Generation (COSTG) dataset. Designed to surpass the limitations of conventional datasets, COSTG incorporates not only standard semantic maps but also textual descriptions of curvilinear object features. To ensure consistency between synthetic semantic maps and images, we introduce the Semantic Consistency Preserving ControlNet (SCP ControlNet), an adaptation of ControlNet with Spatially-Adaptive Normalization (SPADE) that preserves semantic information that would typically be washed away in normalization layers. This modification facilitates more accurate semantic image synthesis. Experimental results demonstrate the efficacy of our approach across three types of curvilinear objects (angiography, crack and retina) and six public datasets (CHUAC, XCAD, DCA1, DRIVE, CHASEDB1 and Crack500). The synthetic data generated by our method not only expands the dataset but also effectively improves the performance of other curvilinear object segmentation models. Source code and dataset are available at https://github.com/tanlei0/COSTG.

CorefDRE: Document-level Relation Extraction with coreference resolution

Feb 22, 2022

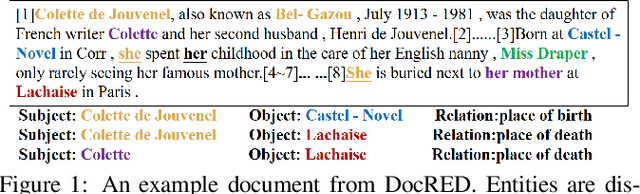

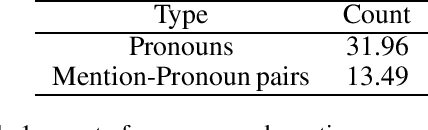

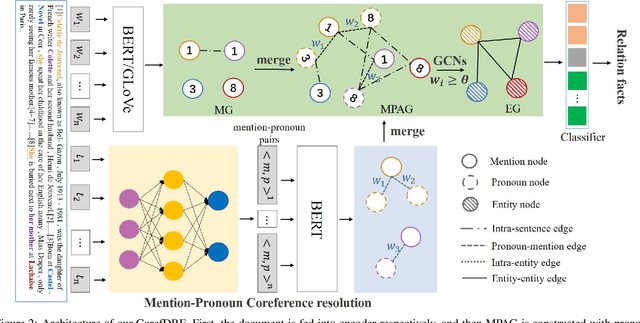

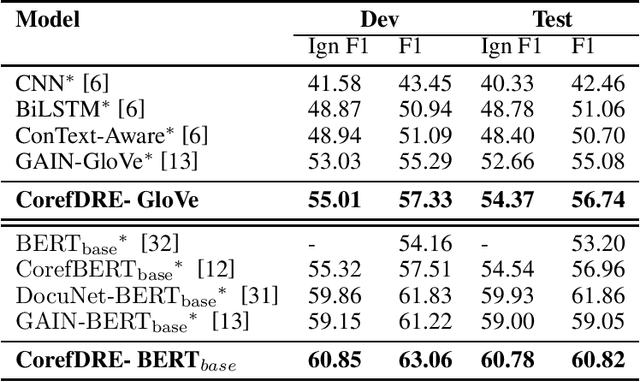

Abstract: Document-level relation extraction aims to extract relational facts from a document consisting of multiple sentences, in which pronouns that refer across sentences are ubiquitous, unlike in the single-sentence setting. However, most previous work focuses on coreference resolution among mentions other than pronouns, and rarely attends to mention-pronoun coreference or to capturing the relations. To represent multi-sentence features through pronouns, we imitate the human reading process by leveraging coreference information when dynamically constructing a heterogeneous graph to enhance semantic information. Since pronouns are notoriously ambiguous in the graph, mention-pronoun coreference resolution is introduced to calculate the affinity between pronouns and their corresponding mentions, and a noise suppression mechanism is proposed to reduce the noise caused by pronouns. Experiments on the public datasets DocRED, DialogRE and MPDD show that Coref-aware Doc-level Relation Extraction based on Graph Inference Network outperforms the state of the art.
