Qianqian Zou

Calibrated and Efficient Sampling-Free Confidence Estimation for LiDAR Scene Semantic Segmentation

Nov 18, 2024

Abstract: Reliable deep learning models require not only accurate predictions but also well-calibrated confidence estimates to ensure dependable uncertainty estimation. This is crucial in safety-critical applications like autonomous driving, which depend on rapid and precise semantic segmentation of LiDAR point clouds for real-time 3D scene understanding. In this work, we introduce a sampling-free approach for estimating well-calibrated confidence values for classification tasks, achieving alignment with true classification accuracy and significantly reducing inference time compared to sampling-based methods. Our evaluation using the Adaptive Calibration Error (ACE) metric for LiDAR semantic segmentation shows that our approach maintains well-calibrated confidence values while running faster than a sampling-based baseline. Additionally, reliability diagrams reveal that our method produces underconfident rather than overconfident predictions, an advantage for safety-critical applications. Our sampling-free approach offers well-calibrated and time-efficient predictions for LiDAR scene semantic segmentation.
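
The abstract uses ACE without defining it. As commonly defined, ACE ranks the predicted probabilities for each class, splits them into bins that each hold the same number of samples, and averages |accuracy - confidence| over all classes and bins. The pure-Python sketch below follows that definition; the function name, the input layout, and the default of 10 bins are assumptions, not settings taken from the paper.

```python
def adaptive_calibration_error(probs, labels, n_bins=10):
    """Adaptive Calibration Error with equal-count bins per class (illustrative sketch).

    probs:  per-sample lists of predicted class probabilities (e.g. one per LiDAR point)
    labels: true class index for each sample
    """
    n_samples, n_classes = len(probs), len(probs[0])
    total = 0.0
    for k in range(n_classes):
        # Rank samples by their predicted probability for class k.
        order = sorted(range(n_samples), key=lambda i: probs[i][k])
        for r in range(n_bins):
            # Adaptive binning: each bin holds (about) the same number of samples.
            idx = order[r * n_samples // n_bins:(r + 1) * n_samples // n_bins]
            if not idx:
                continue  # only happens when there are fewer samples than bins
            conf = sum(probs[i][k] for i in idx) / len(idx)
            acc = sum(1 for i in idx if labels[i] == k) / len(idx)
            total += abs(acc - conf)
    return total / (n_classes * n_bins)


# Usage: a model that always predicts class 0 with probability 0.9,
# while only half of the samples actually belong to class 0.
ace = adaptive_calibration_error([[0.9, 0.1]] * 4, [0, 1, 0, 1], n_bins=2)
print(round(ace, 3))  # 0.4
```

Because ACE takes the absolute gap, it does not indicate whether a model is over- or underconfident; that direction is what the reliability diagrams mentioned in the abstract reveal (confidence above accuracy means overconfidence, below means underconfidence).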

3D Uncertain Distance Field Mapping using GMM and GP

Mar 12, 2024

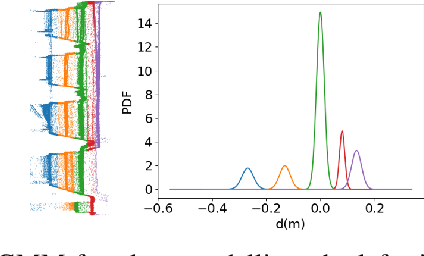

Abstract: In this study, we address the challenge of constructing continuous three-dimensional (3D) models that accurately represent uncertain surfaces derived from noisy and incomplete LiDAR scanning data. Building upon our prior work, which used a Gaussian Process (GP) and a Gaussian Mixture Model (GMM) for structured building models, we introduce a more general approach tailored to complex surfaces in urban scenes, applying four-dimensional (4D) GMM regression and GP with derivative observations. A Hierarchical GMM (HGMM) is employed to optimize the number of GMM components and to speed up GMM training. With the prior map obtained from the HGMM, GP inference then refines the final map. Our approach models the implicit surface of the geo-object and enables inference in regions that are not completely covered by measurements. The integration of GMM and GP yields well-calibrated uncertainty estimates alongside the surface model, enhancing both accuracy and reliability. The proposed method is evaluated on real data collected by a mobile mapping system. In terms of mapping accuracy and uncertainty quantification, the proposed method outperforms other methods such as the Gaussian Process Implicit Surface map (GPIS) and the log-Gaussian Process Implicit Surface map (Log-GPIS) on the evaluated datasets, achieving lower RMSEs and higher log-likelihood values at a lower computational cost.
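
The abstract does not give the regression equations. As a minimal sketch of standard Gaussian mixture regression, assume a 4D GMM over (x, y, z, d), where d is the distance value, has already been fitted (for example by the HGMM step). Conditioning each component on a query position and mixing the results yields both a predicted distance and a predictive variance. The function names and parameter layout are hypothetical, and the subsequent GP refinement is not shown.

```python
import numpy as np


def log_gaussian_pdf(x, mean, cov):
    """Log density of a multivariate normal N(x; mean, cov)."""
    diff = x - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = diff @ np.linalg.solve(cov, diff)
    return -0.5 * (maha + logdet + len(x) * np.log(2.0 * np.pi))


def gmr_predict(x, weights, means, covs, n_in=3):
    """Hypothetical helper: condition a joint GMM over [x, y, z, d] on a query
    position and return the predictive mean and variance of the distance d."""
    x = np.asarray(x, dtype=float)
    log_resp, cond_means, cond_vars = [], [], []
    for w, mu, cov in zip(weights, means, covs):
        mu_x, mu_d = mu[:n_in], mu[n_in:]
        s_xx = cov[:n_in, :n_in]   # position block
        s_dx = cov[n_in:, :n_in]   # distance-position cross block, shape (1, n_in)
        s_dd = cov[n_in:, n_in:]   # distance block, shape (1, 1)
        # Unnormalized log responsibility of this component for the query.
        log_resp.append(np.log(w) + log_gaussian_pdf(x, mu_x, s_xx))
        # Gaussian conditioning: gain = s_dx @ inv(s_xx), computed via a solve.
        gain = np.linalg.solve(s_xx, s_dx.T).T
        cond_means.append((mu_d + gain @ (x - mu_x))[0])
        cond_vars.append((s_dd - gain @ s_dx.T)[0, 0])
    log_resp = np.array(log_resp)
    # Normalize responsibilities in a numerically stable way.
    resp = np.exp(log_resp - log_resp.max())
    resp /= resp.sum()
    cond_means, cond_vars = np.array(cond_means), np.array(cond_vars)
    mean = resp @ cond_means
    # Law of total variance: within-component spread plus between-component spread.
    var = resp @ (cond_vars + cond_means ** 2) - mean ** 2
    return mean, var


# Usage: two decoupled components with distances 0 and 5, queried halfway between them.
weights = [0.5, 0.5]
means = [np.array([0.0, 0.0, 0.0, 0.0]), np.array([10.0, 0.0, 0.0, 5.0])]
covs = [np.eye(4), np.eye(4)]
mean_d, var_d = gmr_predict([5.0, 0.0, 0.0], weights, means, covs)
# mean_d is 2.5; var_d is 7.25, inflated because the two components disagree.
```

The variance term is what makes this kind of prior useful for uncertainty-aware mapping: where components disagree or data are sparse, the predicted distance comes with a larger variance.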

Gaussian Process Mapping of Uncertain Building Models with GMM as Prior

Dec 15, 2022

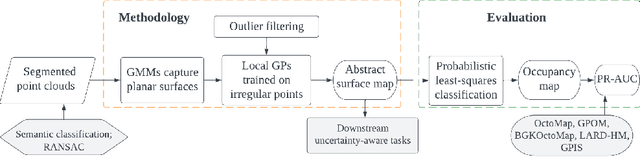

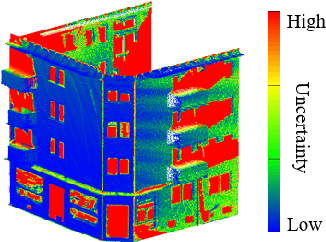

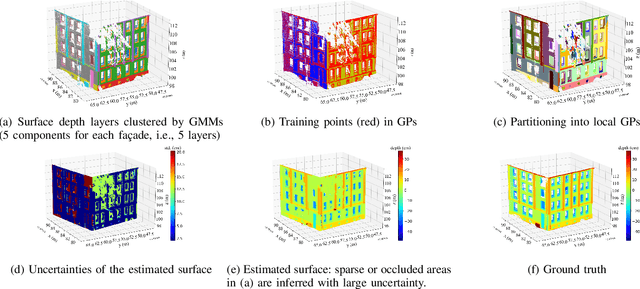

Abstract: Mapping with uncertainty representation is required in many research domains, such as localization and sensor fusion. Although uncertainty in the pose estimation of an ego-robot using map information has been widely explored, the quality of the reference maps is often neglected. To avoid potential problems caused by map errors and a lack of uncertainty quantification, an adequate uncertainty measure for the maps is required. In this paper, we propose uncertain building models that represent the abstract map surface with a Gaussian Process (GP), measuring map uncertainty in a probabilistic way. To reduce redundant computation for simple planar objects, facets extracted from a Gaussian Mixture Model (GMM) are combined with the implicit GP map, and local GP-block techniques are used as well. The proposed method is evaluated on LiDAR point clouds of city buildings collected by a mobile mapping system. Compared with other methods such as Octomap, the Gaussian Process Occupancy Map (GPOM), and Bayesian Generalized Kernel Inference (BGKOctomap), our method achieves a higher Precision-Recall AUC for the evaluated buildings.
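
The abstract does not describe how facets are extracted from the GMM. One common approach, assumed here and not confirmed by the source, treats a flat 3D Gaussian component as a planar facet: the covariance eigenvector with the smallest eigenvalue serves as the plane normal, and the plane passes through the component mean. `facet_from_component` and `signed_distance` are hypothetical names, and the GP map built on top of such facets is not shown.

```python
import numpy as np


def facet_from_component(mean, cov):
    """Hypothetical helper: plane n . p + offset = 0 from one 3D Gaussian component."""
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    normal = eigvecs[:, 0]                  # unit direction of least spread
    offset = -float(normal @ mean)          # plane passes through the component mean
    flatness = float(eigvals[0] / eigvals.sum())  # close to 0 for a thin, planar component
    return normal, offset, flatness


def signed_distance(points, normal, offset):
    """Signed point-to-plane distance for an (N, 3) array of points."""
    return points @ normal + offset


# Usage: noisy synthetic points on the plane z = 2, standing in for one GMM component.
rng = np.random.default_rng(0)
pts = np.column_stack([
    rng.uniform(0.0, 10.0, 500),
    rng.uniform(0.0, 5.0, 500),
    2.0 + rng.normal(0.0, 0.01, 500),
])
normal, offset, flatness = facet_from_component(pts.mean(axis=0), np.cov(pts, rowvar=False))
print(normal, offset, flatness)  # normal is close to (0, 0, +/-1), flatness near 0
```

A flatness ratio like this is one way to decide which components are planar enough to stand in for GP computation; any threshold for that decision would be a further assumption.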
