Qi Zhan

Assessment of Generative Named Entity Recognition in the Era of Large Language Models

Jan 25, 2026Abstract:Named entity recognition (NER) is evolving from a sequence labeling task into a generative paradigm with the rise of large language models (LLMs). We conduct a systematic evaluation of open-source LLMs on both flat and nested NER tasks. We investigate several research questions including the performance gap between generative NER and traditional NER models, the impact of output formats, whether LLMs rely on memorization, and the preservation of general capabilities after fine-tuning. Through experiments across eight LLMs of varying scales and four standard NER datasets, we find that: (1) With parameter-efficient fine-tuning and structured formats like inline bracketed or XML, open-source LLMs achieve performance competitive with traditional encoder-based models and surpass closed-source LLMs like GPT-3; (2) The NER capability of LLMs stems from instruction-following and generative power, not mere memorization of entity-label pairs; and (3) Applying NER instruction tuning has minimal impact on general capabilities of LLMs, even improving performance on datasets like DROP due to enhanced entity understanding. These findings demonstrate that generative NER with LLMs is a promising, user-friendly alternative to traditional methods. We release the data and code at https://github.com/szu-tera/LLMs4NER.

Verified Lifting of Deep learning Operators

Dec 30, 2024

Abstract:Deep learning operators are fundamental components of modern deep learning frameworks. With the growing demand for customized operators, it has become increasingly common for developers to create their own. However, designing and implementing operators is complex and error-prone, due to hardware-specific optimizations and the need for numerical stability. There is a pressing need for tools that can summarize the functionality of both existing and user-defined operators. To address this gap, this work introduces a novel framework for the verified lifting of deep learning operators, which synthesizes high-level mathematical formulas from low-level implementations. Our approach combines symbolic execution, syntax-guided synthesis, and SMT-based verification to produce readable and formally verified mathematical formulas. In synthesis, we employ a combination of top-down and bottom-up strategies to explore the vast search space efficiently; In verification, we design invariant synthesis patterns and leverage SMT solvers to validate the correctness of the derived summaries; In simplification, we use egraph-based techniques with custom rules to restore complex formulas to their natural, intuitive forms. Evaluated on a dataset of deep learning operators implemented in Triton from the real world, our method demonstrates the effectiveness of synthesis and verification compared to existing techniques. This framework bridges the gap between low-level implementations and high-level abstractions, improving understanding and reliability in deep learning operator development.

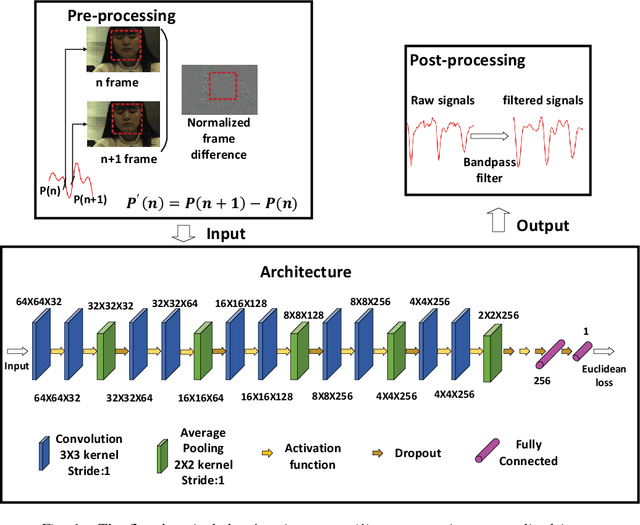

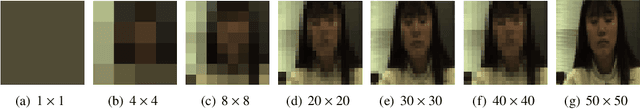

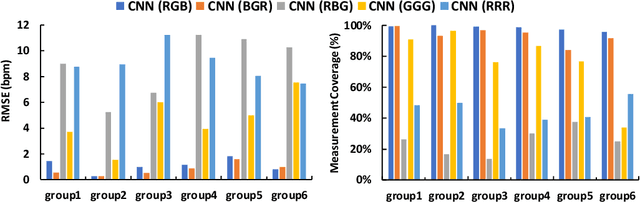

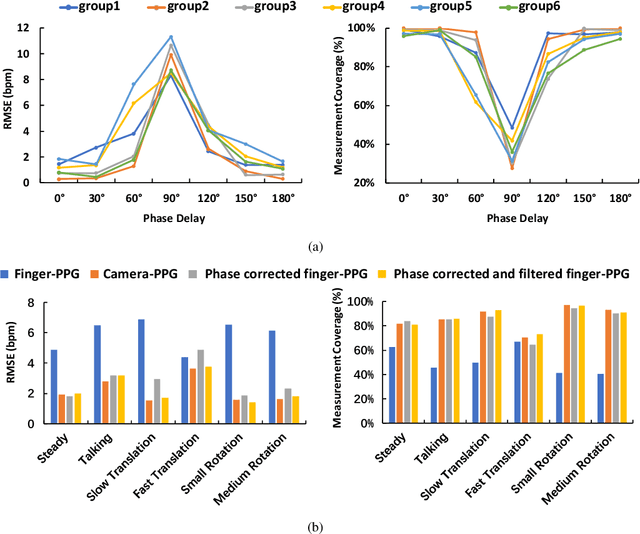

Analysis of CNN-based remote-PPG to understand limitations and sensitivities

Nov 07, 2019

Abstract:Deep learning based on convolutional neural network (CNN) has shown promising results in various vision-based applications, recently also in camera-based vital signs monitoring. The CNN-based Photoplethysmography (PPG) extraction has, so far, been focused on performance rather than understanding. In this paper, we try to answer 4 questions with experiments aiming at improving our understanding of this methodology as it gains popularity. We conclude that the network exploits the blood absorption color variance to extract the physiological signals, and that the choice and parameters (phase, spectral content, etc.) of the reference-signal may be more critical than anticipated. Furthermore, we conclude that the availability of multiple convolutional kernels in the skin-region is necessary for the method to arrive at a flexible channel combination through the spatial operation, but does not provide the same advantages as a multi-site measurement with a knowledge based PPG extraction method. Finally, we show that a hybrid of knowledge based color-channel combination (pre-processing) and CNN is possible and enables an improved motion robustness.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge