Purba Mukherjee

Geometric Determinations Of Characteristic Redshifts From DESI-DR2 BAO and DES-SN5YR Observations: Hints For New Expansion Rate Anomalies

May 25, 2025

Abstract: In this work, we perform a model-agnostic reconstruction of the cosmic expansion history by combining DESI-DR2 BAO and DES-SN5YR data, with a focus on geometric determination of characteristic redshifts where notable tensions in the expansion rate are found to emerge. Employing Gaussian process regression alongside knot-based spline techniques, we reconstruct cosmic distances and their derivatives to pinpoint these characteristic redshifts and infer $E(z)$. Our analysis reveals significant deviations of approximately 4 to 5$\sigma$ from the Planck 2018 $\Lambda$CDM predictions, particularly pronounced in the redshift range $z \sim 0.35-0.55$. These anomalies are consistently observed across both reconstruction methods and combined datasets, indicating robust late-time departures that could signal new physics beyond the standard cosmological framework. The joint use of BAO and SN probes enhances the precision of our constraints, allowing us to isolate these deviations without reliance on specific cosmological assumptions. Our findings underscore the role of characteristic redshifts as sensitive indicators of expansion rate anomalies and motivate further scrutiny with forthcoming datasets from DESI-5YR BAO, Euclid, and LSST. These future surveys will tighten constraints and help distinguish whether these late-time anomalies arise from new fundamental physics or unresolved systematics in the data.
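To make the idea of a characteristic redshift concrete, the sketch below locates two such redshifts numerically in a fiducial model and checks that the expansion rate can be read off from the distance alone. It is an illustration only, not the authors' reconstruction pipeline: it assumes a spatially flat $\Lambda$CDM background with Planck-2018-like values ($H_0 = 67.4$, $\Omega_m = 0.315$) chosen here for convenience, and it uses the flat-space identities $H = c/D_A$ where $D_A' = 0$ and $H = c/[(2+z)D_A]$ where $D_A' = D_A$. All variable names are hypothetical.

```python
import numpy as np

C_KM_S = 299792.458          # speed of light in km/s
H0, OMEGA_M = 67.4, 0.315    # illustrative Planck-2018-like values (assumption)

def hubble(z):
    """Flat LambdaCDM expansion rate in km/s/Mpc (fiducial model, assumption)."""
    return H0 * np.sqrt(OMEGA_M * (1 + z) ** 3 + 1 - OMEGA_M)

# Fine redshift grid and cumulative trapezoidal integral of c/H for D_M(z).
z = np.linspace(0.0, 3.0, 30001)
integrand = C_KM_S / hubble(z)
steps = 0.5 * (integrand[1:] + integrand[:-1]) * np.diff(z)
d_m = np.concatenate(([0.0], np.cumsum(steps)))

d_a = d_m / (1 + z)                # angular diameter distance in Mpc
d_a_prime = np.gradient(d_a, z)    # numerical derivative dD_A/dz

# Characteristic redshift 1: D_A' = 0 (maximum of D_A), where H = c / D_A.
i_peak = np.argmax(d_a)
z_peak = z[i_peak]
h_peak_geometric = C_KM_S / d_a[i_peak]

# Characteristic redshift 2: D_A' = D_A, where H = c / [(2 + z) D_A].
# D_A' - D_A is positive at z = 0 and changes sign once on this grid.
i_equal = np.argmax(d_a_prime - d_a < 0)
z_equal = z[i_equal]
h_equal_geometric = C_KM_S / ((2 + z_equal) * d_a[i_equal])

print(f"D_A' = 0   at z = {z_peak:.3f}: H_geom = {h_peak_geometric:.2f}, "
      f"H_model = {hubble(z_peak):.2f}")
print(f"D_A' = D_A at z = {z_equal:.3f}: H_geom = {h_equal_geometric:.2f}, "
      f"H_model = {hubble(z_equal):.2f}")
```

In a data-driven analysis the fiducial `hubble` function would be replaced by a reconstructed distance curve and its derivative, so the inferred $H(z)$ at these redshifts depends only on the reconstruction and the assumed geometry.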

A New $\sim 5\sigma$ Tension at Characteristic Redshift from DESI DR1 and DES-SN5YR observations

Mar 04, 2025

Abstract: We perform a model-independent reconstruction of the angular diameter distance ($D_{A}$) using the Multi-Task Gaussian Process (MTGP) framework with DESI-DR1 BAO and DES-SN5YR datasets. We calibrate the comoving sound horizon at the baryon drag epoch $r_d$ to the Planck best-fit value, ensuring consistency with early-universe physics. With the reconstructed $D_A$ at two key redshifts, $z\sim 1.63$ (where $D_{A}^{\prime} =0$) and $z\sim 0.512$ (where $D_{A}^{\prime} = D_{A}$), we derive the expansion rate of the Universe $H(z)$ at these redshifts. Our findings reveal that at $z\sim 1.63$, $H(z)$ is fully consistent with the Planck-2018 $\Lambda$CDM prediction, confirming no new physics at that redshift. However, at $z \sim 0.512$, the derived $H(z)$ shows a more than $5\sigma$ discrepancy with the Planck-2018 $\Lambda$CDM prediction, suggesting a possible breakdown of the $\Lambda$CDM model as constrained by Planck-2018 at this lower redshift. This emerging $\sim 5\sigma$ tension at $z\sim 0.512$, distinct from the existing "Hubble Tension", may signal the first strong evidence for new physics at low redshifts.
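The link between $D_A$ at these two redshifts and $H(z)$ follows from a short geometric identity. As a sketch, assuming a spatially flat FLRW background (an assumption made here for illustration; the abstract does not state how curvature is treated), with $D_M$ the transverse comoving distance, $c$ the speed of light, and primes denoting derivatives with respect to $z$:

$$
D_A(z) = \frac{D_M(z)}{1+z}, \qquad D_M'(z) = \frac{c}{H(z)}
\quad\Longrightarrow\quad
D_A'(z) = \frac{1}{1+z}\left[\frac{c}{H(z)} - D_A(z)\right].
$$

Imposing the two defining conditions then gives

$$
D_A' = 0 \;\Rightarrow\; H(z) = \frac{c}{D_A(z)},
\qquad
D_A' = D_A \;\Rightarrow\; H(z) = \frac{c}{(2+z)\,D_A(z)} .
$$

Under this assumption, a reconstructed value of $D_A$ at the characteristic redshift fixes $H$ there without a separate estimate of the derivative.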

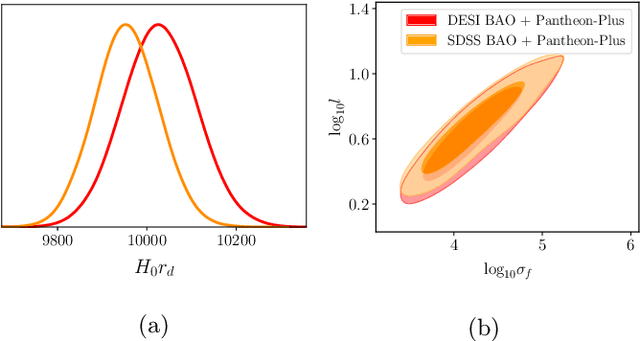

Deep Learning Based Recalibration of SDSS and DESI BAO Alleviates Hubble and Clustering Tensions

Dec 19, 2024

Abstract: Conventional calibration of Baryon Acoustic Oscillations (BAO) data relies on estimation of the sound horizon at drag epoch $r_d$ from early universe observations by assuming a cosmological model. We present a recalibration of two independent BAO datasets, SDSS and DESI, by employing deep learning techniques for model-independent estimation of $r_d$, and explore the impacts on $\Lambda$CDM cosmological parameters. Significant reductions in both Hubble ($H_0$) and clustering ($S_8$) tensions are observed for both the recalibrated datasets. Moderate shifts in some other parameters hint towards further exploration of such data-driven approaches.

Model-Agnostic Cosmological Inference with SDSS-IV eBOSS: Simultaneous Probing for Background and Perturbed Universe

Dec 18, 2024

Abstract: Here we explore certain subtle features imprinted in data from the completed Sloan Digital Sky Survey IV (SDSS-IV) extended Baryon Oscillation Spectroscopic Survey (eBOSS) as a combined probe for the background and perturbed Universe. We reconstruct the Baryon Acoustic Oscillation (BAO) and Redshift Space Distortion (RSD) observables as functions of redshift, using measurements from SDSS alone. We apply the Multi-Task Gaussian Process (MTGP) framework to model the interdependencies of cosmological observables $D_M(z)/r_d$, $D_H(z)/r_d$, and $f\sigma_8(z)$, and track their evolution across different redshifts. Subsequently, we obtain a constrained three-dimensional phase space containing $D_M(z)/r_d$, $D_H(z)/r_d$, and $f\sigma_8(z)$ at different redshifts probed by the SDSS-IV eBOSS survey. Furthermore, assuming the $\Lambda$CDM model, we obtain constraints on model parameters $\Omega_{m}$, $H_{0}r_{d}$, $\sigma_{8}$ and $S_{8}$ at each redshift probed by SDSS-IV eBOSS. This indicates redshift-dependent trends in $H_0$, $\Omega_m$, $\sigma_8$ and $S_8$ in the $\Lambda$CDM model, suggesting a possible inconsistency in the $\Lambda$CDM model. Ours is a template for model-independent extraction of information for both the background and perturbed Universe using a single galaxy survey, taking into account all the existing correlations between background and perturbed observables, and it can be easily extended to future DESI-3YR as well as Euclid results.

What can we learn about Reionization astrophysical parameters using Gaussian Process Regression?

Jul 28, 2024

Abstract: Reionization is one of the least understood processes in the evolution history of the Universe, mostly because of the numerous astrophysical processes occurring simultaneously about which we do not have a very clear idea so far. In this article, we use the Gaussian Process Regression (GPR) method to learn the reionization history and infer the astrophysical parameters. We reconstruct the UV luminosity density function using the HFF and early JWST data. From the reconstructed history of reionization, the global differential brightness temperature fluctuation during this epoch has been computed. We perform MCMC analysis of the global 21-cm signal using the instrumental specifications of SARAS, in combination with Lyman-$\alpha$ ionization fraction data, Planck optical depth measurements and UV luminosity data. Our analysis reveals that GPR can help infer the astrophysical parameters in a more model-agnostic way than conventional methods. Additionally, we analyze the 21-cm power spectrum using the reconstructed history of reionization and demonstrate how the future 21-cm mission SKA, in combination with Planck and Lyman-$\alpha$ forest data, improves the bounds on the reionization astrophysical parameters by doing a joint MCMC analysis for the astrophysical parameters plus 6 cosmological parameters of the $\Lambda$CDM model. The results indicate that the GPR-based reconstruction technique is a robust learning process, and that the inferences on the astrophysical parameters obtained from it are reliable enough to be used in future analyses.

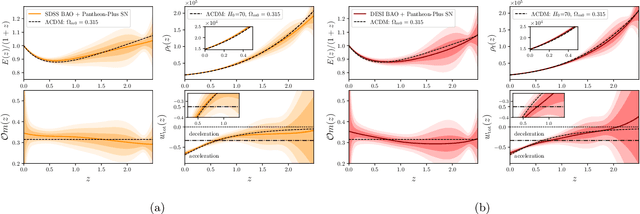

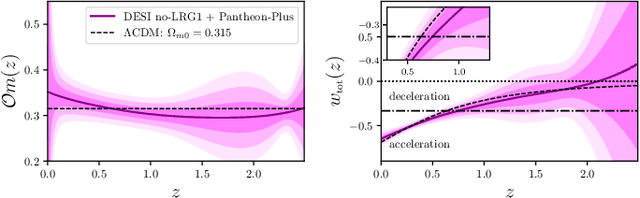

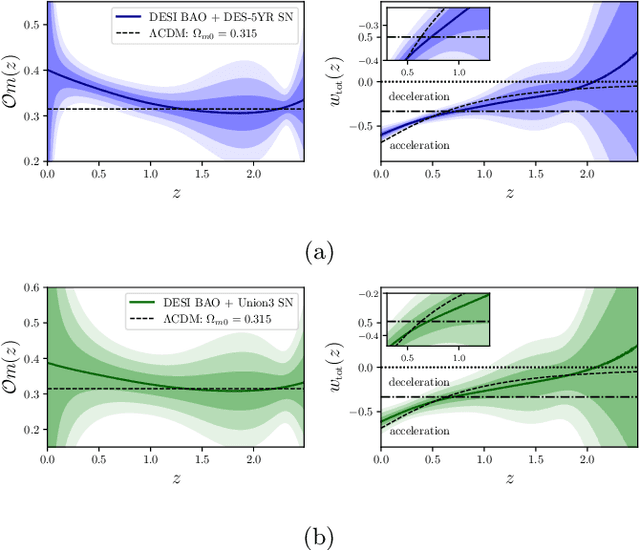

Model-independent cosmological inference post DESI DR1 BAO measurements

May 29, 2024

Abstract: In this work, we implement Gaussian process regression to reconstruct the expansion history of the universe in a model-agnostic manner, using the Pantheon-Plus SN-Ia compilation in combination with two different BAO measurements (SDSS-IV and DESI DR1). In both the reconstructions, the $\Lambda$CDM model is always included in the 95% confidence intervals. We find evidence that the DESI LRG data at $z_{\text{eff}} = 0.51$ is not an outlier within our model-independent framework. We study the $\mathcal{O}m$-diagnostics and the evolution of the total equation of state (EoS) of our universe, which hint towards the possibility of a quintessence-like dark energy scenario with a very slowly varying EoS, and a phantom-crossing in higher $z$. The entire exercise is later complemented by considering two more SN-Ia compilations - DES-5YR and Union3 - in combination with DESI BAO. Reconstruction with the DESI BAO + DES-5YR SN data sets predicts that the $\Lambda$CDM model lies outside the 3$\sigma$ confidence levels, whereas with DESI BAO + Union3 data, the $\Lambda$CDM model is always included within 1$\sigma$. We also report constraints on $H_0 r_d$ from our model-agnostic analysis, independent of the pre-recombination physics. Our results point towards an $\approx$ 2$\sigma$ discrepancy between the DESI + Pantheon-Plus and DESI + DES-5YR data sets, which calls for further investigation.
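For orientation, the two diagnostics named in this abstract are commonly written as follows. These are the standard textbook forms for a spatially flat background, given here as an assumption; the paper's own conventions may differ. With $E(z) = H(z)/H_0$:

$$
\mathcal{O}m(z) = \frac{E^2(z) - 1}{(1+z)^3 - 1},
\qquad
w_{\rm tot}(z) = -1 + \frac{2}{3}\,(1+z)\,\frac{1}{H(z)}\frac{dH}{dz} .
$$

In flat $\Lambda$CDM, $E^2(z) = \Omega_{m}(1+z)^3 + 1 - \Omega_{m}$, so $\mathcal{O}m(z)$ reduces to the constant $\Omega_{m}$. Any redshift dependence in a reconstructed $\mathcal{O}m(z)$ is therefore a direct null test of the model, and both quantities need only $H(z)$ and its first derivative.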

Late-time transition of $M_B$ inferred via neural networks

Feb 16, 2024

Abstract: The strengthening of tensions in the cosmological parameters has led to a reconsideration of fundamental aspects of standard cosmology. The tension in the Hubble constant can also be viewed as a tension between local and early Universe constraints on the absolute magnitude $M_B$ of Type Ia supernovae. In this work, we reconsider the possibility of a variation of this parameter in a model-independent way. We employ neural networks to agnostically constrain the value of the absolute magnitude as well as assess the impact and statistical significance of a variation in $M_B$ with redshift from the Pantheon+ compilation, together with a thorough analysis of the neural network architecture. We find an indication for a transition redshift in the $z\approx 1$ region.
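The equivalence between an $H_0$ tension and an $M_B$ tension can be seen from the standard distance-modulus relation. As a sketch, assuming a spatially flat background and writing $E(z) = H(z)/H_0$ (notation introduced here for illustration), the apparent magnitude of a supernova at redshift $z$ is

$$
m_B(z) = M_B + 5\log_{10}\!\left[\frac{d_L(z)}{\mathrm{Mpc}}\right] + 25,
\qquad
d_L(z) = (1+z)\,\frac{c}{H_0}\int_0^z \frac{dz'}{E(z')} .
$$

The data therefore depend on $M_B$ and $H_0$ only through the combination $M_B - 5\log_{10}H_0$, so a shift in one can be traded for a shift in the other: $\Delta M_B = 5\,\Delta\log_{10}H_0$. For example, moving $H_0$ from 67.4 to 73 km/s/Mpc (illustrative values, not taken from the abstract) corresponds to $\Delta M_B = 5\log_{10}(73/67.4) \approx 0.17$ mag.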

LADDER: Revisiting the Cosmic Distance Ladder with Deep Learning Approaches and Exploring its Applications

Jan 30, 2024

Abstract: We investigate the prospect of reconstructing the "cosmic distance ladder" of the Universe using a novel deep learning framework called LADDER - Learning Algorithm for Deep Distance Estimation and Reconstruction. LADDER is trained on the apparent magnitude data from the Pantheon Type Ia supernovae compilation, incorporating the full covariance information among data points, to produce predictions along with corresponding errors. After employing several validation tests with a number of deep learning models, we pick LADDER as the best performing one. We then demonstrate applications of our method in the cosmological context, that include serving as a model-independent tool for consistency checks for other datasets like baryon acoustic oscillations, calibration of high-redshift datasets such as gamma ray bursts, use as a model-independent mock catalog generator for future probes, etc. Our analysis advocates for interesting yet cautious consideration of machine learning applications in these contexts.

Reconstructing the Hubble parameter with future Gravitational Wave missions using Machine Learning

Mar 09, 2023

Abstract: We study the prospects of Machine Learning algorithms like Gaussian processes (GP) as a tool to reconstruct the Hubble parameter $H(z)$ with two upcoming gravitational wave missions, namely the evolved Laser Interferometer Space Antenna (eLISA) and the Einstein Telescope (ET). We perform non-parametric reconstructions of $H(z)$ with GP using realistically generated catalogues, assuming various background cosmological models, for each mission. We also take into account the effect of early-time and late-time priors separately on the reconstruction, and hence on the Hubble constant ($H_0$). Our analysis reveals that GPs are quite robust in reconstructing the expansion history of the Universe within the observational window of the specific mission under study. We further confirm that both eLISA and ET would be able to constrain $H(z)$ and $H_0$ to a much higher precision than possible today, and also find out their possible role in addressing the Hubble tension for each model, on a case-by-case basis.
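A minimal sketch of what a non-parametric GP reconstruction of $H(z)$ involves is given below. It is not the authors' code or catalogue: the mock data are drawn from a flat $\Lambda$CDM model with $H_0 = 70$ and $\Omega_m = 0.3$ and 2% errors, the squared-exponential kernel and its fixed hyperparameters (`amplitude`, `length`) are choices made here for illustration, and a real analysis would fit or marginalise over those hyperparameters. All function and variable names are hypothetical.

```python
import numpy as np

def rbf_kernel(za, zb, amplitude, length):
    """Squared-exponential covariance between two redshift arrays."""
    dz = za[:, None] - zb[None, :]
    return amplitude ** 2 * np.exp(-0.5 * (dz / length) ** 2)

def gp_reconstruct(z_obs, h_obs, h_err, z_grid, amplitude, length):
    """Posterior mean and 1-sigma band of a GP with a constant prior mean."""
    prior_mean = h_obs.mean()
    # Data covariance: kernel plus independent measurement noise.
    k_obs = rbf_kernel(z_obs, z_obs, amplitude, length) + np.diag(h_err ** 2)
    k_cross = rbf_kernel(z_grid, z_obs, amplitude, length)
    k_grid = rbf_kernel(z_grid, z_grid, amplitude, length)
    chol = np.linalg.cholesky(k_obs)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, h_obs - prior_mean))
    mean = prior_mean + k_cross @ alpha
    v = np.linalg.solve(chol, k_cross.T)
    var = np.clip(np.diag(k_grid - v.T @ v), 0.0, None)
    return mean, np.sqrt(var)

# Mock H(z) measurements from an assumed fiducial model (illustration only).
rng = np.random.default_rng(0)
z_obs = np.linspace(0.05, 2.0, 30)
h_fid = 70.0 * np.sqrt(0.3 * (1 + z_obs) ** 3 + 0.7)
h_err = 0.02 * h_fid
h_obs = h_fid + rng.normal(0.0, h_err)

# Reconstruct on a grid that extends down to z = 0 to read off H0.
z_grid = np.linspace(0.0, 2.0, 101)
h_mean, h_sigma = gp_reconstruct(z_obs, h_obs, h_err, z_grid,
                                 amplitude=100.0, length=2.0)
print(f"Reconstructed H0 = {h_mean[0]:.1f} +/- {h_sigma[0]:.1f} km/s/Mpc")
```

The value at `z_grid[0]` plays the role of the reconstructed $H_0$; how tightly it is constrained depends on the low-redshift data coverage and on any prior imposed there.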
