Pulkit Singh

End-to-end Deep Prototype and Exemplar Models for Predicting Human Behavior

Jul 17, 2020

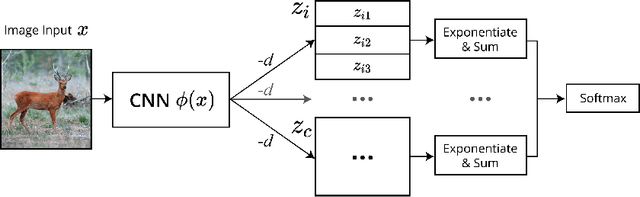

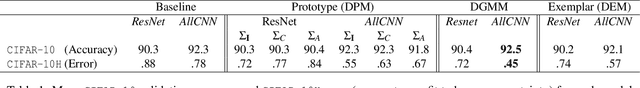

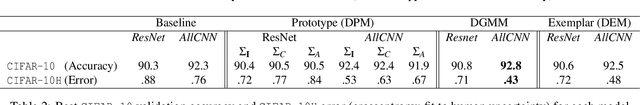

Abstract: Traditional models of category learning in psychology focus on representation at the category level as opposed to the stimulus level, even though the two are likely to interact. The stimulus representations employed in such models are either hand-designed by the experimenter, inferred circuitously from human judgments, or borrowed from pretrained deep neural networks that are themselves competing models of category learning. In this work, we extend classic prototype and exemplar models to learn both stimulus and category representations jointly from raw input. This new class of models can be parameterized by deep neural networks (DNNs) and trained end-to-end. Following their namesakes, we refer to them as Deep Prototype Models, Deep Exemplar Models, and Deep Gaussian Mixture Models. Compared to typical DNNs, we find that their cognitively inspired counterparts both provide better intrinsic fit to human behavior and improve ground-truth classification.
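To make the distinction between the two classic decision rules concrete, here is a minimal sketch of a prototype rule and an exemplar rule applied to an embedded stimulus. It is not the authors' implementation. The function names, the single linear layer standing in for a DNN encoder, the squared Euclidean distance, and the exponential similarity with sensitivity `c` are all assumptions chosen for illustration, and the end-to-end training of encoder and category parameters described in the abstract is omitted.

```python
import math

def embed(x, weights):
    """Hypothetical stand-in for a DNN encoder: one linear layer."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def sq_dist(a, b):
    """Squared Euclidean distance between two vectors (an assumed metric)."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def prototype_probs(z, prototypes):
    """Prototype rule: one point per category; nearer prototypes score higher."""
    return softmax([-sq_dist(z, p) for p in prototypes])

def exemplar_probs(z, exemplars, labels, n_classes, c=1.0):
    """Exemplar rule: sum similarity to every stored exemplar of each category."""
    sims = [0.0] * n_classes
    for e, y in zip(exemplars, labels):
        sims[y] += math.exp(-c * sq_dist(z, e))
    total = sum(sims)
    return [s / total for s in sims]

# Toy example: identity "encoder", two categories in a 2-D embedding space.
weights = [[1.0, 0.0], [0.0, 1.0]]
z = embed([0.1, 0.2], weights)

p_proto = prototype_probs(z, prototypes=[[0.0, 0.0], [2.0, 2.0]])
p_exem = exemplar_probs(
    z,
    exemplars=[[0.0, 0.1], [0.2, 0.0], [2.0, 2.1], [1.9, 2.0]],
    labels=[0, 0, 1, 1],
    n_classes=2,
)
```

In this toy setup both rules assign the stimulus to category 0, since it lies near that category's prototype and exemplars. The rules differ in what they store: the prototype rule keeps a single summary point per category, while the exemplar rule keeps every labeled example.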
