Pranav Vaidik Dhulipala

Visual Servoing of Unmanned Surface Vehicle from Small Tethered Unmanned Aerial Vehicle

Oct 09, 2017

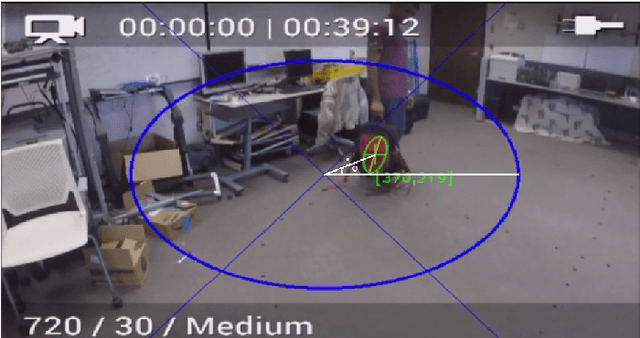

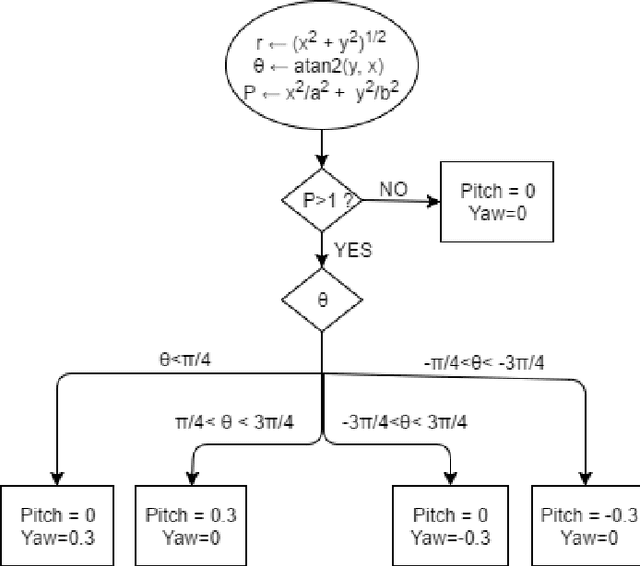

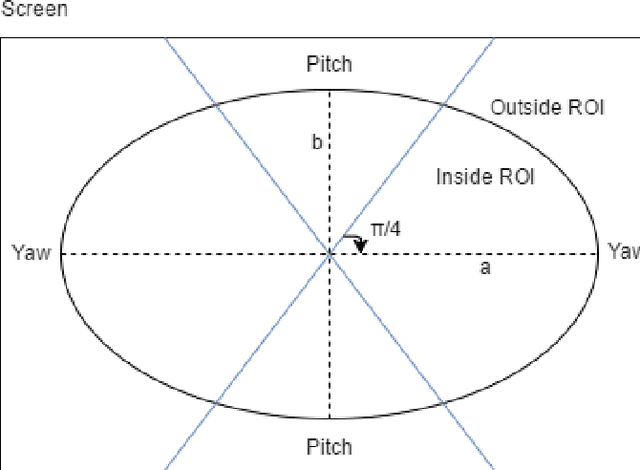

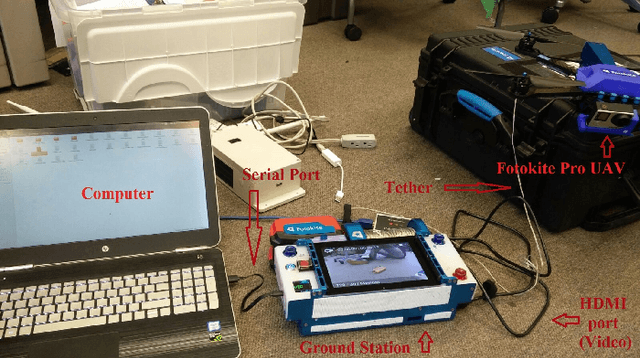

Abstract: This paper presents an algorithm and the implementation of a motor schema to aid the visual localization subsystem of the ongoing EMILY project at Texas A&M University. The EMILY project aims to team an Unmanned Surface Vehicle (USV) with an Unmanned Aerial Vehicle (UAV) to augment the search and rescue of marine casualties during an emergency response phase. The USV is designed to serve as a flotation device once it reaches the victims. A live video feed from the UAV is provided to the casualty responders, giving them a visual estimate of the USV's orientation and position to help with its navigation. One of the challenges of casualty response with a USV-UAV team is controlling the USV and tracking it at the same time. In this paper, we present an implemented solution that automates the UAV's camera movements to keep the USV in view at all times. The proposed motor schema uses the USV's coordinates from the visual localization subsystem to control the UAV's camera, tracking the USV with minimal camera movement so that the USV always remains in the camera's field of view.
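The abstract does not spell out the motor schema itself, so the following is only a minimal sketch of one way to keep a target in view with few camera movements: a dead-zone proportional rule. The names `CameraCommand` and `track_usv`, the normalized command range, and the `dead_zone` and `gain` parameters are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class CameraCommand:
    """Hypothetical normalized camera command, each axis in [-1, 1]."""
    pan: float   # positive = pan right
    tilt: float  # positive = tilt down (image y grows downward)


def _axis_command(pos: float, size: float, dead_zone: float, gain: float) -> float:
    """Command for one image axis given the target's pixel position."""
    # Offset of the target from the image center, normalized to [-1, 1].
    offset = (pos - size / 2.0) / (size / 2.0)
    # Inside the dead zone the camera stays still, which limits movement.
    if abs(offset) <= dead_zone:
        return 0.0
    # Act only on the part of the offset that lies outside the dead zone.
    excess = offset - dead_zone if offset > 0 else offset + dead_zone
    return max(-1.0, min(1.0, gain * excess))


def track_usv(usv_xy, image_size, dead_zone=0.5, gain=1.0) -> CameraCommand:
    """Map USV pixel coordinates (assumed to come from a localization
    step) to a pan/tilt command that nudges the USV back toward the
    central region of the frame."""
    x, y = usv_xy
    width, height = image_size
    return CameraCommand(
        pan=_axis_command(x, width, dead_zone, gain),
        tilt=_axis_command(y, height, dead_zone, gain),
    )
```

With a 640x480 frame, a USV at the image center produces no command, while a USV at the right edge produces a positive pan command. A wider `dead_zone` trades tighter centering for fewer camera movements.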
