Piyush Makhija

hinglishNorm -- A Corpus of Hindi-English Code Mixed Sentences for Text Normalization

Oct 18, 2020

Abstract: We present hinglishNorm -- a human-annotated corpus of Hindi-English code-mixed sentences for the text normalization task. Each sentence in the corpus is aligned to its corresponding human-annotated normalized form. To the best of our knowledge, no corpus of Hindi-English code-mixed sentences for text normalization is publicly available; our work is the first attempt in this direction. The corpus contains 13494 parallel segments. Further, we present baseline normalization results on this corpus. We obtain a Word Error Rate (WER) of 15.55, a BiLingual Evaluation Understudy (BLEU) score of 71.2, and a Metric for Evaluation of Translation with Explicit ORdering (METEOR) score of 0.50.
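For readers unfamiliar with the WER metric cited above, the following is a minimal sketch of how it is commonly computed: the word-level edit distance between a reference and a hypothesis, divided by the reference length. The function name `word_error_rate`, the whitespace tokenization, and the percentage scaling are assumptions made for illustration; the abstract does not say how the authors tokenized or scaled their score.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance over reference length, as a percentage.

    Assumes whitespace tokenization (an illustrative choice, not taken
    from the paper).
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the first i-1 reference
    # words and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr

    return 100.0 * prev[-1] / len(ref)
```

Under this definition, a hypothesis that gets one word wrong out of a four-word reference scores 25.0, and lower is better.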

User Generated Data: Achilles' Heel of BERT

Apr 21, 2020

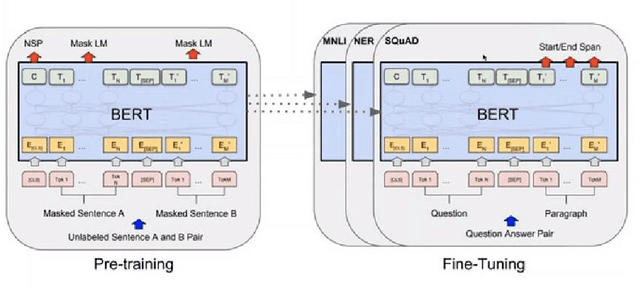

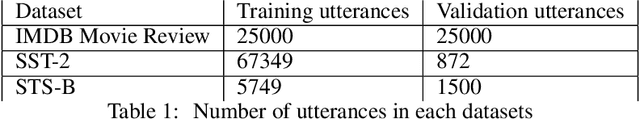

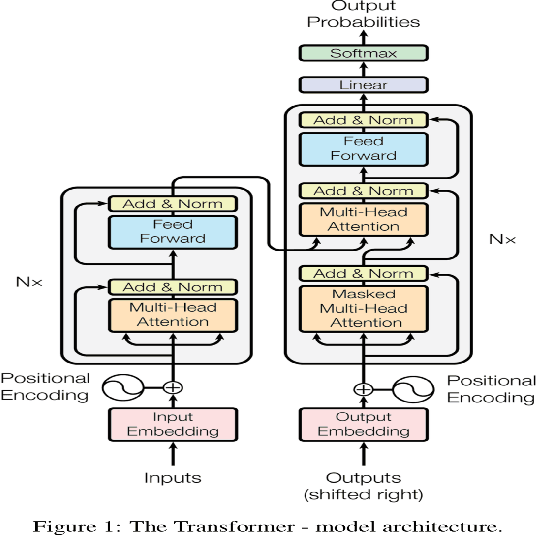

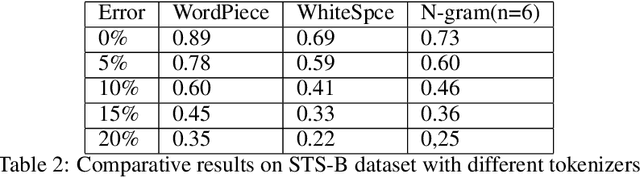

Abstract: Owing to BERT's phenomenal success on various NLP tasks and benchmark datasets, industry practitioners have started experimenting with BERT to build applications for industry use cases. Industrial NLP applications are known to deal with much noisier data than benchmark datasets. In this work, we systematically show that when the text data is noisy, BERT's performance degrades significantly. While this work is motivated by our business use case of building NLP applications for user-generated text data, which is known to be very noisy, our findings are applicable across various use cases in the industry. Specifically, we show that BERT's performance on fundamental tasks like sentiment analysis and textual similarity drops significantly as we introduce noise into the data in the form of spelling mistakes and typos. For our experiments, we use three well-known datasets (IMDB movie reviews, SST-2, and STS-B) to measure performance. Further, we identify the shortcomings in the BERT pipeline that are responsible for this drop in performance.
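As an illustration of the kind of noise described above, here is a minimal sketch that corrupts a chosen fraction of words by swapping two adjacent characters. This is not the authors' procedure, which the abstract does not specify; the function name `add_typos`, the `rate` and `seed` parameters, and the adjacent-swap rule are all hypothetical choices.

```python
import random


def add_typos(text: str, rate: float, seed: int = 0) -> str:
    """Swap two adjacent characters in roughly `rate` of the words.

    Hypothetical noise model for illustration only; single-character
    words are left untouched.
    """
    rng = random.Random(seed)  # fixed seed keeps the noise reproducible
    noisy_words = []
    for word in text.split():
        if len(word) > 1 and rng.random() < rate:
            i = rng.randrange(len(word) - 1)
            # Transpose the characters at positions i and i + 1.
            word = word[:i] + word[i + 1] + word[i] + word[i + 2:]
        noisy_words.append(word)
    return " ".join(noisy_words)
```

Evaluating a model on the same test set at increasing values of `rate` would give a degradation curve of the sort the abstract refers to.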
