Pierre-Alain Duc

Multi-scale gridded Gabor attention for cirrus segmentation

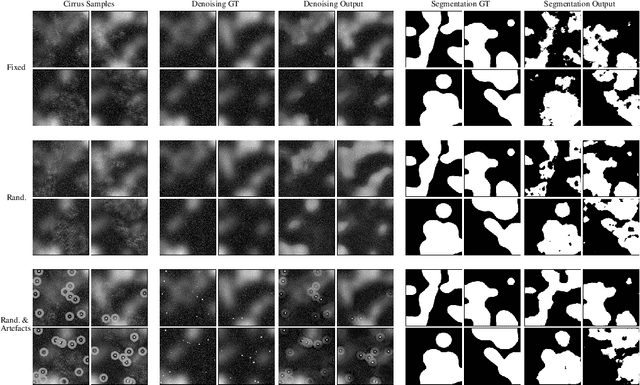

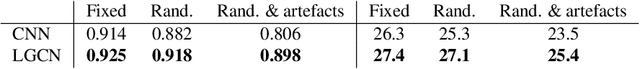

Jul 11, 2024

Abstract: In this paper, we address the challenge of segmenting global contaminants in large images. The precise delineation of such structures requires ample global context alongside understanding of textural patterns. CNNs specialise in the latter, though their ability to generate global features is limited. Attention measures long range dependencies in images, capturing global context, though at a large computational cost. We propose a gridded attention mechanism to address this limitation, greatly increasing efficiency by processing multi-scale features into smaller tiles. We also enhance the attention mechanism for increased sensitivity to texture orientation, by measuring correlations across features dependent on different orientations, in addition to channel and positional attention. We present results on a new dataset of astronomical images, where the task is segmenting large contaminating dust clouds.
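To illustrate why restricting attention to tiles reduces cost, here is a minimal NumPy sketch. It is not the authors' implementation: the function names `tiled_self_attention` and `attention_score_count` are hypothetical, queries, keys, and values are taken to be the raw features (no learned projections), and only a single scale is shown.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def tiled_self_attention(feats, tile):
    """Self-attention computed independently inside each tile x tile block.

    feats: array of shape (H, W, C); tile must divide both H and W.
    Assumption: identity projections (q = k = v = feats), for illustration only.
    """
    H, W, C = feats.shape
    assert H % tile == 0 and W % tile == 0
    gh, gw = H // tile, W // tile
    # Split into a grid of tiles: (gh*gw, tile*tile, C).
    t = feats.reshape(gh, tile, gw, tile, C).transpose(0, 2, 1, 3, 4)
    tokens = t.reshape(gh * gw, tile * tile, C)
    # Scaled dot-product attention within each tile.
    scores = tokens @ tokens.transpose(0, 2, 1) / np.sqrt(C)
    out = softmax(scores, axis=-1) @ tokens
    # Reassemble the tiles into the original spatial layout.
    out = out.reshape(gh, gw, tile, tile, C).transpose(0, 2, 1, 3, 4)
    return out.reshape(H, W, C)

def attention_score_count(H, W, tile):
    # Number of pairwise attention scores computed over the whole map.
    n_tiles = (H // tile) * (W // tile)
    return n_tiles * (tile * tile) ** 2

feats = np.random.default_rng(0).normal(size=(64, 64, 8))
out = tiled_self_attention(feats, tile=8)
full_cost = attention_score_count(64, 64, tile=64)   # one tile = global attention
tiled_cost = attention_score_count(64, 64, tile=8)
```

In this toy setting, an 8x8 tiling of a 64x64 map computes 64 times fewer pairwise scores than global attention. The price is that each position only attends within its own tile, which is presumably why the paper combines tiling with multi-scale features.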

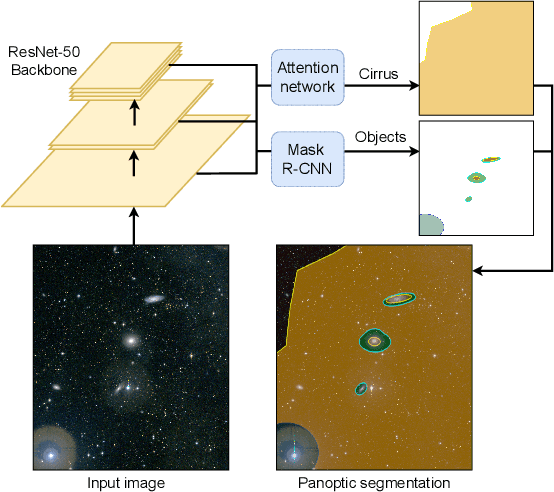

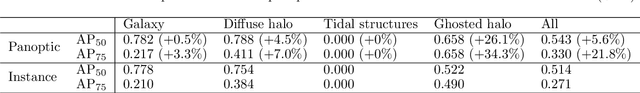

Panoptic Segmentation of Galactic Structures in LSB Images

Jul 10, 2024

Abstract: We explore the use of deep learning to localise galactic structures in low surface brightness (LSB) images. LSB imaging reveals many interesting structures, though these are frequently confused with galactic dust contamination, due to a strong local visual similarity. We propose a novel unified approach to multi-class segmentation of galactic structures and of extended amorphous image contaminants. Our panoptic segmentation model combines Mask R-CNN with a contaminant-specialised network and utilises an adaptive preprocessing layer to better capture the subtle features of LSB images. Further, a human-in-the-loop training scheme is employed to augment ground truth labels. These different approaches are evaluated in turn, and together greatly improve the detection of both galactic structures and contaminants in LSB images.

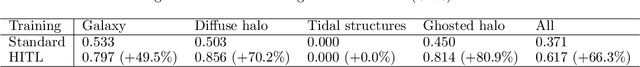

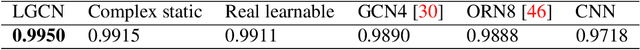

Learnable Gabor modulated complex-valued networks for orientation robustness

Nov 23, 2020

Abstract: Robustness to transformation is desirable in many computer vision tasks, given that input data often exhibits pose variance within classes. While translation invariance and equivariance are documented properties of CNNs, sensitivity to other transformations is typically encouraged through data augmentation. We investigate the modulation of complex-valued convolutional weights with learned Gabor filters to enable orientation robustness. With Gabor modulation, the designed network is able to generate orientation-dependent features free of interpolation with a single set of rotation-governing parameters. Moreover, by learning rotation parameters alongside traditional convolutional weights, the representation space is not constrained and may adapt to the exact input transformation. We present Learnable Convolutional Gabor Networks (LCGNs), which are parameter-efficient and offer increased model complexity while keeping backpropagation simple. We demonstrate that learned Gabor modulation utilising an end-to-end complex architecture enables rotation invariance and equivariance on MNIST and a new dataset of simulated images of galactic cirri.
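As a rough illustration of Gabor modulation, the NumPy sketch below multiplies one convolutional kernel elementwise by complex Gabor filters at several orientations. It is a sketch under stated assumptions, not the LCGN implementation: the names `complex_gabor` and `modulate` are hypothetical, the parameters `sigma`, `lam`, and `gamma` are arbitrary defaults, and the angles are fixed here rather than learned. Because each filter is evaluated analytically from its angle, no interpolation of a rotated kernel is needed.

```python
import numpy as np

def complex_gabor(size, theta, sigma=2.0, lam=4.0, gamma=1.0):
    """Complex Gabor filter on a size x size grid (size assumed odd).

    theta: orientation in radians; sigma: envelope width;
    lam: carrier wavelength; gamma: envelope aspect ratio.
    All defaults are illustrative assumptions.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate the coordinate frame by theta.
    xr = x * np.cos(theta) + y * np.sin(theta)
    yr = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
    carrier = np.exp(1j * 2 * np.pi * xr / lam)
    return envelope * carrier

def modulate(weight, thetas):
    """Modulate one (k, k) kernel by Gabor filters at each angle in thetas.

    Returns an array of shape (len(thetas), k, k): one orientation-dependent
    kernel per angle, all sharing the same underlying weights.
    """
    bank = np.stack([complex_gabor(weight.shape[-1], t) for t in thetas])
    return weight[None] * bank

weight = np.random.default_rng(0).normal(size=(5, 5)).astype(complex)
thetas = np.linspace(0, np.pi, 4, endpoint=False)
kernels = modulate(weight, thetas)
```

In a trainable version, `thetas` would be parameters updated by backpropagation alongside `weight`, which is the sense in which the abstract describes a single set of rotation-governing parameters.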
