Learnable Gabor modulated complex-valued networks for orientation robustness

Nov 23, 2020

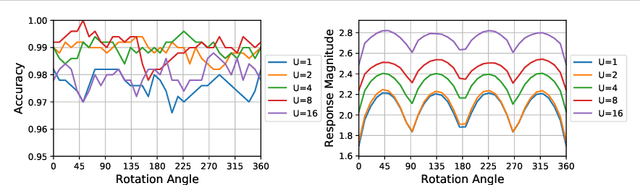

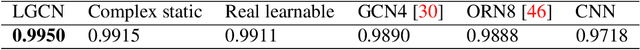

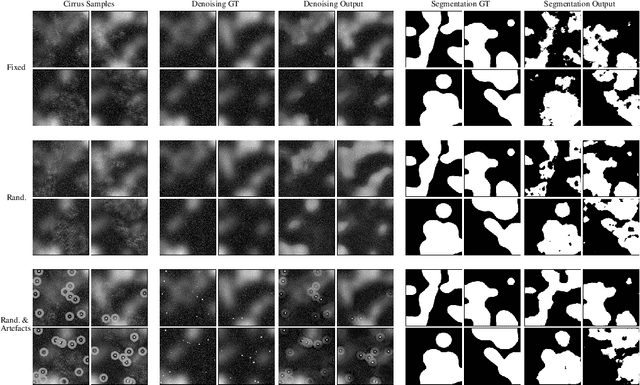

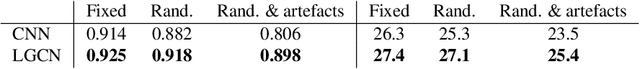

Robustness to transformation is desirable in many computer vision tasks, given that input data often exhibits pose variance within classes. While translation invariance and equivariance are documented properties of CNNs, sensitivity to other transformations is typically encouraged through data augmentation. We investigate the modulation of complex-valued convolutional weights with learned Gabor filters to enable orientation robustness. With Gabor modulation, the designed network is able to generate orientation-dependent features, free of interpolation, with a single set of rotation-governing parameters. Moreover, because the rotation parameters are learned alongside traditional convolutional weights, the representation space is not constrained and may adapt to the exact input transformation. We present Learnable Convolutional Gabor Networks (LCGNs), which are parameter-efficient and offer increased model complexity while keeping backpropagation simple. We demonstrate that learned Gabor modulation utilising an end-to-end complex architecture enables rotation invariance and equivariance on MNIST and a new dataset of simulated images of galactic cirri.
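To make the idea of Gabor modulation more concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a standard complex Gabor form (an isotropic Gaussian envelope multiplied by a complex sinusoid along the rotated axis) and elementwise modulation of a complex weight tensor. The names `gabor_bank` and `modulate`, the parameters `wavelength` and `sigma`, and the tensor layout are all hypothetical choices made for illustration. In a trained network the orientations in `thetas` would be learned parameters, whereas here they are fixed values.

```python
import numpy as np

def gabor_bank(thetas, wavelength, size=3, sigma=1.0):
    """Build complex Gabor filters, one per orientation (assumed form)."""
    half = (size - 1) / 2.0
    coords = np.linspace(-half, half, size)
    # ys varies along axis 0 (rows), xs along axis 1 (columns).
    ys, xs = np.meshgrid(coords, coords, indexing="ij")
    thetas = np.asarray(thetas, dtype=float)[:, None, None]
    # Coordinate along the filter's orientation; no image interpolation needed,
    # because the rotation is applied analytically to the sampling grid.
    x_rot = xs * np.cos(thetas) + ys * np.sin(thetas)
    envelope = np.exp(-(xs**2 + ys**2) / (2.0 * sigma**2))
    carrier = np.exp(1j * 2.0 * np.pi * x_rot / wavelength)
    return envelope * carrier  # shape: (n_orient, size, size)

def modulate(weights, bank):
    """Multiply complex weights (out, in, k, k) by each filter in the bank."""
    mod = bank[:, None, None, :, :] * weights[None]  # (n_orient, out, in, k, k)
    n, o, i, k, _ = mod.shape
    # Stack orientations into the output-channel axis.
    return mod.reshape(n * o, i, k, k)

# Hypothetical usage: 4 orientations, 2 output channels, 1 input channel.
rng = np.random.default_rng(0)
weights = rng.normal(size=(2, 1, 3, 3)) + 1j * rng.normal(size=(2, 1, 3, 3))
bank = gabor_bank(np.arange(4) * np.pi / 4, wavelength=3.0)
modulated = modulate(weights, bank)
```

Under these assumptions, a single weight tensor yields one orientation-dependent copy per angle, so the only extra parameters governing rotation are the angles themselves (plus the filter's scale parameters).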
