Pierre Ambrosini

Automated Detection of Cribriform Growth Patterns in Prostate Histology Images

Mar 23, 2020

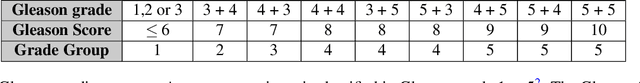

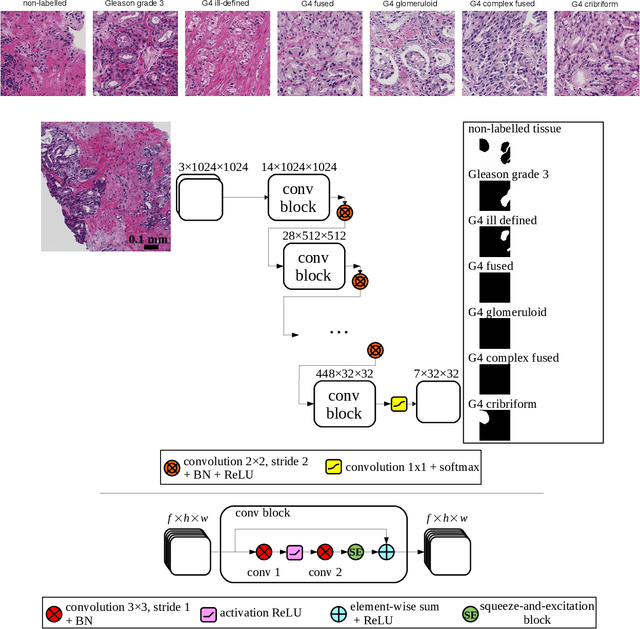

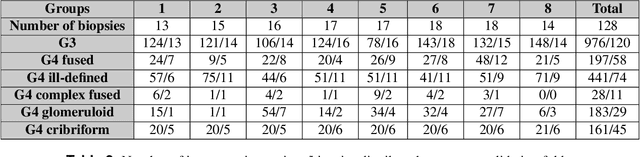

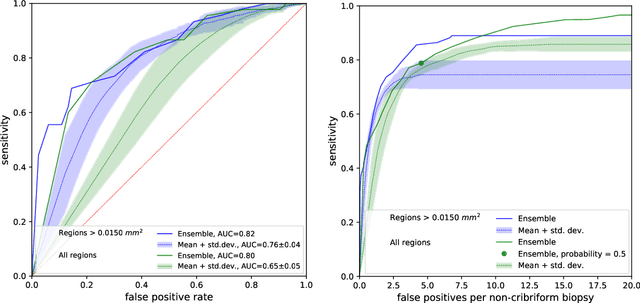

Abstract: Cribriform growth patterns in prostate carcinoma are associated with poor prognosis. We aimed to introduce a deep learning method to detect such patterns automatically. To do so, a convolutional neural network was trained to detect cribriform growth patterns on 128 prostate needle biopsies. Ensemble learning that took other tumor growth patterns into account during training was used to cope with heterogeneous and limited tumor tissue occurrences. ROC and FROC analyses were applied to assess network performance in detecting biopsies harboring a cribriform growth pattern. The ROC analysis yielded an area under the curve of up to 0.82. The FROC analysis demonstrated a sensitivity of 0.9 for regions larger than 0.0150 mm², with 6.8 false positives on average. To benchmark method performance against intra-observer annotation variability, false positive and false negative detections were re-evaluated by the pathologists. Pathologists considered 9% of the false positive regions as cribriform and 11% as possibly cribriform; 44% of the false negative regions were not annotated as cribriform. As a final experiment, the network was also applied to a dataset of 60 biopsy regions annotated by 23 pathologists. With the cut-off that reached the highest sensitivity, all images annotated as cribriform by at least 7 of the 23 pathologists were detected as cribriform by the network. In conclusion, the proposed deep learning method has high sensitivity for detecting cribriform growth patterns at the expense of a limited number of false positives. It can detect cribriform regions that are labelled as such by at least a minority of pathologists. Therefore, it could assist clinical decision making by suggesting suspicious regions.
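For readers unfamiliar with the ROC metric quoted in this abstract, the following is a minimal sketch of how an area under the ROC curve can be computed from per-biopsy scores. It is not the authors' evaluation code: the function name `roc_auc` and the example labels and scores are hypothetical, and the sketch uses the rank-based (Mann-Whitney) formulation, in which the AUC equals the probability that a randomly chosen positive case scores higher than a randomly chosen negative one.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    labels: 1 for a positive case (e.g. biopsy with cribriform pattern), 0 otherwise.
    scores: detector output per case, higher meaning more suspicious.
    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical example data, not taken from the paper.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.7, 0.6, 0.4, 0.3, 0.1]
auc = roc_auc(labels, scores)
print(f"AUC = {auc:.3f}")
```

The pairwise loop is quadratic in the number of cases, which is acceptable for a dataset of a few hundred biopsies; a sort-based ranking would be the usual choice for larger sets.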

Fully Automatic and Real-Time Catheter Segmentation in X-Ray Fluoroscopy

Jul 17, 2017

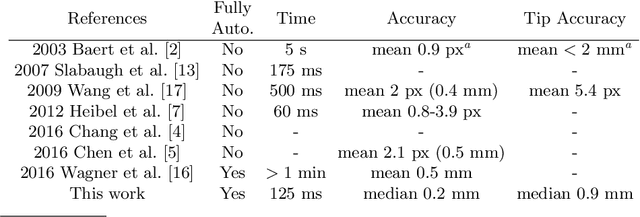

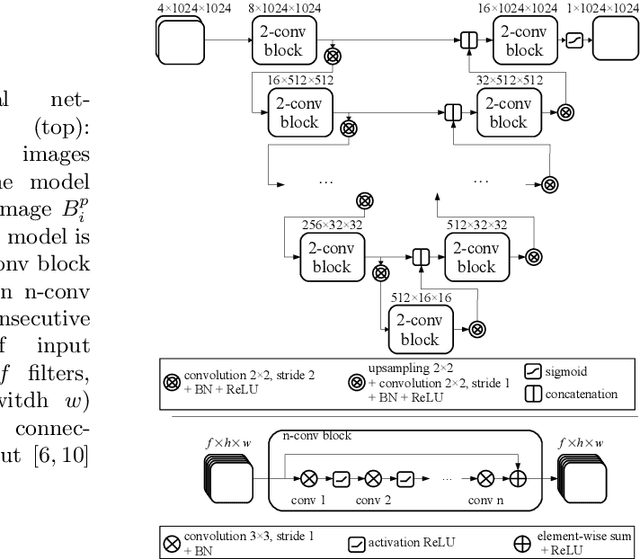

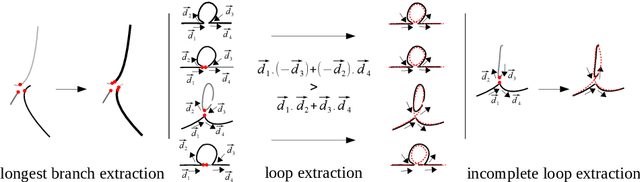

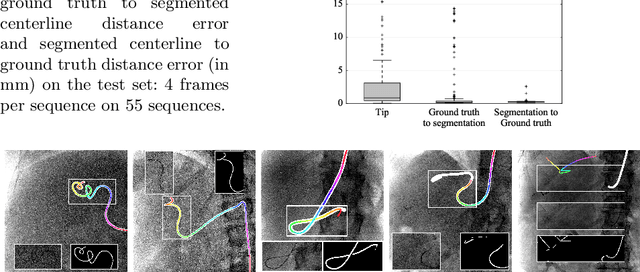

Abstract: Augmenting X-ray imaging with a 3D roadmap to improve guidance is a common strategy. Such approaches benefit from automated analysis of the X-ray images, such as the automatic detection and tracking of instruments. In this paper, we propose a real-time method to segment the catheter and guidewire in 2D X-ray fluoroscopic sequences. The method is based on deep convolutional neural networks. The network takes as input the current image and the three previous ones, and segments the catheter and guidewire in the current image. Subsequently, a centerline model of the catheter is constructed from the segmented image. A small set of annotated data combined with data augmentation is used to train the network. We trained the method on images from 182 X-ray sequences from 23 different interventions. On a testing set with images from 55 X-ray sequences from 5 other interventions, a median centerline distance error of 0.2 mm and a median tip distance error of 0.9 mm were obtained. The segmentation of the instruments in 2D X-ray sequences is performed in real time and fully automatically.
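The input described in this abstract (the current image plus the three previous ones) can be illustrated with a small sketch of how such a four-frame window might be assembled from a sequence. This is an assumption-laden illustration, not the authors' pipeline: the function name `frame_window` is hypothetical, frames are treated as opaque objects, and the abstract does not say how the first frames of a sequence are handled, so the sketch simply rejects them.

```python
def frame_window(sequence, t, n_previous=3):
    """Return the frames fed to the network for time step t.

    sequence: list of frames in acquisition order.
    t: index of the current frame.
    n_previous: number of earlier frames to include (3 in the abstract).
    The result is ordered oldest first, so the current frame is last.
    """
    if not 0 <= t < len(sequence):
        raise IndexError("t is outside the sequence")
    if t < n_previous:
        # Assumption: no padding strategy is given in the abstract,
        # so windows without enough history are rejected here.
        raise ValueError("not enough previous frames for this time step")
    return sequence[t - n_previous : t + 1]


# Hypothetical sequence of six frames, represented by labels for brevity.
sequence = ["f0", "f1", "f2", "f3", "f4", "f5"]
window = frame_window(sequence, t=4)
print(window)
```

In practice the four frames would typically be stacked along a channel axis of an image tensor before being passed to the network; that detail is likewise an assumption and is omitted here.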
