Phillip Stanley-Marbell

Distributional Computational Graphs: Error Bounds

Jan 22, 2026

Abstract: We study a general framework of distributional computational graphs: computational graphs whose inputs are probability distributions rather than point values. We analyze the discretization error that arises when these graphs are evaluated using finite approximations of continuous probability distributions. Such an approximation might result from representing a continuous real-valued distribution with a discrete representation, from constructing an empirical distribution from samples, or from the output of another distributional computational graph. We establish non-asymptotic error bounds in terms of the Wasserstein-1 distance, without imposing structural assumptions on the computational graph.
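To make the quantities concrete (this is not the paper's bound), the Wasserstein-1 distance between two one-dimensional empirical distributions with the same number of samples reduces to the mean absolute difference of their sorted samples. The sketch below uses it to measure the error introduced by a hypothetical discretization (snapping Gaussian samples to a 0.5-wide grid) and checks the standard fact that an L-Lipschitz graph node can amplify W1 error by at most a factor of L. The grid, the node function `node`, and the sample size are illustrative assumptions.

```python
import random
from typing import Sequence


def wasserstein1_empirical(xs: Sequence[float], ys: Sequence[float]) -> float:
    """W1 between two 1-D empirical distributions with equal sample counts.

    In one dimension the optimal coupling pairs order statistics, so
    W1 = (1/n) * sum_i |x_(i) - y_(i)|.
    """
    if len(xs) != len(ys) or len(xs) == 0:
        raise ValueError("need two non-empty samples of equal size")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


def node(x: float) -> float:
    # Hypothetical graph node; 3|x| - 1 is 3-Lipschitz (slope is +/-3 everywhere).
    return 3.0 * abs(x) - 1.0


rng = random.Random(0)
fine = [rng.gauss(0.0, 1.0) for _ in range(10_000)]  # stand-in for the continuous input
coarse = [round(2.0 * x) / 2.0 for x in fine]          # hypothetical discretization to a 0.5 grid

err_in = wasserstein1_empirical(fine, coarse)
err_out = wasserstein1_empirical([node(x) for x in fine], [node(x) for x in coarse])
print(f"input W1 = {err_in:.4f}, output W1 = {err_out:.4f}, bound 3*W1 = {3 * err_in:.4f}")
```

Because pairing each sample with its own rounded value already moves it by at most 0.25, and the sorted pairing is optimal, the input distance cannot exceed 0.25.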

Machine Learning for Sensor Transducer Conversion Routines

Aug 23, 2021

Abstract: Sensors with digital outputs require software conversion routines to transform unitless ADC samples into physical quantities with the correct units. These conversion routines are computationally expensive relative to the limited computational resources of low-power embedded systems. This article presents a set of machine learning methods that learn new, less complex conversion routines for the BME680 environmental sensor without sacrificing accuracy. We present a Pareto analysis of the tradeoff between accuracy and computational overhead for the models, and we present models that reduce the computational overhead of the existing industry-standard conversion routines for temperature, pressure, and humidity by 62%, 71%, and 18%, respectively. The corresponding RMS errors for these methods are 0.0114 °C, 0.0280 kPa, and 0.0337%. These results show that machine learning can produce conversion routines with reduced computational overhead while maintaining good accuracy.
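A Pareto analysis of this kind keeps only the models that no other model beats on both accuracy and cost. A minimal sketch of that filter follows; the `Candidate` type, the use of an operation count as the cost, and the model names and numbers are hypothetical, not results from the article.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    name: str
    rms_error: float  # lower is better
    cost: float       # e.g. operations per conversion (hypothetical metric); lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if `a` is no worse than `b` on both axes and strictly better on one."""
    return (a.rms_error <= b.rms_error and a.cost <= b.cost
            and (a.rms_error < b.rms_error or a.cost < b.cost))


def pareto_front(cands: List[Candidate]) -> List[Candidate]:
    """Return the non-dominated candidates, sorted by increasing cost."""
    front = [c for c in cands if not any(dominates(o, c) for o in cands)]
    return sorted(front, key=lambda c: c.cost)


models = [
    Candidate("poly3", 0.010, 120.0),
    Candidate("poly2", 0.012, 60.0),
    Candidate("tree", 0.015, 80.0),    # dominated by poly2: worse error and higher cost
    Candidate("linear", 0.030, 20.0),
]
print([c.name for c in pareto_front(models)])  # ['linear', 'poly2', 'poly3']
```

Each model left on the front is a legitimate choice; picking among them depends on how much error a given application can tolerate.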

On-Sensor Inference for Uncertainty Reduction

Dec 27, 2020

Abstract: This article presents an algorithm for reducing the measurement uncertainty of one quantity when given measurements of two quantities with correlated noise. The algorithm assumes that the measurements of both physical quantities follow Gaussian distributions, and the article provides concrete justification that this assumption is valid. When applied to humidity sensors, the algorithm reduces the uncertainty of humidity estimates by using correlated temperature and humidity measurements. In an experimental evaluation, the algorithm achieves an uncertainty reduction of 4.2%. The algorithm incurs an execution-time overhead of 1.4% compared with the minimal algorithm required to measure and calculate the uncertainty. Detailed instruction-level emulation of a C-language implementation compiled for the RISC-V architecture shows that the uncertainty-reduction program requires 0.05% more instructions per iteration than the minimum operations required to calculate the uncertainty.
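The abstract does not spell out the algorithm, but the standard identity that makes correlated Gaussian quantities useful is bivariate Gaussian conditioning: if humidity H and temperature T are jointly Gaussian with correlation ρ, then knowing T shrinks the standard deviation of H by a factor of √(1 − ρ²). The sketch below computes that conditional mean and standard deviation; the function name and the example parameters are illustrative assumptions, not the article's method or data.

```python
import math
from typing import Tuple


def condition_on_temperature(mu_h: float, sigma_h: float,
                             mu_t: float, sigma_t: float,
                             rho: float, t_obs: float) -> Tuple[float, float]:
    """Mean and standard deviation of H given T = t_obs for a bivariate Gaussian (H, T)."""
    if not -1.0 < rho < 1.0:
        raise ValueError("correlation must lie strictly between -1 and 1")
    mean = mu_h + rho * (sigma_h / sigma_t) * (t_obs - mu_t)
    std = sigma_h * math.sqrt(1.0 - rho * rho)  # independent of the observed value t_obs
    return mean, std


# Hypothetical example: 2 %RH humidity spread, 0.5 degC temperature spread, correlation 0.6.
mean, std = condition_on_temperature(50.0, 2.0, 25.0, 0.5, 0.6, 25.5)
print(f"H | T=25.5: mean = {mean:.2f}, std = {std:.2f}")  # mean = 51.20, std = 1.60
```

The size of the reduction depends only on |ρ|, so weakly correlated measurements yield only modest gains.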

Inferring Human Observer Spectral Sensitivities from Video Game Data

Jul 01, 2020

Abstract: As modern displays use primaries with increasingly narrow bandwidths, observer metameric breakdown is becoming a significant factor. This can lead to discrepancies in perceived color between different observers. If the spectral sensitivity of a user's eyes could be easily measured, next-generation displays could adjust their content to ensure that colors are perceived as intended by a given observer. We present a mathematical framework for calculating the spectral sensitivities of a given human observer using a color matching experiment that could be performed on a mobile phone display. This removes the need for expensive in-person experiments and allows system designers to easily calibrate displays to match the user's vision in the wild. We show how to use sRGB pixel values along with a simple display model to calculate plausible color matching functions (CMFs) for the users of a given display device (e.g., a mobile phone). We evaluate the effect of different regularization functions on the shape of the calculated CMFs, and the results show that a sum-of-squares regularizer is able to predict smooth and qualitatively realistic CMFs.
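Under a linear display model, each color match gives a linear equation in the unknown CMF values sampled at a few wavelengths, so recovering a CMF can be posed as regularized least squares: minimize ‖Ax − b‖² + λ‖Rx‖². The sketch below solves the normal equations (AᵀA + λRᵀR)x = Aᵀb by Gaussian elimination. The linear formulation, the second-difference choice of R (one possible sum-of-squares penalty that favors smooth curves), λ, and the example matrices are assumptions; the abstract does not give the article's exact model or regularizer.

```python
from typing import List

Matrix = List[List[float]]


def regularized_lstsq(A: Matrix, b: List[float], R: Matrix, lam: float) -> List[float]:
    """Minimize ||A x - b||^2 + lam * ||R x||^2 via the normal equations."""
    n = len(A[0])
    # Build M = A^T A + lam * R^T R and v = A^T b.
    M = [[sum(row[i] * row[j] for row in A) + lam * sum(r[i] * r[j] for r in R)
          for j in range(n)] for i in range(n)]
    v = [sum(row[i] * bi for row, bi in zip(A, b)) for i in range(n)]
    # Gaussian elimination with partial pivoting on the augmented system [M | v].
    aug = [M[i] + [v[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(aug[k][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("system is singular; increase lam or add constraints")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (aug[i][n] - sum(aug[i][j] * x[j] for j in range(i + 1, n))) / aug[i][i]
    return x


def second_difference(n: int) -> Matrix:
    """Rows x[k] - 2 x[k+1] + x[k+2]; penalizing their squares favors smooth curves."""
    return [[1.0 if j in (k, k + 2) else -2.0 if j == k + 1 else 0.0
             for j in range(n)] for k in range(n - 2)]


# Hypothetical underdetermined example: 5 wavelength samples, 3 linear match constraints.
A = [[1.0, 1.0, 1.0, 1.0, 1.0],
     [1.0, 0.0, 0.0, 0.0, 0.0],
     [0.0, 0.0, 0.0, 0.0, 1.0]]
b = [2.0, 0.1, 0.1]
x = regularized_lstsq(A, b, second_difference(5), lam=1e-2)
print([round(v, 3) for v in x])
```

With only three constraints on five unknowns, the data alone do not determine x; the penalty selects the smoothest curve among those that nearly satisfy the matches.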

A System for Generating Non-Uniform Random Variates using Graphene Field-Effect Transistors

Apr 28, 2020

Abstract: We introduce a new method for hardware non-uniform random number generation based on the transfer characteristics of graphene field-effect transistors (GFETs); the method requires as few as two transistors and a resistor (or transimpedance amplifier). The method could be integrated into a custom computing system to provide samples from arbitrary univariate distributions. We also demonstrate the use of wavelet decomposition of the target distribution to determine GFET bias voltages in a multi-GFET array. We implement the method by fabricating multiple GFETs and experimentally validating that their transfer characteristics exhibit the nonlinearity on which our method depends. We use the characterization data in simulations of a proposed architecture for generating samples from dynamically selectable non-uniform probability distributions. Using a combination of experimental measurements of GFETs under a range of biasing conditions and simulation of the GFET-based non-uniform random variate generator architecture, we demonstrate a speedup of Monte Carlo integration of up to 2×. This speedup assumes that the analog-to-digital converters reading the outputs from the circuit can produce samples in the same amount of time that it takes to perform memory accesses.
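For comparison, the conventional software route from uniform variates to samples of an arbitrary univariate distribution is inverse-transform sampling: tabulate the target CDF and map each uniform variate through its inverse. The GFET method instead obtains the nonlinear mapping from the devices' transfer characteristics. In the sketch below, the histogram-shaped target and the function name `histogram_sampler` are illustrative assumptions.

```python
import bisect
import random
from typing import Callable, List


def histogram_sampler(edges: List[float], weights: List[float],
                      rng: random.Random) -> Callable[[], float]:
    """Inverse-transform sampler for a piecewise-constant density on the given bin edges."""
    if len(edges) != len(weights) + 1 or any(w < 0 for w in weights) or sum(weights) <= 0:
        raise ValueError("need len(edges) == len(weights) + 1 and non-negative weights")
    total = sum(weights)
    cdf, acc = [], 0.0
    for w in weights:
        acc += w / total
        cdf.append(acc)
    cdf[-1] = 1.0  # guard against round-off so every u in [0, 1) falls in some bin

    def sample() -> float:
        u = rng.random()                 # uniform variate in [0, 1)
        i = bisect.bisect_right(cdf, u)  # first bin whose cumulative mass exceeds u
        lo = cdf[i - 1] if i > 0 else 0.0
        frac = (u - lo) / (cdf[i] - lo)  # position of u within that bin's CDF step
        return edges[i] + frac * (edges[i + 1] - edges[i])

    return sample


# Hypothetical target: three times as much mass on [0, 1) as on [1, 2).
draw = histogram_sampler([0.0, 1.0, 2.0], [3.0, 1.0], random.Random(42))
xs = [draw() for _ in range(20_000)]
print(sum(x < 1.0 for x in xs) / len(xs))  # close to 0.75
```

Because `bisect_right` always returns a bin whose cumulative mass strictly exceeds u, zero-weight bins are skipped and the division never hits zero.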

Efficient Programmable Random Variate Generation Accelerator from Sensor Noise

Jan 10, 2020

Abstract: We introduce a method for non-uniform random number generation based on sampling a physical process in a controlled environment. We demonstrate a proof-of-concept implementation of the method that reduces the error of Monte Carlo integration of a univariate Gaussian by a factor of 1068 while doubling the speed of the Monte Carlo simulation. We show that the supply voltage and temperature of the physical process must be controlled to prevent the mean and standard deviation of the random number generator from drifting.
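The sensitivity to drift can be seen in a plain Monte Carlo estimate of a Gaussian expectation: if the generator's standard deviation drifts, the estimate converges to the wrong value no matter how many samples are drawn. In this sketch, Python's software Gaussian generator stands in for a physical noise source, and the 5% drift, the integrand, and the sample count are hypothetical.

```python
import math
import random
from typing import Callable, Tuple


def mc_estimate(g: Callable[[float], float], sampler: Callable[[], float],
                n: int) -> Tuple[float, float]:
    """Plain Monte Carlo estimate of E[g(X)] and its standard error."""
    vals = [g(sampler()) for _ in range(n)]
    mean = sum(vals) / n
    var = sum((v - mean) ** 2 for v in vals) / (n - 1)
    return mean, math.sqrt(var / n)


def square(x: float) -> float:
    return x * x  # E[X^2] = sigma^2 when X ~ N(0, sigma^2)


rng = random.Random(1)
ideal = mc_estimate(square, lambda: rng.gauss(0.0, 1.0), 50_000)     # target value 1.0
drifted = mc_estimate(square, lambda: rng.gauss(0.0, 1.05), 50_000)  # converges to 1.1025
print(f"ideal:   {ideal[0]:.4f} +/- {ideal[1]:.4f}")
print(f"drifted: {drifted[0]:.4f} +/- {drifted[1]:.4f}")
```

Drawing more samples shrinks the standard error but leaves the bias from a 5% drift in σ untouched, which is why the source's operating conditions must be held fixed.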

A Hardware Platform for Efficient Multi-Modal Sensing with Adaptive Approximation

Apr 06, 2018

Abstract: We present Warp, a hardware platform to support research in approximate computing, sensor energy optimization, and energy-scavenged systems. Warp incorporates 11 state-of-the-art sensor integrated circuits, computation, and an energy-scavenged power supply, all within a miniature system measuring just 3.6 cm × 3.3 cm × 0.5 cm. Together, Warp's sensor integrated circuits contain 21 sensors with a range of precisions and accuracies for measuring eight sensing modalities: acceleration, angular rate, magnetic flux density (compass heading), humidity, atmospheric pressure (elevation), infrared radiation, ambient temperature, and color. Warp uses a combination of analog circuits and digital control to facilitate further tradeoffs between sensor and communication accuracy, energy efficiency, and performance. This article presents the design of Warp and an evaluation of our hardware implementation. The results show how Warp's design enables tradeoffs of performance and energy efficiency against accuracy.

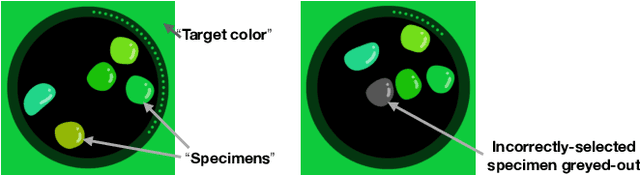

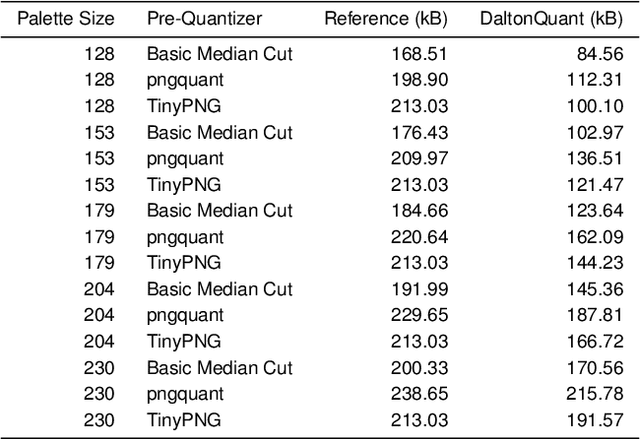

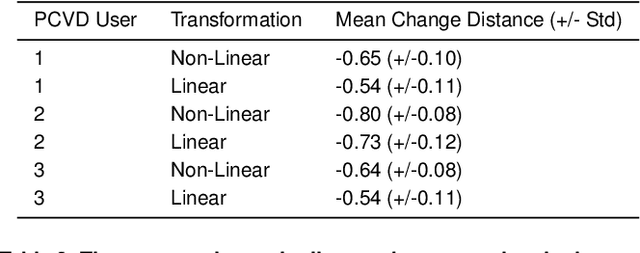

Incremental Color Quantization for Color-Vision-Deficient Observers Using Mobile Gaming Data

Mar 22, 2018

Abstract: The sizes of compressed images depend on their spatial resolution (number of pixels) and on their color resolution (number of color quantization levels). We introduce DaltonQuant, a new color quantization technique for image compression that cloud services can apply to images destined for a specific user with known color vision deficiencies. DaltonQuant improves compression in a user-specific but reversible manner, thereby improving network bandwidth and data storage efficiency for that user. DaltonQuant quantizes image data to account for user-specific color perception anomalies, using a new method for incremental color quantization based on a large corpus of color vision acuity data obtained from a popular mobile game. Servers that host images can revert DaltonQuant's image requantization and compression when those images must be transmitted to a different user, making the technique practical to deploy on a large scale. We evaluate DaltonQuant's compression performance on the Kodak PC reference image set and show that it improves compression by an additional 22% to 29% over the state-of-the-art compressors TinyPNG and pngquant.
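The abstract does not detail DaltonQuant's algorithm. As a generic illustration of incremental color quantization only, the sketch below repeatedly merges the two closest palette colors under an observer-specific distance until no pair is closer than a threshold. The opponent-axis distance, the `rg_weight` parameter (which down-weights red-green differences to mimic reduced discrimination), the threshold, and averaging merged colors are all hypothetical stand-ins for the acuity data and method the article uses.

```python
import itertools
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]


def observer_distance(a: RGB, b: RGB, rg_weight: float = 0.2) -> float:
    """Hypothetical observer-specific distance on simple opponent axes.

    A small rg_weight models an observer who discriminates red-green
    differences poorly; 1.0 weights all three axes equally.
    """
    dr, dg, db = (a[i] - b[i] for i in range(3))
    lum = (dr + dg + db) / 3.0
    rg = dr - dg
    yb = (dr + dg) / 2.0 - db
    return math.sqrt(lum ** 2 + (rg_weight * rg) ** 2 + yb ** 2)


def merge_palette(palette: List[RGB], threshold: float, rg_weight: float = 0.2) -> List[RGB]:
    """Greedily merge the closest pair of colors until all pairs are >= threshold apart."""
    colors = list(palette)
    while len(colors) > 1:
        i, j = min(itertools.combinations(range(len(colors)), 2),
                   key=lambda p: observer_distance(colors[p[0]], colors[p[1]], rg_weight))
        if observer_distance(colors[i], colors[j], rg_weight) >= threshold:
            break
        merged = tuple((colors[i][k] + colors[j][k]) / 2.0 for k in range(3))
        colors = [c for k, c in enumerate(colors) if k not in (i, j)] + [merged]
    return colors


palette = [(200.0, 40.0, 40.0), (40.0, 200.0, 40.0), (40.0, 40.0, 200.0)]
print(len(merge_palette(palette, threshold=100.0)))                 # 2: red and green merge
print(len(merge_palette(palette, threshold=100.0, rg_weight=1.0)))  # 3: no merge
```

The same palette collapses further for the simulated observer than for a typical one, which is the kind of user-specific reduction in color resolution that lets a compressor spend fewer bits.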
