Philippe Nadeau

Robustness Assessment of Static Structures for Efficient Object Handling

Nov 14, 2024

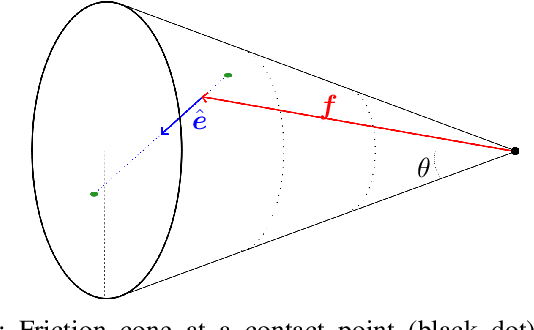

Abstract: This work establishes a solution to the problem of assessing the robustness of multi-object assemblies to external forces. Our physically-grounded approach handles arbitrary static structures made from rigid objects of any shape and mass distribution without relying on heuristics or approximations. The result is a method that provides a foundation for autonomous robot decision-making when interacting with objects in frictional contact. Our strategy decouples slipping from toppling, enabling independent assessments of these two phenomena, with a shared robustness representation being key to combining the results into an accurate robustness assessment. Our algorithms can be used by motion planners to produce efficient assembly transportation plans, and by object placement planners to select poses that improve the strength of an assembly. Compared to prior work, our approach is more generally applicable than commonly used heuristics and more efficient than dynamics simulations.

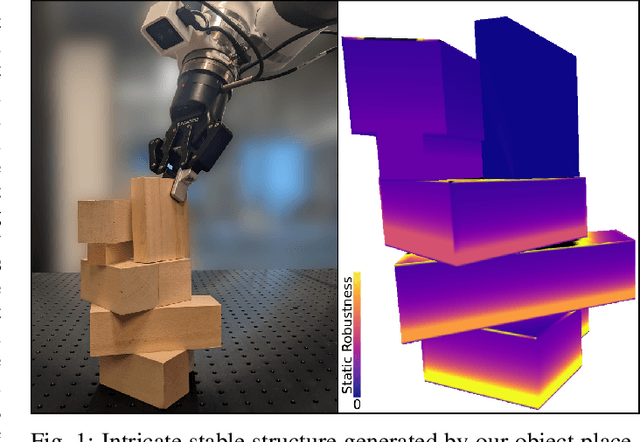

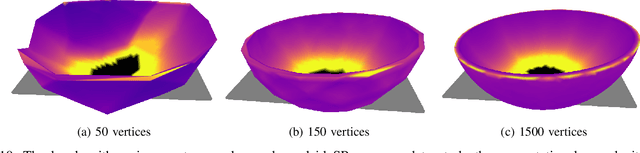

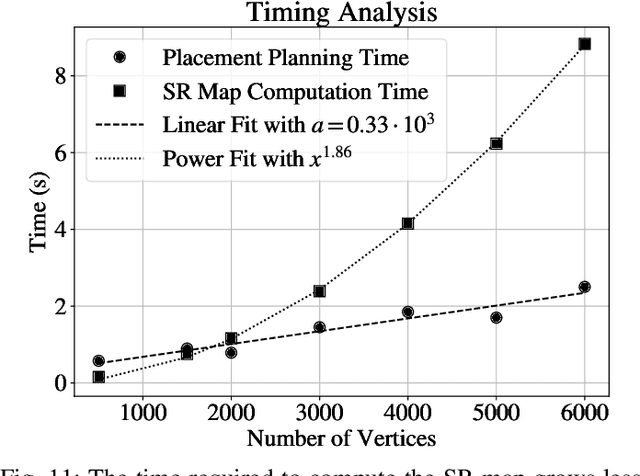

Stable Object Placement Planning From Contact Point Robustness

Oct 16, 2024

Abstract: We introduce a planner designed to guide robot manipulators in stably placing objects within intricate scenes. Our proposed method reverses the traditional approach to object placement: rather than sampling poses, identifying contact points, and evaluating pose quality, our planner selects contact points first and then determines a placement pose that solicits the selected points. Our algorithm facilitates stability-aware object placement planning, imposing no restrictions on object shape, convexity, or mass density homogeneity, while avoiding combinatorial computational complexity. Our proposed stability heuristic enables our planner to find a solution about 20 times faster than the same algorithm without the heuristic, and eight times faster than a state-of-the-art method that uses the traditional sample-and-evaluate approach. Our proposed planner is also more successful in finding stable placements than the five other benchmarked algorithms. Derived from first principles and validated in ten real robot experiments, our planner offers a general and scalable method to tackle the problem of object placement planning with rigid objects.

A Standard Rigid Transformation Notation Convention for Robotics Research

May 12, 2024

Abstract: Notation conventions for rigid transformations are as diverse as they are fundamental to the field of robotics. A well-defined convention that is practical, consistent and unambiguous is essential for the clear communication of ideas and to foster collaboration between researchers. This work presents an analysis of conventions used in state-of-the-art robotics research, defines a new notation convention, and provides software packages to facilitate its use. To shed some light on the current state of notation conventions in robotics research, this work presents an analysis of the ICRA 2023 proceedings, focusing on the notation conventions used for rigid transformations. A total of 1655 papers were inspected to identify the convention used, and key insights about trends and usage preferences are derived. Based on this analysis, a new notation convention called RIGID is defined, which complies with the "ISO 80000 Standard on Quantities and Units". The RIGID convention is designed to be concise yet unambiguous and easy to use. Additionally, this work introduces a LaTeX package that facilitates the use of the RIGID notation in manuscript preparation through simple customizable commands that can be easily translated into variable names for software development.

Automated Continuous Force-Torque Sensor Bias Estimation

Mar 02, 2024

Abstract: Six-axis force-torque sensors are commonly attached to the wrist of serial robots to measure the external forces and torques acting on the robot's end-effector. These measurements are used for load identification, contact detection, and human-robot interaction, among other applications. Typically, the measurements obtained from the force-torque sensor are more accurate than estimates computed from joint torque readings, as the former are independent of the robot's dynamic and kinematic models. However, the force-torque sensor measurements are affected by a bias that drifts over time, caused by the compounding effects of temperature changes, mechanical stresses, and other factors. In this work, we present a pipeline that continuously estimates the bias and the drift of the bias of a force-torque sensor attached to the wrist of a robot. The first component of the pipeline is a Kalman filter that estimates the kinematic state (position, velocity, and acceleration) of the robot's joints. The second component is a kinematic model that maps the joint-space kinematics to the task-space kinematics of the force-torque sensor. Finally, the third component is a Kalman filter that estimates the bias and the drift of the bias of the force-torque sensor, assuming that the inertial parameters of the gripper attached to the distal end of the force-torque sensor are known with certainty.

The Sum of Its Parts: Visual Part Segmentation for Inertial Parameter Identification of Manipulated Objects

Feb 13, 2023

Abstract: To operate safely and efficiently alongside human workers, collaborative robots (cobots) require the ability to quickly understand the dynamics of manipulated objects. However, traditional methods for estimating the full set of inertial parameters rely on motions that are necessarily fast and unsafe (to achieve a sufficient signal-to-noise ratio). In this work, we take an alternative approach: by combining visual and force-torque measurements, we develop an inertial parameter identification algorithm that requires slow or 'stop-and-go' motions only, and hence is ideally tailored for use around humans. Our technique, called Homogeneous Part Segmentation (HPS), leverages the observation that man-made objects are often composed of distinct, homogeneous parts. We combine a surface-based point clustering method with a volumetric shape segmentation algorithm to quickly produce a part-level segmentation of a manipulated object; the segmented representation is then used by HPS to accurately estimate the object's inertial parameters. To benchmark our algorithm, we create and utilize a novel dataset consisting of realistic meshes, segmented point clouds, and inertial parameters for 20 common workshop tools. Finally, we demonstrate the real-world performance and accuracy of HPS by performing an intricate 'hammer balancing act' autonomously and online with a low-cost collaborative robotic arm. Our code and dataset are open source and freely available.

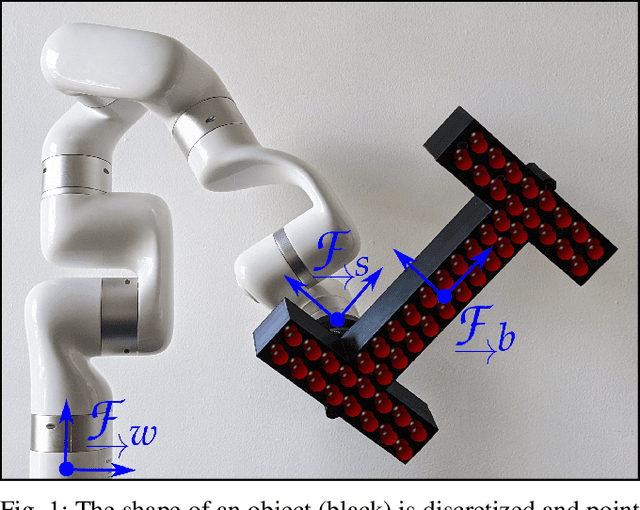

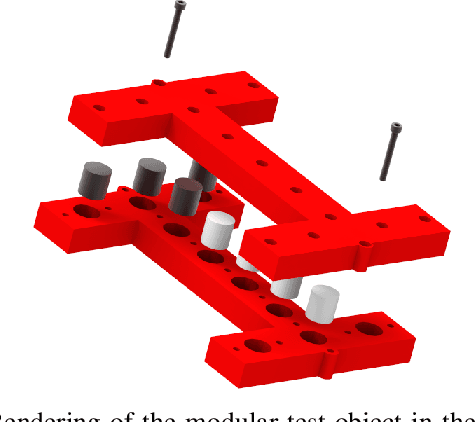

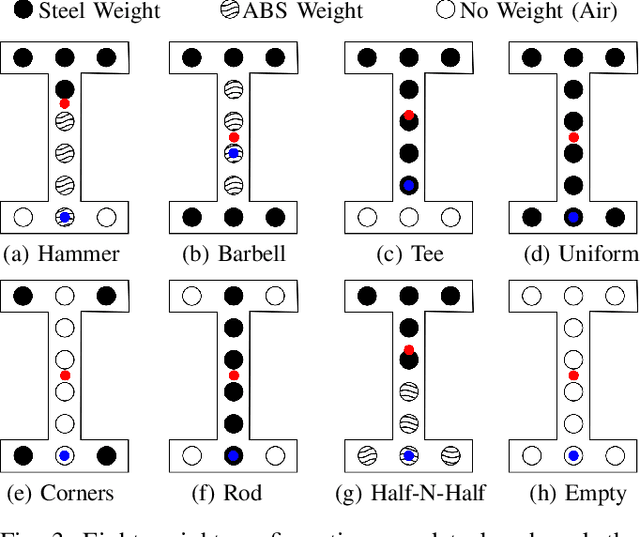

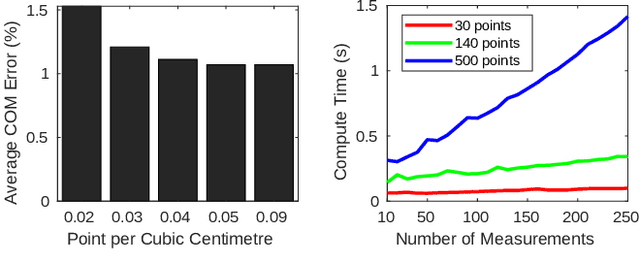

Fast Object Inertial Parameter Identification for Collaborative Robots

Mar 02, 2022

Abstract: Collaborative robots (cobots) are machines designed to work safely alongside people in human-centric environments. Providing cobots with the ability to quickly infer the inertial parameters of manipulated objects will improve their flexibility and enable greater usage in manufacturing and other areas. To ensure safety, cobots are subject to kinematic limits that result in low signal-to-noise ratios (SNR) for velocity, acceleration, and force-torque data. This renders existing inertial parameter identification algorithms prohibitively slow and inaccurate. Motivated by the desire for faster model acquisition, we investigate the use of an approximation of rigid body dynamics to improve the SNR. Additionally, we introduce a mass discretization method that can make use of shape information to quickly identify plausible inertial parameters for a manipulated object. We present extensive simulation studies and real-world experiments demonstrating that our approach complements existing inertial parameter identification methods by specifically targeting the typical cobot operating regime.

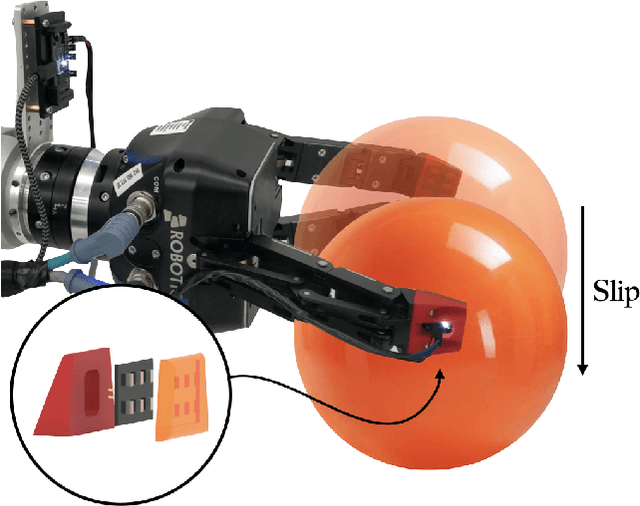

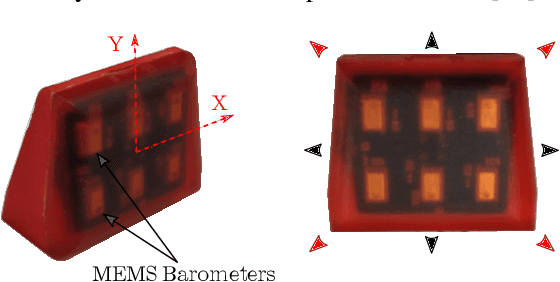

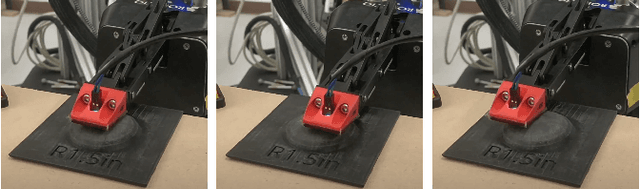

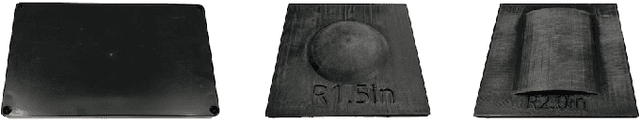

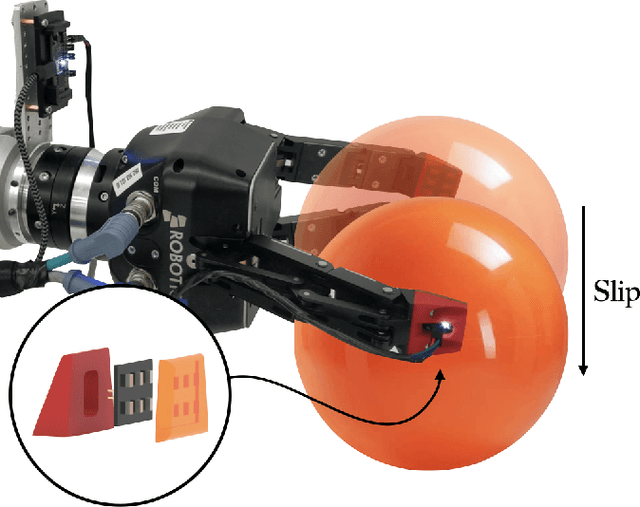

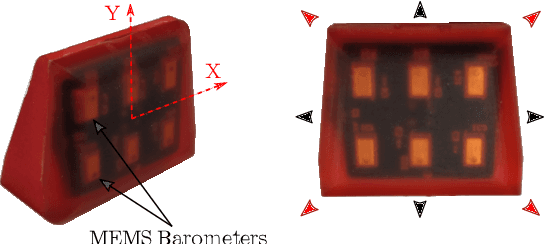

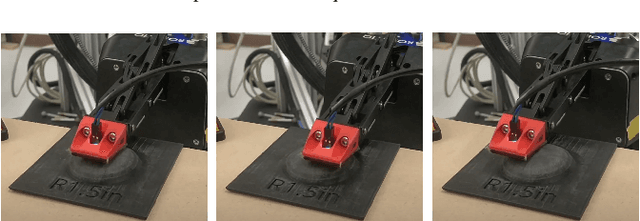

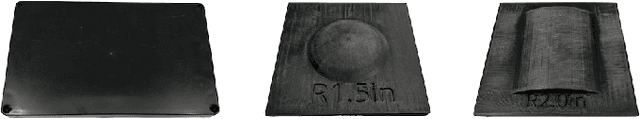

Learning to Detect Slip with Barometric Tactile Sensors and a Temporal Convolutional Neural Network

Feb 19, 2022

Abstract: The ability to perceive object slip via tactile feedback enables humans to accomplish complex manipulation tasks including maintaining a stable grasp. Despite the utility of tactile information for many applications, tactile sensors have yet to be widely deployed in industrial robotics settings; part of the challenge lies in identifying slip and other events from the tactile data stream. In this paper, we present a learning-based method to detect slip using barometric tactile sensors. These sensors have many desirable properties including high durability and reliability, and are built from inexpensive, off-the-shelf components. We train a temporal convolutional neural network to detect slip, achieving high detection accuracies while displaying robustness to the speed and direction of the slip motion. Further, we test our detector on two manipulation tasks involving a variety of common objects and demonstrate successful generalization to real-world scenarios not seen during training. We argue that barometric tactile sensing technology, combined with data-driven learning, is suitable for many manipulation tasks such as slip compensation.

Under Pressure: Learning to Detect Slip with Barometric Tactile Sensors

Mar 24, 2021

Abstract: The ability to perceive object slip through tactile feedback allows humans to accomplish complex manipulation tasks including maintaining a stable grasp. Despite the utility of tactile information for many robotics applications, tactile sensors have yet to be widely deployed in industrial settings; part of the challenge lies in identifying slip and other key events from the tactile data stream. In this paper, we present a learning-based method to detect slip using barometric tactile sensors. These sensors have many desirable properties including high reliability and durability, and are built from very inexpensive components. We are able to achieve slip detection accuracies of greater than 91% while displaying robustness to the speed and direction of the slip motion. Further, we test our detector on two robot manipulation tasks involving a variety of common objects and demonstrate successful generalization to real-world scenarios not seen during training. We show that barometric tactile sensing technology, combined with data-driven learning, is potentially suitable for many complex manipulation tasks such as slip compensation.
