Philip Tully

A Practical Guide for Evaluating LLMs and LLM-Reliant Systems

Jun 16, 2025

Abstract:Recent advances in generative AI have led to remarkable interest in using systems that rely on large language models (LLMs) for practical applications. However, meaningful evaluation of these systems in real-world scenarios comes with a distinct set of challenges, which are not well-addressed by synthetic benchmarks and de-facto metrics that are often seen in the literature. We present a practical evaluation framework which outlines how to proactively curate representative datasets, select meaningful evaluation metrics, and employ meaningful evaluation methodologies that integrate well with practical development and deployment of LLM-reliant systems that must adhere to real-world requirements and meet user-facing needs.

Transformers for End-to-End InfoSec Tasks: A Feasibility Study

Dec 05, 2022

Abstract:In this paper, we assess the viability of transformer models in end-to-end InfoSec settings, in which no intermediate feature representations or processing steps occur outside the model. We implement transformer models for two distinct InfoSec data formats - specifically URLs and PE files - in a novel end-to-end approach, and explore a variety of architectural designs, training regimes, and experimental settings to determine the ingredients necessary for performant detection models. We show that in contrast to conventional transformers trained on more standard NLP-related tasks, our URL transformer model requires a different training approach to reach high performance levels. Specifically, we show that 1) pre-training on a massive corpus of unlabeled URL data for an auto-regressive task does not readily transfer to binary classification of malicious or benign URLs, but 2) that using an auxiliary auto-regressive loss improves performance when training from scratch. We introduce a method for mixed objective optimization, which dynamically balances contributions from both loss terms so that neither one of them dominates. We show that this method yields quantitative evaluation metrics comparable to that of several top-performing benchmark classifiers. Unlike URLs, binary executables contain longer and more distributed sequences of information-rich bytes. To accommodate such lengthy byte sequences, we introduce additional context length into the transformer by providing its self-attention layers with an adaptive span similar to Sukhbaatar et al. We demonstrate that this approach performs comparably to well-established malware detection models on benchmark PE file datasets, but also point out the need for further exploration into model improvements in scalability and compute efficiency.

* Post-print of a manuscript accepted to ACM Asia-CCS Workshop on Robust Malware Analysis (WoRMA) 2022. 11 Pages total. arXiv admin note: substantial text overlap with arXiv:2011.03040

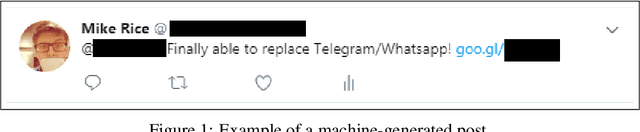

Generative Models for Spear Phishing Posts on Social Media

Feb 14, 2018

Abstract:Historically, machine learning in computer security has prioritized defense: think intrusion detection systems, malware classification, and botnet traffic identification. Offense can benefit from data just as well. Social networks, with their access to extensive personal data, bot-friendly APIs, colloquial syntax, and prevalence of shortened links, are the perfect venues for spreading machine-generated malicious content. We aim to discover what capabilities an adversary might utilize in such a domain. We present a long short-term memory (LSTM) neural network that learns to socially engineer specific users into clicking on deceptive URLs. The model is trained with word vector representations of social media posts, and in order to make a click-through more likely, it is dynamically seeded with topics extracted from the target's timeline. We augment the model with clustering to triage high value targets based on their level of social engagement, and measure success of the LSTM's phishing expedition using click-rates of IP-tracked links. We achieve state of the art success rates, tripling those of historic email attack campaigns, and outperform humans manually performing the same task.