Petr Baudiš

Sentence Pair Scoring: Towards Unified Framework for Text Comprehension

May 17, 2016

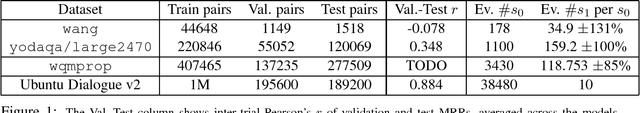

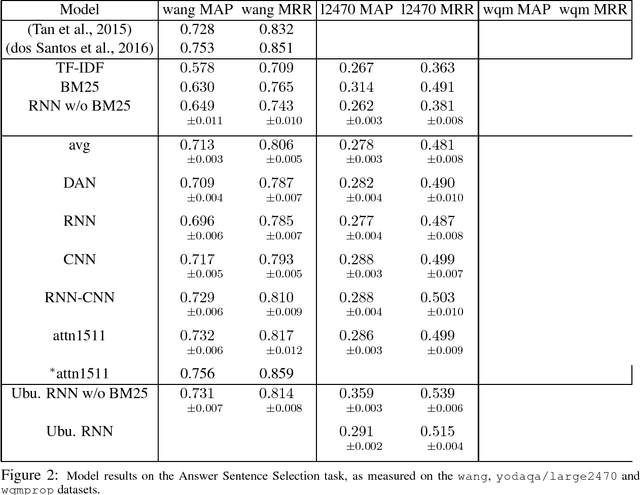

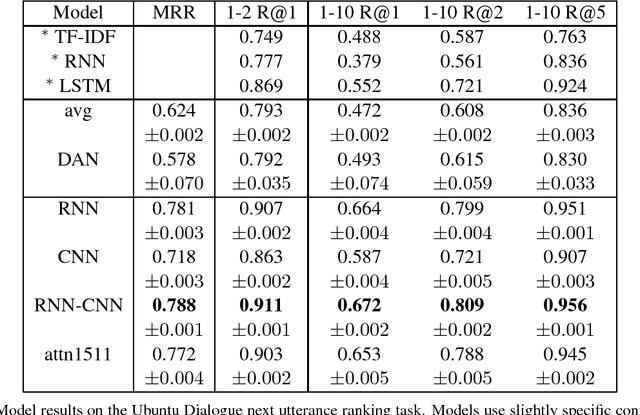

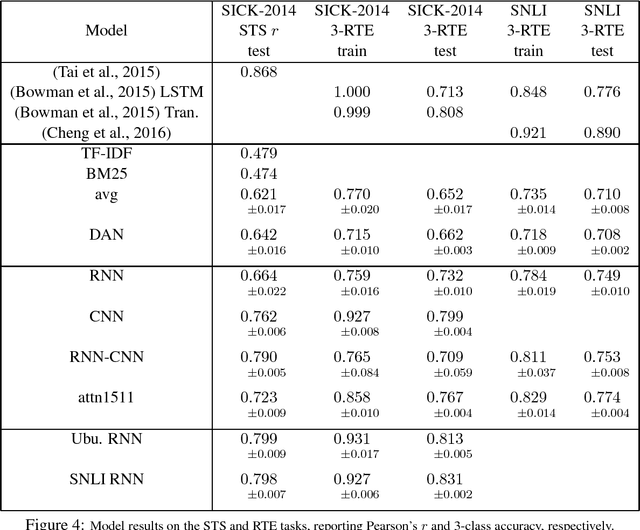

Abstract: We review the task of Sentence Pair Scoring, which appears in the literature in various forms: as Answer Sentence Selection, Semantic Text Scoring, Next Utterance Ranking, Recognizing Textual Entailment, Paraphrasing, or as a component of Memory Networks. We argue that all such tasks are similar from the model perspective and propose new baselines by comparing the performance of common IR metrics and popular convolutional, recurrent and attention-based neural models across many Sentence Pair Scoring tasks and datasets. We discuss the problem of evaluating randomized models, propose a statistically grounded methodology, and attempt to improve comparisons by releasing new datasets that are much harder than some of the currently used, well-explored benchmarks. We introduce a unified open source software framework with easily pluggable models and tasks, which enables us to experiment with multi-task reusability of trained sentence models. We set a new state of the art in performance on the Ubuntu Dialogue dataset.

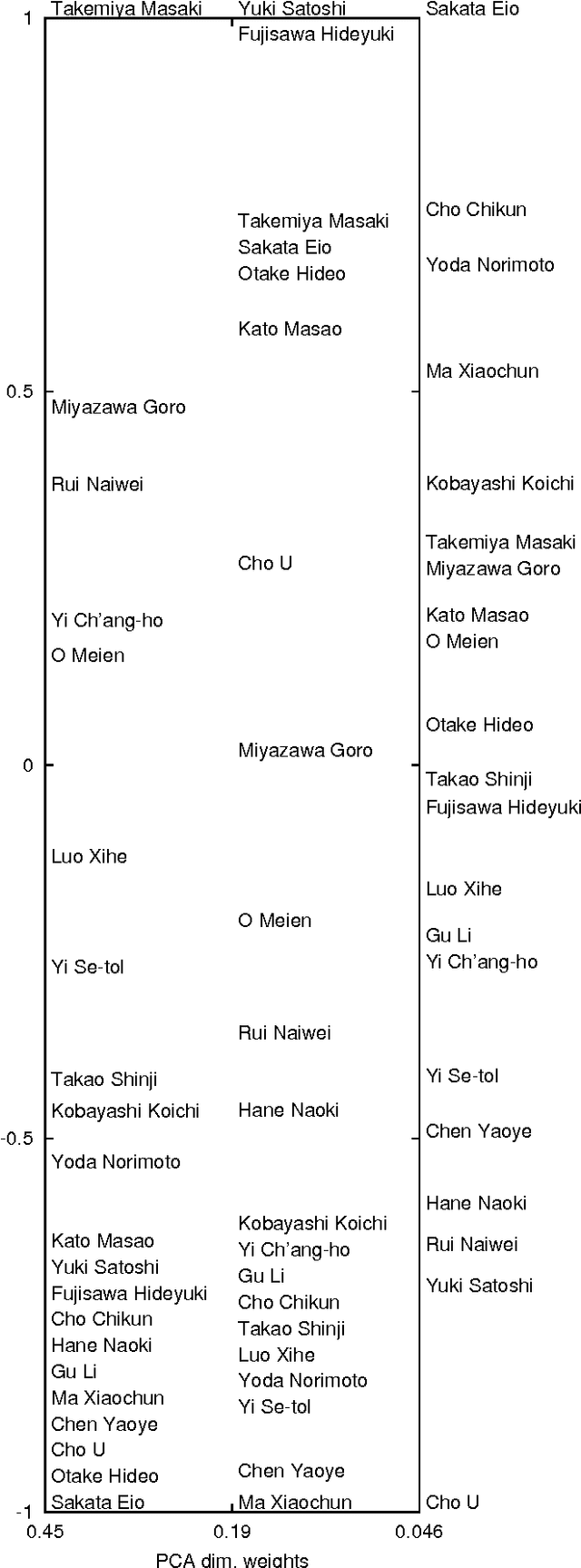

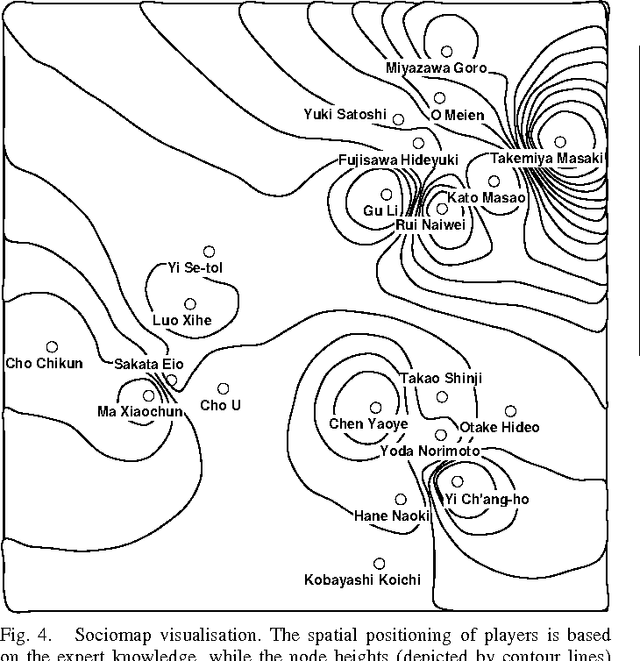

Evaluating Go Game Records for Prediction of Player Attributes

Dec 30, 2015

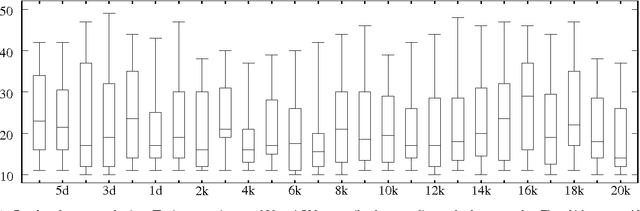

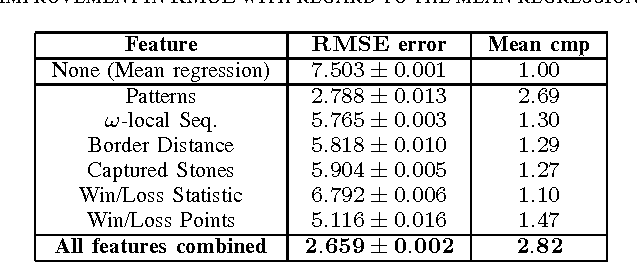

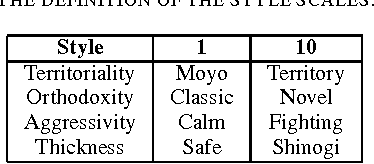

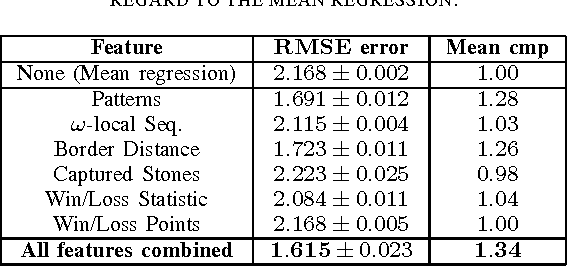

Abstract: We propose a way of extracting and aggregating per-move evaluations from sets of Go game records. The evaluations capture different aspects of the games, such as played patterns or statistics of sente/gote sequences. Using machine learning algorithms, the evaluations can be used to predict different relevant target variables. We apply this methodology to predict the strength and playing style of the player (e.g. territoriality or aggressivity) with good accuracy. We propose a number of possible applications, including aiding in Go study, seeding real-world ranks of internet players, and tuning of Go-playing programs.

COCOpf: An Algorithm Portfolio Framework

May 14, 2014

Abstract: Algorithm portfolios represent a strategy of composing multiple heuristic algorithms, each suited to a different class of problems, within a single general solver that chooses the best-suited algorithm for each input. This approach has recently gained popularity, especially for solving combinatorial problems, but optimization applications are still emerging. The COCO platform of the BBOB workshop series is the current standard way to measure the performance of continuous black-box optimization algorithms. As an extension to the COCO platform, we present the Python-based COCOpf framework, which allows composing portfolios of optimization algorithms and running experiments with different selection strategies. In our framework, we focus on black-box algorithm portfolios and online adaptive selection. As a demonstration, we measure the performance of stock SciPy optimization algorithms and the popular CMA algorithm alone and in a portfolio with two simple selection strategies. We confirm that even a naive selection strategy can provide improved performance across problem classes.

* POSTER2014. arXiv admin note: text overlap with arXiv:1206.5780 by other authors without attribution
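
To make the portfolio idea in the COCOpf abstract concrete, here is a minimal sketch of a black-box algorithm portfolio with a naive selection strategy. It does not use the COCO or COCOpf APIs. The class names `RandomSearch` and `HillClimber`, the function `run_portfolio`, and the round-robin selection rule are all assumptions made for illustration, and round-robin is not necessarily one of the two strategies evaluated in the paper.

```python
import random
from typing import Callable, List, Tuple

Objective = Callable[[List[float]], float]


class RandomSearch:
    """Hypothetical portfolio member: uniform sampling in a box."""

    def __init__(self, dim: int, rng: random.Random, low: float = -5.0, high: float = 5.0):
        self.dim, self.rng, self.low, self.high = dim, rng, low, high

    def step(self, f: Objective) -> Tuple[List[float], float]:
        # One objective evaluation at a fresh random point.
        x = [self.rng.uniform(self.low, self.high) for _ in range(self.dim)]
        return x, f(x)


class HillClimber:
    """Hypothetical portfolio member: Gaussian perturbation of its current point."""

    def __init__(self, dim: int, rng: random.Random, sigma: float = 0.5):
        self.rng, self.sigma = rng, sigma
        self.x = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        self.fx = None  # objective value at self.x, filled on the first step

    def step(self, f: Objective) -> Tuple[List[float], float]:
        if self.fx is None:
            self.fx = f(self.x)
            return self.x, self.fx
        cand = [xi + self.rng.gauss(0.0, self.sigma) for xi in self.x]
        fc = f(cand)
        if fc < self.fx:  # keep the candidate only if it improves
            self.x, self.fx = cand, fc
        return self.x, self.fx


def run_portfolio(f: Objective, members: list, budget: int) -> Tuple[List[float], float]:
    """Spend `budget` steps, choosing the member for each step round-robin."""
    best_x, best_f = None, float("inf")
    for i in range(budget):
        member = members[i % len(members)]  # naive selection strategy (assumed)
        x, fx = member.step(f)
        if fx < best_f:
            best_x, best_f = x, fx
    return best_x, best_f


def sphere(x: List[float]) -> float:
    """Simple test objective: sum of squares, minimized at the origin."""
    return sum(xi * xi for xi in x)


rng = random.Random(0)
members = [RandomSearch(2, rng), HillClimber(2, rng)]
best_x, best_f = run_portfolio(sphere, members, budget=200)
```

An online adaptive strategy would replace the `i % len(members)` line with a rule that favors members whose recent steps improved the best value; the round-robin rule here only illustrates where that choice plugs in.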

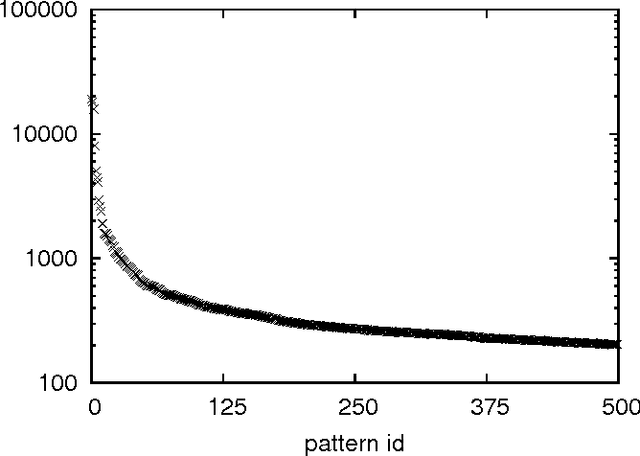

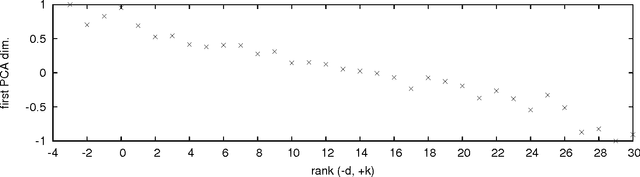

On Move Pattern Trends in a Large Go Games Corpus

Sep 24, 2012

Abstract: We process a large corpus of game records of the board game of Go and propose a way of extracting summary information on played moves. We then apply several basic data-mining methods to the summary information to identify its most differentiating features, and discuss their correspondence with traditional Go knowledge. We show statistically significant mappings of the features to player attributes such as playing strength or informally perceived "playing style" (e.g. territoriality or aggressivity), describe accurate classifiers for these attributes, and propose applications including seeding real-world ranks of internet players, aiding in Go study, tuning of Go-playing programs, and contributing to Go-theoretical discussion on the scope of "playing style".
