Peter R. Lewis

Proceedings of 1st Workshop on Advancing Artificial Intelligence through Theory of Mind

Apr 28, 2025

Abstract: This volume includes a selection of papers presented at the Workshop on Advancing Artificial Intelligence through Theory of Mind, held at AAAI 2025 in Philadelphia, US, on 3rd March 2025. The purpose of this volume is to provide an open access and curated anthology for the ToM and AI research community.

Is Trust Correlated With Explainability in AI? A Meta-Analysis

Apr 16, 2025

Abstract: This study critically examines the commonly held assumption that explicability in artificial intelligence (AI) systems inherently boosts user trust. Utilizing a meta-analytical approach, we conducted a comprehensive examination of the existing literature to explore the relationship between AI explainability and trust. Our analysis, incorporating data from 90 studies, reveals a statistically significant but moderate positive correlation between the explainability of AI systems and the trust they engender among users. This indicates that while explainability contributes to building trust, it is not the sole or predominant factor in this equation. In addition to academic contributions to the field of Explainable AI (XAI), this research highlights its broader socio-technical implications, particularly in promoting accountability and fostering user trust in critical domains such as healthcare and justice. By addressing challenges like algorithmic bias and ethical transparency, the study underscores the need for equitable and sustainable AI adoption. Rather than focusing solely on immediate trust, we emphasize the normative importance of fostering authentic and enduring trustworthiness in AI systems.

The challenge of uncertainty quantification of large language models in medicine

Apr 07, 2025

Abstract: This study investigates uncertainty quantification in large language models (LLMs) for medical applications, emphasizing both technical innovations and philosophical implications. As LLMs become integral to clinical decision-making, accurately communicating uncertainty is crucial for ensuring reliable, safe, and ethical AI-assisted healthcare. Our research frames uncertainty not as a barrier but as an essential part of knowledge that invites a dynamic and reflective approach to AI design. By integrating advanced probabilistic methods such as Bayesian inference, deep ensembles, and Monte Carlo dropout with linguistic analysis that computes predictive and semantic entropy, we propose a comprehensive framework that manages both epistemic and aleatoric uncertainties. The framework incorporates surrogate modeling to address limitations of proprietary APIs, multi-source data integration for better context, and dynamic calibration via continual and meta-learning. Explainability is embedded through uncertainty maps and confidence metrics to support user trust and clinical interpretability. Our approach supports transparent and ethical decision-making aligned with Responsible and Reflective AI principles. Philosophically, we advocate accepting controlled ambiguity instead of striving for absolute predictability, recognizing the inherent provisionality of medical knowledge.

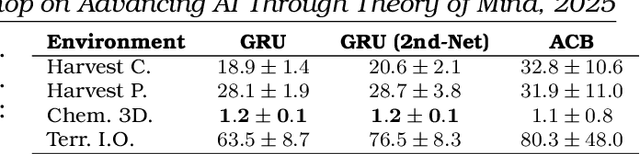

Online Learning of Temporal Dependencies for Sustainable Foraging Problem

Jul 01, 2024

Abstract: The sustainable foraging problem is a dynamic environment testbed for exploring the forms of agent cognition in dealing with social dilemmas in a multi-agent setting. The agents need to resist the temptation of individual rewards through foraging and choose the collective long-term goal of sustainability. We investigate methods of online learning in Neuro-Evolution and Deep Recurrent Q-Networks to enable agents to attempt the problem one-shot, as is often required by wicked social problems. We further explore whether learning temporal dependencies with Long Short-Term Memory can aid the agents in developing sustainable foraging strategies in the long term. It was found that the integration of Long Short-Term Memory assisted agents in developing sustainable strategies in the single-agent case, but failed to assist agents in managing the social dilemma that arises in the multi-agent scenario.

Reflective Artificial Intelligence

Jan 25, 2023

Abstract: Artificial Intelligence (AI) is about making computers that do the sorts of things that minds can do, and as we progress towards this goal, we tend to increasingly delegate human tasks to machines. However, AI systems usually do these tasks with an unusual imbalance of insight and understanding: new, deeper insights are present, yet many important qualities that a human mind would have previously brought to the activity are utterly absent. Therefore, it is crucial to ask which features of minds have we replicated, which are missing, and if that matters. One core feature that humans bring to tasks, when dealing with the ambiguity, emergent knowledge, and social context presented by the world, is reflection. Yet this capability is utterly missing from current mainstream AI. In this paper we ask what reflective AI might look like. Then, drawing on notions of reflection in complex systems, cognitive science, and agents, we sketch an architecture for reflective AI agents, and highlight ways forward.

Can Bio-Inspired Swarm Algorithms Scale to Modern Societal Problems?

May 20, 2019

Abstract: Taking inspiration from nature for meta-heuristics has proven popular and relatively successful. Many are inspired by the collective intelligence exhibited by insects, fish and birds. However, there is a question over their scalability to the types of complex problems experienced in the modern world. Natural systems evolved to solve simpler problems effectively; replicating these processes for complex problems may suffer from inefficiencies. Several causal factors can impact scalability: computational complexity, memory requirements or pure problem intractability. Supporting evidence is provided by a case study applying Ant Colony Optimisation (ACO) to increasingly complex real-world fleet optimisation problems. This paper hypothesizes that, contrary to common intuition, bio-inspired collective intelligence techniques by their very nature exhibit poor scalability in cases of high dimensionality when large degrees of decision making are required. Facilitating scaling of bio-inspired algorithms necessitates reducing this decision making. To support this hypothesis, an enhanced Partial-ACO technique is presented which effectively reduces ant decision making. Reducing the decision making required by ants by up to 90% results in markedly improved effectiveness and reduced runtimes for increasingly complex fleet optimisation problems. Reductions in traversal timings of 40-50% are achieved for problems with up to 45 vehicles and 437 jobs.

Applying Partial-ACO to Large-scale Vehicle Fleet Optimisation

Apr 16, 2019

Abstract: Optimisation of fleets of commercial vehicles with regard to scheduling tasks from various locations to vehicles can result in considerably lower fleet traversal times. This has significant benefits including reduced expenses for the company and, more importantly, a reduction in the degree of road use and hence vehicular emissions. Exact optimisation methods fail to scale to real commercial problem instances, thus meta-heuristics are more suitable. Ant Colony Optimisation (ACO) generally provides good solutions on small to medium problem sizes. However, commercial fleet optimisation problems are typically large and complex, and ACO fails to scale well to them. Partial-ACO is a new ACO variant designed to scale to larger problem instances. Therefore, this paper investigates the application of Partial-ACO to the problem of fleet optimisation, demonstrating the capacity of Partial-ACO to successfully scale to larger problems. Indeed, for real-world fleet optimisation problems supplied by a Birmingham-based company with up to 298 jobs and 32 vehicles, Partial-ACO can improve upon their fleet traversal times by over 44%. Moreover, Partial-ACO demonstrates its ability to scale with considerably improved results over standard ACO and competitive results against a Genetic Algorithm.
