Peter Bai

ApproxNet: Content and Contention Aware Video Analytics System for the Edge

Aug 28, 2019

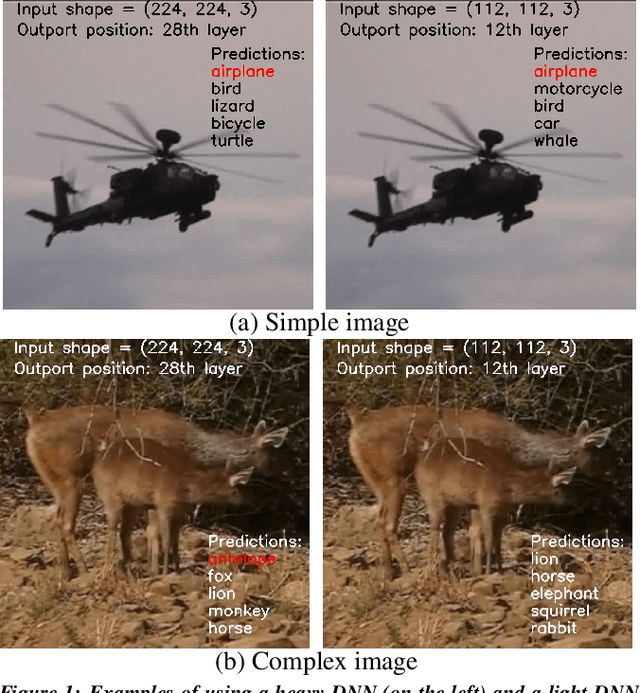

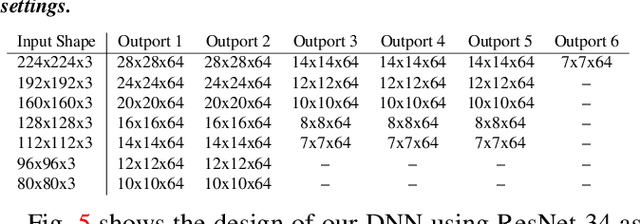

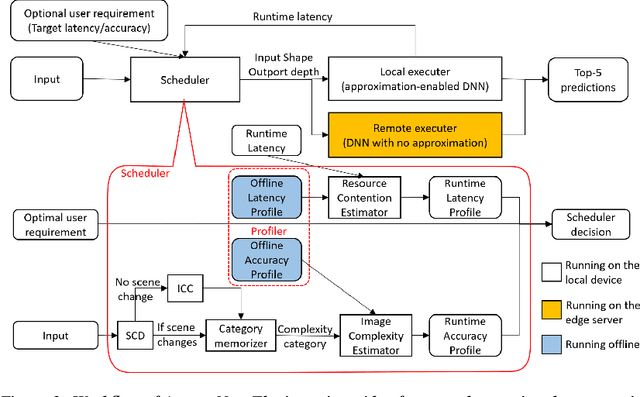

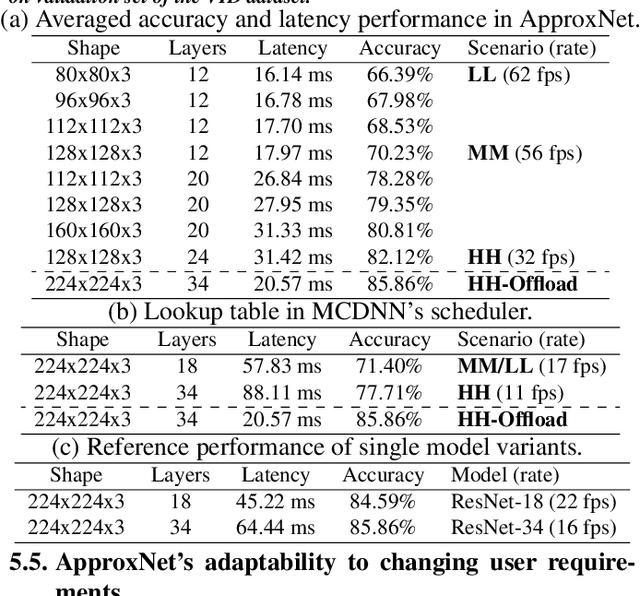

Abstract: Videos take a lot of time to transport over the network, so running analytics on live video at the edge devices, right where it is captured, has become an important system driver. However, these edge devices (e.g., IoT devices, surveillance cameras, AR/VR gadgets) are resource constrained. This makes it impossible to run state-of-the-art heavy Deep Neural Networks (DNNs) on them and still provide low and stable latency under varying circumstances, such as changes in resource availability on the device, in content characteristics, or in user requirements. In this paper we introduce ApproxNet, a video analytics system for the edge. It enables novel dynamic approximation techniques to achieve the desired trade-off between inference latency and accuracy under different system conditions and resource contention, variations in the complexity of the video content, and user requirements. It achieves this by enabling two approximation knobs within a single DNN model, rather than creating and maintaining an ensemble of models (as in MCDNN [Mobisys-16]). Ensemble models run into memory issues on lightweight devices and incur large penalties when switching among models in response to runtime changes. We show that ApproxNet can adapt seamlessly at runtime to changes in video content and in system dynamics to provide low and stable latency for object detection on a video stream. We compare its accuracy and latency to those of ResNet [2015], MCDNN, and MobileNets [Google-2017].
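To make the idea of runtime adaptation concrete, here is a minimal Python sketch of how a scheduler might pick a setting of two approximation knobs to meet a latency budget under contention. It is an illustration under stated assumptions, not ApproxNet's actual method: the abstract does not name the knobs, so `knob_a` and `knob_b`, the `PROFILE` table and its numbers, and the linear slowdown model in `estimated_latency` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Config:
    """One setting of the two approximation knobs (knob names are hypothetical)."""
    knob_a: int
    knob_b: int
    accuracy: float         # assumed to come from offline profiling
    base_latency_ms: float  # assumed latency with no resource contention


def estimated_latency(cfg: Config, contention: float) -> float:
    """Assumed model: latency grows linearly with the contention level."""
    return cfg.base_latency_ms * (1.0 + contention)


def pick_config(configs: Sequence[Config],
                latency_budget_ms: float,
                contention: float) -> Config:
    """Return the most accurate config that fits the budget.

    If nothing fits, fall back to the fastest config.
    """
    feasible = [c for c in configs
                if estimated_latency(c, contention) <= latency_budget_ms]
    if feasible:
        return max(feasible, key=lambda c: c.accuracy)
    return min(configs, key=lambda c: estimated_latency(c, contention))


# Made-up profile table, for illustration only.
PROFILE = [
    Config(0, 0, 0.60, 10.0),
    Config(1, 0, 0.70, 20.0),
    Config(1, 1, 0.78, 35.0),
    Config(2, 1, 0.82, 60.0),
]

if __name__ == "__main__":
    # The same 40 ms budget yields a cheaper setting once contention rises.
    print(pick_config(PROFILE, 40.0, contention=0.0))
    print(pick_config(PROFILE, 40.0, contention=1.0))
```

Because both knobs live in one model in this sketch, changing the selection is just a parameter change on the next frame, which is the property the abstract contrasts with swapping between separate ensemble models.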
