Pedro A. Lopes

Human Activity Recognition with a 6.5 GHz Reconfigurable Intelligent Surface for Wi-Fi 6E

Jan 24, 2025

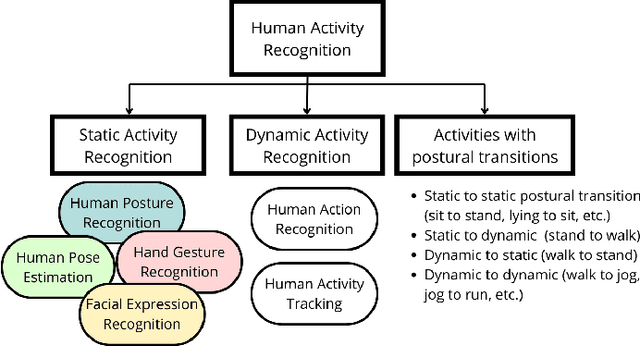

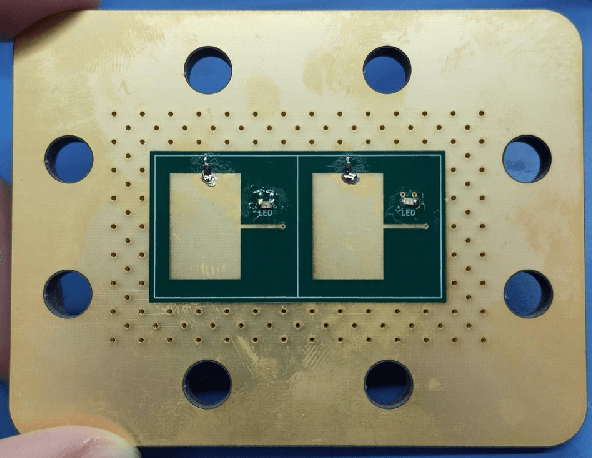

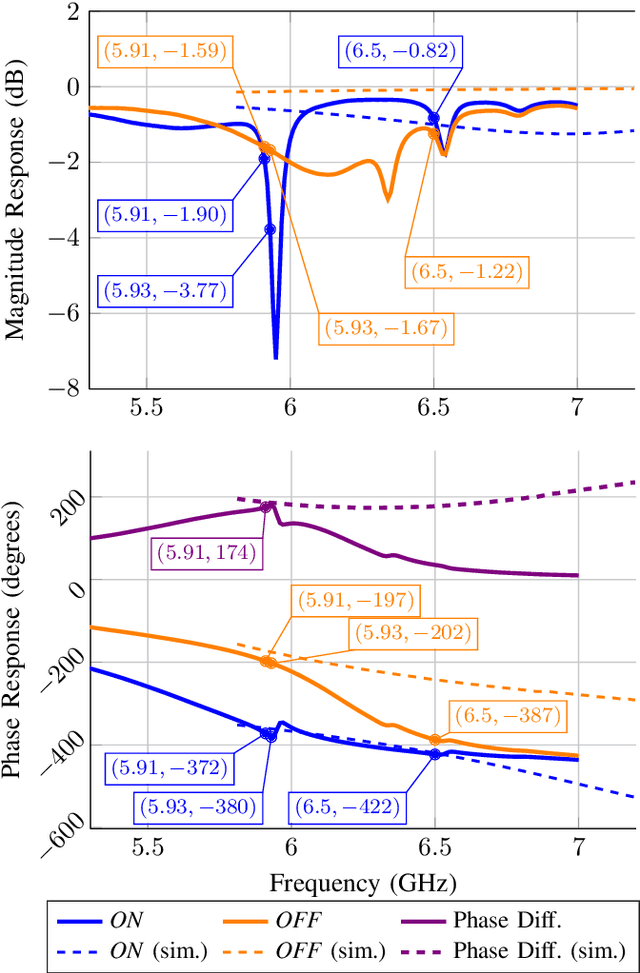

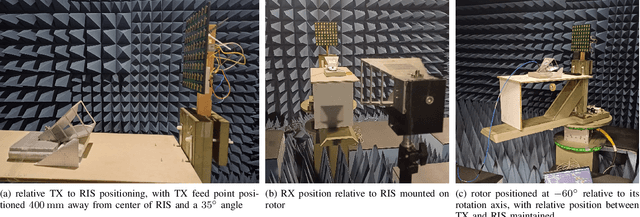

Abstract: Human Activity Recognition (HAR) is the identification and classification of static and dynamic human activities, with applications in domains such as healthcare, entertainment, security, and cyber-physical systems. Traditional HAR approaches rely on wearable sensors, vision-based systems, or ambient sensing, each with inherent limitations such as privacy concerns or restricted sensing conditions. Recently, Radio Frequency (RF)-based HAR has emerged, relying on the interaction of RF signals with people to infer activities. Reconfigurable Intelligent Surfaces (RISs) offer significant potential in this domain by enabling dynamic control over the wireless environment, thus enhancing the information extracted from RF signals. We present a Hand Gesture Recognition (HGR) approach that employs our own 6.5 GHz RIS design to manipulate the RF medium in an area of interest. We validate the capability of our RIS to control the medium by characterizing its steering response, and we gather and publish a dataset for HGR classification of three different hand gestures. Using two Convolutional Neural Network (CNN) models trained on data gathered under random and optimized RIS configuration sequences, we achieve classification accuracies exceeding 90%.
