Pavlos Charalampidis

Evaluating Short-Term Forecasting of Multiple Time Series in IoT Environments

Jun 15, 2022

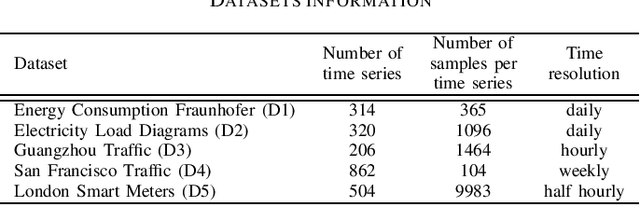

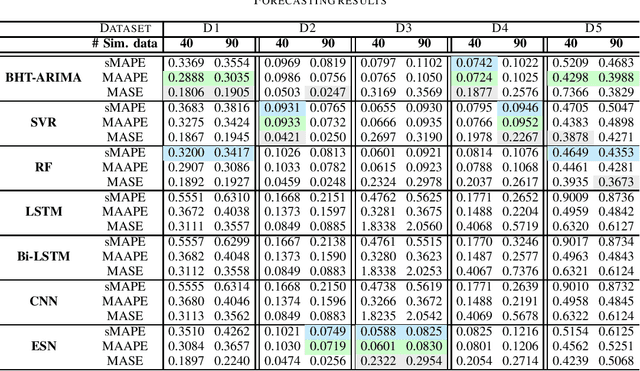

Abstract: Modern Internet of Things (IoT) environments are monitored by a large number of IoT-enabled sensing devices, whose data acquisition and processing infrastructure imposes constraints on computational power and energy resources. To alleviate this issue, sensors are often configured to operate at relatively low sampling frequencies, yielding a reduced set of observations. However, this can dramatically hamper subsequent decision-making tasks, such as forecasting. To address this problem, in this work we evaluate short-term forecasting in highly underdetermined cases, i.e., when the number of sensor streams is much higher than the number of observations. Several statistical, machine learning, and neural network-based models are thoroughly examined with respect to their forecasting accuracy on five different real-world datasets. The focus is on a unified experimental protocol designed specifically for short-term prediction of multiple time series at the IoT edge. The proposed framework can be considered an important step towards establishing a solid forecasting strategy in resource-constrained IoT applications.
