Paul R. Genssler

Modeling and Predicting Transistor Aging under Workload Dependency using Machine Learning

Jul 08, 2022

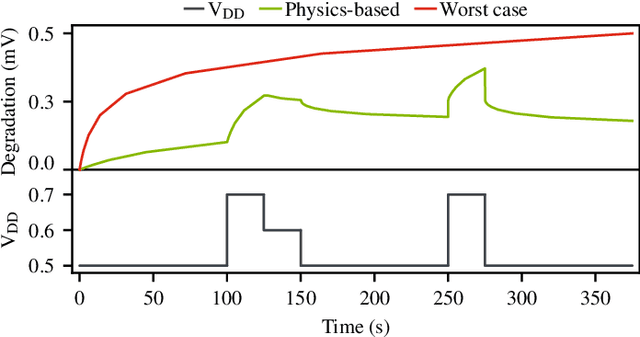

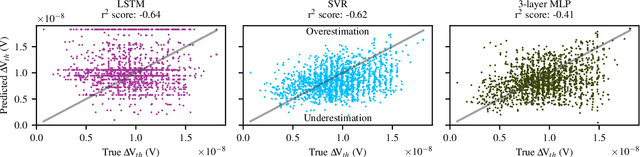

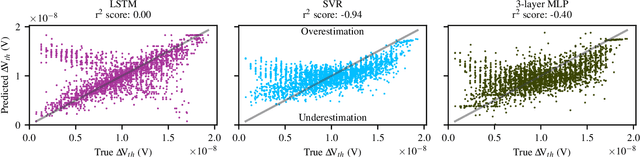

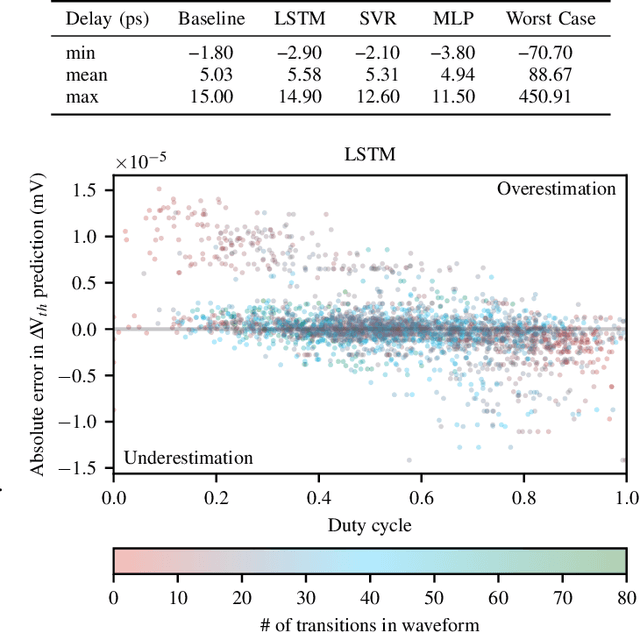

Abstract: The pivotal issue of reliability is one of colossal concern for circuit designers. The driving force is transistor aging, which depends on operating voltage and workload. At design time, it is difficult to estimate close-to-the-edge guardbands that keep aging effects at bay over the lifetime. This is because the foundry does not share its calibrated physics-based models, which comprise highly confidential technology and material parameters. However, the unmonitored yet necessary overestimation of degradation amounts to a performance decline that could be prevented. Furthermore, these physics-based models are exceptionally computationally complex. The cost of modeling millions of individual transistors at design time can be exorbitant. We propose the revolutionizing prospect of a machine learning model trained to replicate the physics-based model, such that no confidential parameters are disclosed. This effectual workaround is fully accessible to circuit designers for the purposes of design optimization. We demonstrate the models' ability to generalize by training on data from one circuit and applying it successfully to a benchmark circuit. The mean relative error is as low as 1.7%, with a speedup of up to 20X. Circuit designers, for the first time ever, will have ease of access to a high-precision aging model, which is paramount for efficient designs. This work is a promising step in the direction of bridging the wide gulf between the foundry and circuit designers.

Brain-Inspired Hyperdimensional Computing: How Thermal-Friendly for Edge Computing?

Apr 05, 2022

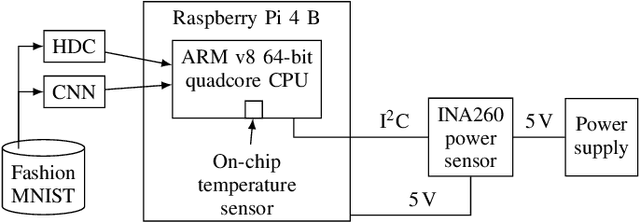

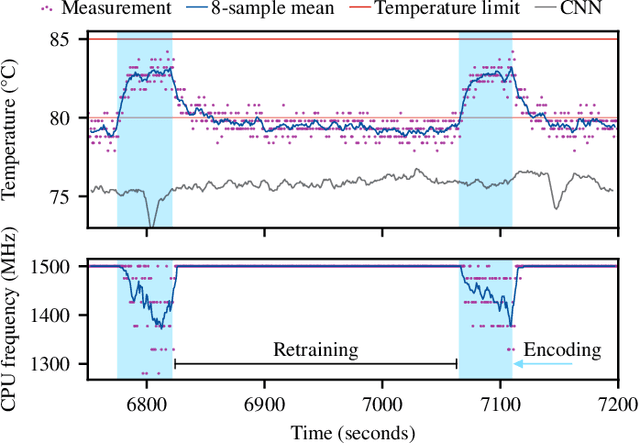

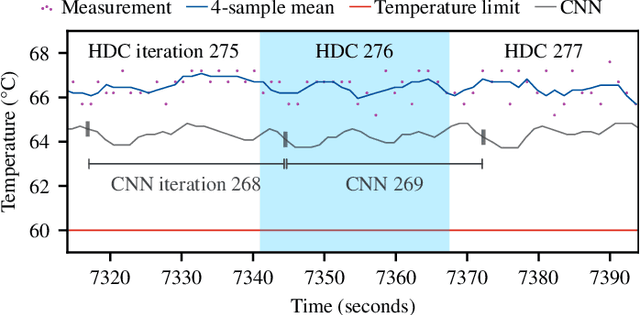

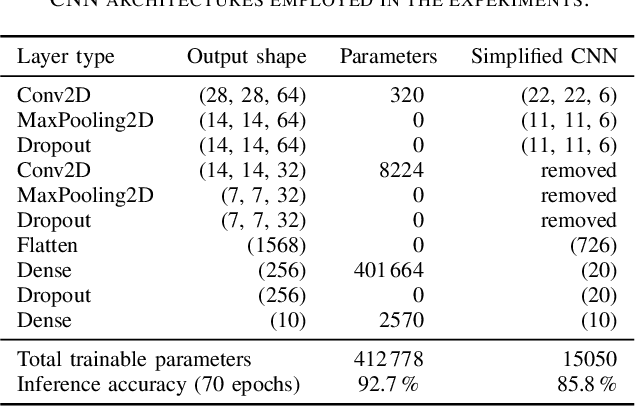

Abstract: Brain-inspired hyperdimensional computing (HDC) is an emerging machine learning (ML) method. It is based on large vectors of binary or bipolar symbols and a few simple mathematical operations. The promise of HDC is a highly efficient implementation for embedded systems like wearables. While fast implementations have been presented, other constraints have not been considered for edge computing. In this work, we aim to answer how thermal-friendly HDC is for edge computing. Devices like smartwatches, smart glasses, or even mobile systems have a restrictive cooling budget due to their limited volume. Although HDC operations are simple, the vectors are large, resulting in a high number of CPU operations and thus a heavy load on the entire system, potentially causing temperature violations. In this work, the impact of HDC on the chip's temperature is investigated for the first time. We measure the temperature and power consumption of a commercial embedded system and compare HDC with a conventional CNN. We reveal that HDC causes up to 6.8°C higher temperatures and leads to up to 47% more CPU throttling. Even when both HDC and CNN aim for the same throughput (i.e., perform a similar number of classifications per second), HDC still causes higher on-chip temperatures due to the larger power consumption.
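To make the "large vectors and a few simple operations" concrete, the following is a minimal sketch of common HDC primitives on bipolar vectors. It is not the implementation evaluated in the paper: the dimensionality `DIM` and the helper names `random_hv`, `bind`, `bundle`, and `similarity` are assumptions chosen for illustration.

```python
import random

# Assumed dimensionality for illustration; the abstract only says "large vectors".
DIM = 10_000


def random_hv(rng, dim=DIM):
    """Draw a random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(dim)]


def bind(a, b):
    """Binding: element-wise multiplication. The result is dissimilar to both inputs."""
    return [x * y for x, y in zip(a, b)]


def bundle(vectors):
    """Bundling: element-wise majority vote (sign of the sum). Ties resolve to +1."""
    return [1 if sum(column) >= 0 else -1 for column in zip(*vectors)]


def similarity(a, b):
    """Normalized dot product in [-1, 1]; close to 0 for unrelated random vectors."""
    return sum(x * y for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
a, b, c = (random_hv(rng) for _ in range(3))

prototype = bundle([a, b, c])  # stays similar to each of its members
bound = bind(a, b)             # looks unrelated to a and to b

print("sim(a, b)         =", similarity(a, b))
print("sim(prototype, a) =", similarity(prototype, a))
print("sim(bound, a)     =", similarity(bound, a))
```

Each primitive is trivial per element, but every call touches all `DIM` entries. That is the pattern the abstract points to: simple operations over very large vectors still add up to a high number of CPU operations.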
