Paul Knoll

Animating NeRFs from Texture Space: A Framework for Pose-Dependent Rendering of Human Performances

Nov 06, 2023

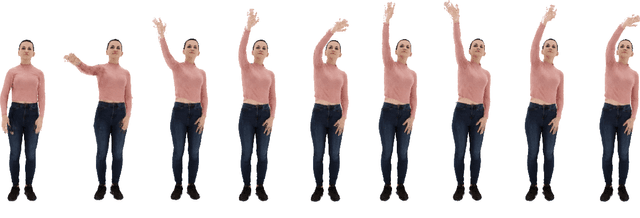

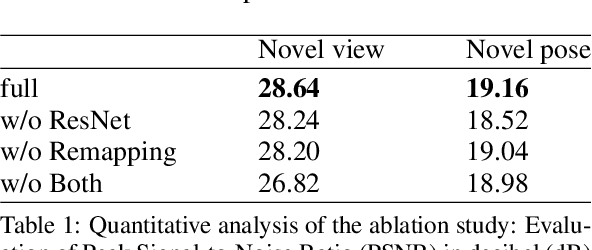

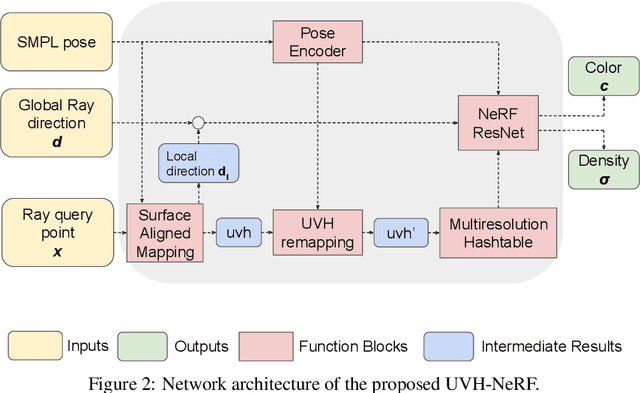

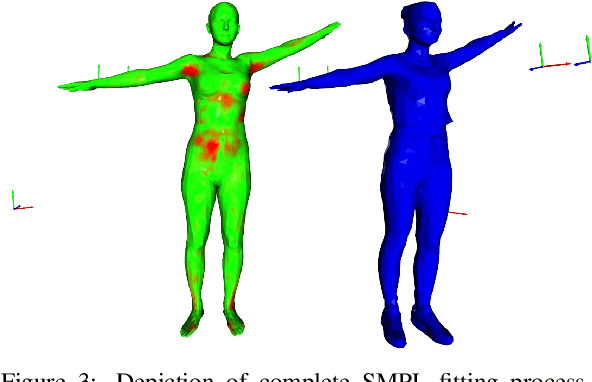

Abstract: Creating high-quality, controllable 3D human models from multi-view RGB videos poses a significant challenge. Neural radiance fields (NeRFs) have demonstrated remarkable quality in the reconstruction and free-viewpoint rendering of both static and dynamic scenes. Extending them to the controllable synthesis of dynamic human performances poses an exciting research question. In this paper, we introduce a novel NeRF-based framework for pose-dependent rendering of human performances. In our approach, the radiance field is warped around an SMPL body mesh, thereby creating a new surface-aligned representation. Our representation can be animated through skeletal joint parameters, which are provided to the NeRF in addition to the viewpoint to model pose-dependent appearance. To achieve this, our representation includes the corresponding 2D UV coordinates on the mesh texture map and the distance between the query point and the mesh. To enable efficient learning despite mapping ambiguities and random visual variations, we introduce a novel remapping process that refines the mapped coordinates. Experiments demonstrate that our approach results in high-quality renderings for novel-view and novel-pose synthesis.
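To make the surface-aligned representation more concrete, the following minimal sketch maps a 3D query point to a UV coordinate and a distance to a triangle mesh. It is an illustration under stated assumptions, not the paper's implementation: the function names (`closest_barycentric`, `map_to_texture_space`) are hypothetical, the search is a brute-force loop over all faces, the distance is unsigned, and the remapping step mentioned in the abstract is not modeled. The closest-point routine follows the standard region-based test for triangles.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def closest_barycentric(p, a, b, c):
    """Barycentric weights of the point on triangle abc closest to p.

    Assumes a non-degenerate triangle (hypothetical helper).
    """
    ab, ac, ap = sub(b, a), sub(c, a), sub(p, a)
    d1, d2 = dot(ab, ap), dot(ac, ap)
    if d1 <= 0 and d2 <= 0:
        return (1.0, 0.0, 0.0)          # closest to vertex a
    bp = sub(p, b)
    d3, d4 = dot(ab, bp), dot(ac, bp)
    if d3 >= 0 and d4 <= d3:
        return (0.0, 1.0, 0.0)          # closest to vertex b
    vc = d1 * d4 - d3 * d2
    if vc <= 0 and d1 >= 0 and d3 <= 0:
        v = d1 / (d1 - d3)
        return (1.0 - v, v, 0.0)        # closest to edge ab
    cp = sub(p, c)
    d5, d6 = dot(ab, cp), dot(ac, cp)
    if d6 >= 0 and d5 <= d6:
        return (0.0, 0.0, 1.0)          # closest to vertex c
    vb = d5 * d2 - d1 * d6
    if vb <= 0 and d2 >= 0 and d6 <= 0:
        w = d2 / (d2 - d6)
        return (1.0 - w, 0.0, w)        # closest to edge ac
    va = d3 * d6 - d5 * d4
    if va <= 0 and (d4 - d3) >= 0 and (d5 - d6) >= 0:
        w = (d4 - d3) / ((d4 - d3) + (d5 - d6))
        return (0.0, 1.0 - w, w)        # closest to edge bc
    denom = 1.0 / (va + vb + vc)
    v, w = vb * denom, vc * denom
    return (1.0 - v - w, v, w)          # closest point lies inside the face

def map_to_texture_space(p, vertices, faces, uvs):
    """Return (u, v, distance) for query point p.

    vertices: list of 3D points; faces: list of vertex-index triples;
    uvs: one (u, v) pair per vertex (an assumption; real meshes often
    store UVs per face corner).
    """
    best = None
    for i, j, k in faces:
        wts = closest_barycentric(p, vertices[i], vertices[j], vertices[k])
        # Reconstruct the closest surface point from the barycentric weights.
        q = tuple(
            wts[0] * vertices[i][n] + wts[1] * vertices[j][n] + wts[2] * vertices[k][n]
            for n in range(3)
        )
        dist = math.sqrt(dot(sub(p, q), sub(p, q)))
        if best is None or dist < best[2]:
            # Interpolate the UV coordinate with the same weights.
            u = wts[0] * uvs[i][0] + wts[1] * uvs[j][0] + wts[2] * uvs[k][0]
            v = wts[0] * uvs[i][1] + wts[1] * uvs[j][1] + wts[2] * uvs[k][1]
            best = (u, v, dist)
    return best

# Toy single-triangle "mesh" for illustration only.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
faces = [(0, 1, 2)]
uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
u, v, d = map_to_texture_space((0.25, 0.25, 0.5), vertices, faces, uvs)
```

In a setup like the one the abstract describes, a triple of this kind, together with the viewing direction and the skeletal joint parameters, would plausibly form the network input in place of raw world coordinates. A practical version would replace the brute-force loop with a spatial acceleration structure.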
