Patrick Kenny

Generative Adversarial Speaker Embedding Networks for Domain Robust End-to-End Speaker Verification

Nov 07, 2018

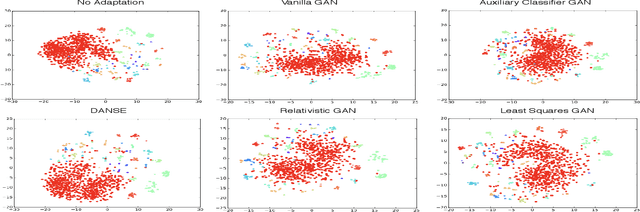

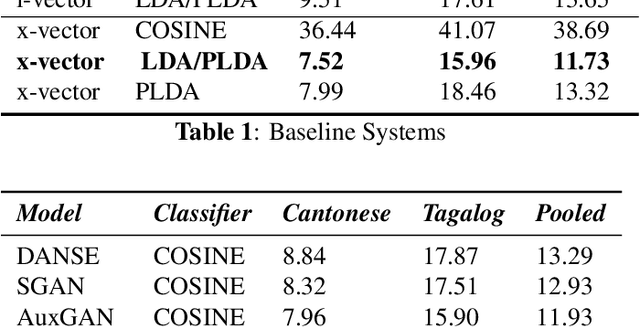

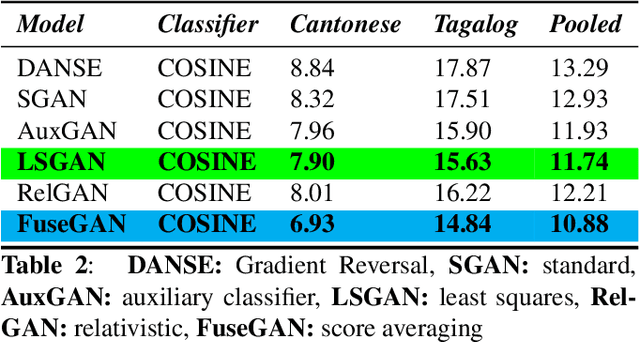

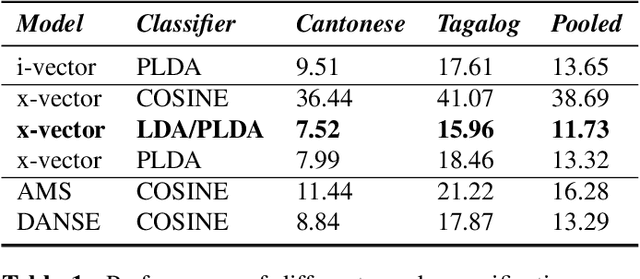

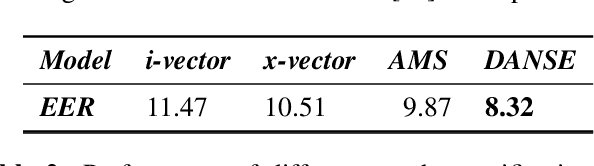

Abstract: This article presents a novel approach for learning domain-invariant speaker embeddings using Generative Adversarial Networks. The main idea is to confuse a domain discriminator so that it cannot tell whether embeddings come from the source or the target domain. We train several GAN variants using our proposed framework and apply them to the speaker verification task. On the challenging NIST-SRE 2016 dataset, we are able to match the performance of a strong baseline x-vector system. In contrast to the baseline systems, which depend on dimensionality reduction (LDA) and an external classifier (PLDA), our proposed speaker embeddings can be scored using simple cosine distance. This is achieved by optimizing our models end-to-end, using an angular margin loss function. Furthermore, we are able to significantly boost verification performance by averaging our different GAN models at the score level, achieving a relative improvement of 7.2% over the baseline.
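
The cosine scoring and score-level averaging mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `cosine_score` and `fuse_scores` are hypothetical, and the abstract does not say whether scores are normalized before averaging, so the plain arithmetic mean used here is an assumption.

```python
import math


def cosine_score(enroll, test):
    """Cosine similarity between two speaker embeddings (higher = more similar)."""
    if len(enroll) != len(test):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(enroll, test))
    norm_enroll = math.sqrt(sum(x * x for x in enroll))
    norm_test = math.sqrt(sum(y * y for y in test))
    if norm_enroll == 0.0 or norm_test == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_enroll * norm_test)


def fuse_scores(per_model_scores):
    """Score-level fusion: average the scores that several models give one trial.

    Assumption: an unweighted mean with no per-model score normalization.
    """
    if not per_model_scores:
        raise ValueError("need at least one score")
    return sum(per_model_scores) / len(per_model_scores)


# One verification trial scored by two hypothetical models, then fused.
trial_scores = [
    cosine_score([0.9, 0.1, 0.0], [0.8, 0.2, 0.1]),  # model A embeddings
    cosine_score([0.5, 0.5, 0.1], [0.4, 0.6, 0.0]),  # model B embeddings
]
fused = fuse_scores(trial_scores)
```

The fused score would then be compared with a decision threshold to accept or reject the trial; no LDA or PLDA stage is involved in this kind of scoring.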

Adapting End-to-End Neural Speaker Verification to New Languages and Recording Conditions with Adversarial Training

Nov 07, 2018

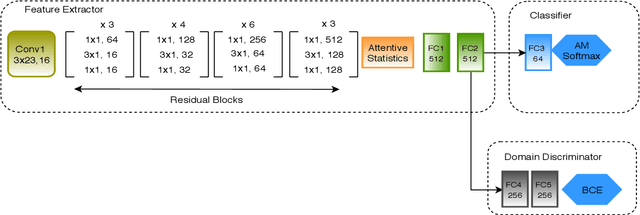

Abstract: In this article we propose a novel approach for adapting speaker embeddings to new domains based on adversarial training of neural networks. We apply our embeddings to the task of text-independent speaker verification, a challenging, real-world problem in biometric security. We further the development of end-to-end speaker embedding models by combining a novel 1-dimensional, self-attentive residual network, an angular margin loss function and an adversarial training strategy. Our model is able to learn extremely compact, 64-dimensional speaker embeddings that deliver competitive performance on a number of popular datasets using simple cosine distance scoring. On the NIST-SRE 2016 task we are able to beat a strong i-vector baseline, while on the Speakers in the Wild task our model was able to outperform both i-vector and x-vector baselines, showing an absolute improvement of 2.19% over the latter. Additionally, we show that the integration of adversarial training consistently leads to a significant improvement over an unadapted model.
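
To make the idea of an angular margin loss concrete, here is a minimal sketch of one member of that family, the additive-margin softmax. The abstract does not state which variant the authors use, so the choice of variant, the function name `am_softmax_loss`, and the default `scale` and `margin` values are all assumptions made for illustration.

```python
import math


def _cosine(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def am_softmax_loss(embedding, class_weights, target, scale=30.0, margin=0.35):
    """Additive-margin softmax loss for a single training example.

    Each logit is scale * cos(theta_j), where theta_j is the angle between the
    embedding and the weight vector of speaker j. The margin is subtracted from
    the target speaker's cosine, so the embedding has to be closer in angle to
    its own class before the loss becomes small.
    """
    logits = []
    for j, weight in enumerate(class_weights):
        cos_theta = _cosine(embedding, weight)
        if j == target:
            cos_theta -= margin  # penalize the correct class only
        logits.append(scale * cos_theta)
    # Cross-entropy via a numerically stable log-sum-exp.
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_sum_exp - logits[target]


# Toy example: a 2-dimensional embedding and two speaker classes.
weights = [[1.0, 0.0], [0.0, 1.0]]
loss_without_margin = am_softmax_loss([1.0, 0.0], weights, target=0, scale=1.0, margin=0.0)
loss_with_margin = am_softmax_loss([1.0, 0.0], weights, target=0, scale=1.0, margin=0.35)
```

Because the loss acts on cosines between embeddings and class weights, training with it lines up with cosine scoring at test time, which is consistent with the abstract's claim that the embeddings work with simple cosine distance.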
