Patrick J. Tighe

Multi-Task Prediction of Clinical Outcomes in the Intensive Care Unit using Flexible Multimodal Transformers

Nov 09, 2021

Abstract: Recent deep learning research based on Transformer model architectures has demonstrated state-of-the-art performance across a variety of domains and tasks, mostly within the computer vision and natural language processing domains. While some recent studies have implemented Transformers for clinical tasks using electronic health record (EHR) data, they are limited in scope, flexibility, and comprehensiveness. In this study, we propose a flexible Transformer-based EHR embedding pipeline and predictive model framework that introduces several novel modifications of existing workflows that capitalize on data attributes unique to the healthcare domain. We showcase the feasibility of our flexible design in a case study in the intensive care unit, where our models accurately predict seven clinical outcomes pertaining to readmission and patient mortality over multiple future time horizons.

Posture Recognition in the Critical Care Settings using Wearable Devices

Oct 07, 2021

Abstract: Low physical activity levels in intensive care unit (ICU) patients have been linked to adverse clinical outcomes. Therefore, there is a need for continuous and objective measurement of physical activity in the ICU to quantify the association between physical activity and patient outcomes. This measurement would also help clinicians evaluate the efficacy of proposed rehabilitation and physical therapy regimens in improving physical activity. In this study, we examined the feasibility of posture recognition in an ICU population using data from wearable sensors.

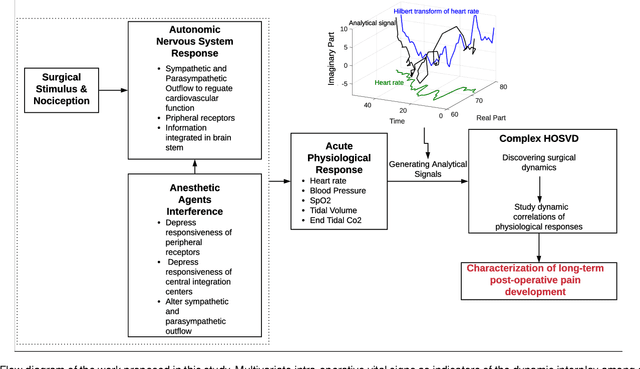

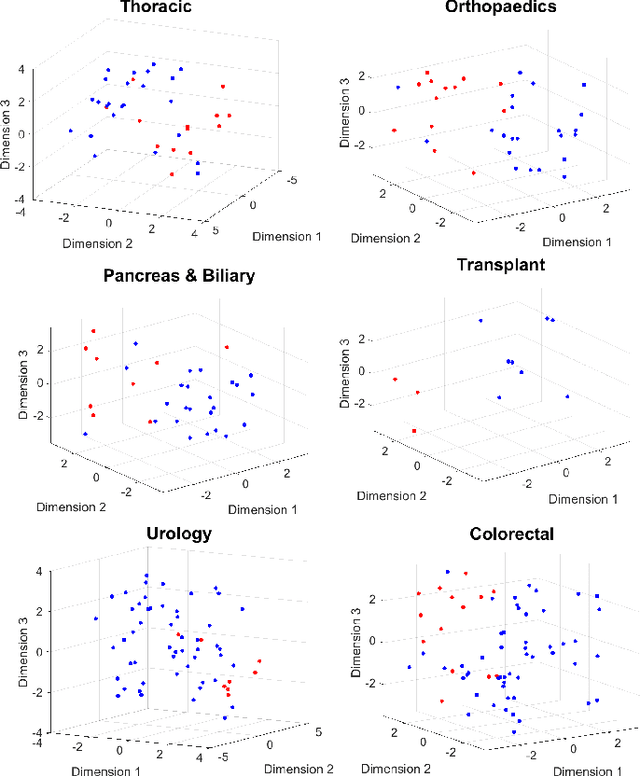

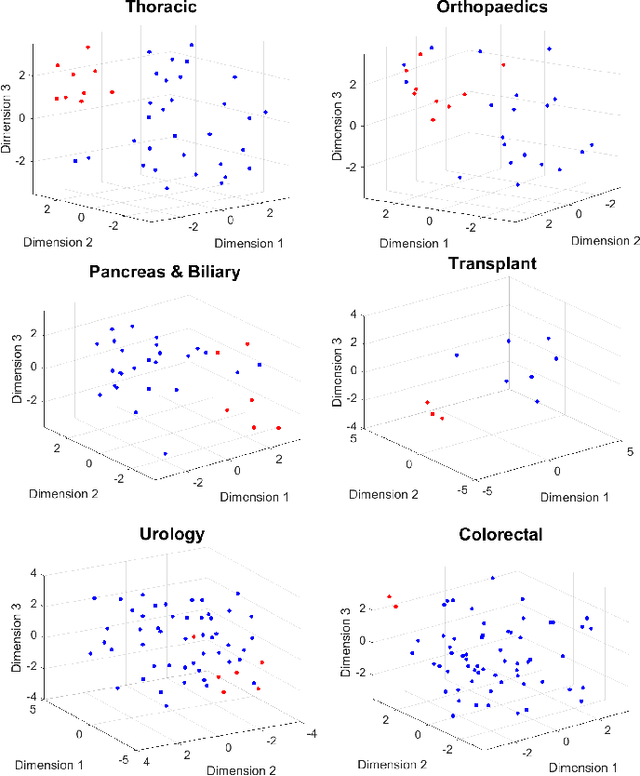

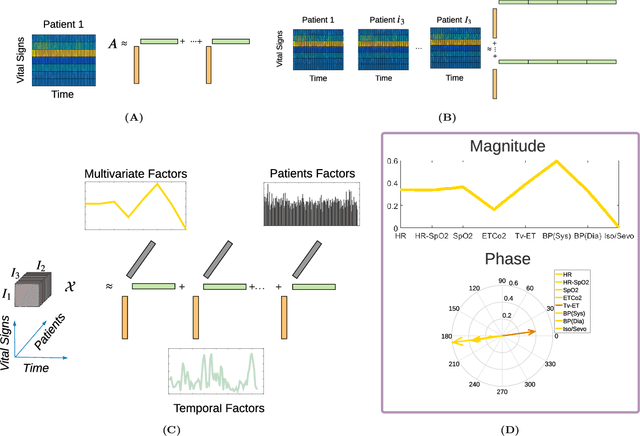

Analysis of Intra-Operative Physiological Responses Through Complex Higher-Order SVD for Long-Term Post-Operative Pain Prediction

Sep 02, 2021

Abstract: Long-term pain conditions after surgery and patients' responses to pain relief medications are not yet fully understood. While recent studies developed an index for the nociception level of patients under general anesthesia, based on multiple physiological parameters, it remains unclear whether and how the dynamics of these parameters indicate long-term post-operative pain (POP). To extract unbiased and interpretable descriptions of how physiological parameter dynamics change over time and across patients in response to surgical procedures, we employed a multivariate-temporal analysis. We demonstrate that the main features of intra-operative physiological responses can be used to predict long-term POP. We propose to use a complex higher-order SVD (complex-HOSVD) method to accurately decompose the patients' physiological responses into multivariate structures evolving in time. We used intra-operative vital signs of 175 patients from a mixed surgical cohort to extract three interconnected, low-dimensional complex-valued descriptions of patients' physiological responses: multivariate factors, reflecting sub-physiological parameters; temporal factors, reflecting common intra-surgery temporal dynamics; and patient factors, describing patient-to-patient changes in physiological responses. Adoption of complex-HOSVD allowed us to clarify the dynamic correlation structure included in intra-operative physiological responses. Instantaneous phases of the complex-valued physiological responses within the subspace of principal descriptors enabled us to discriminate between mild and severe levels of long-term POP. By abstracting patients into different surgical groups, we identified significant surgery-related principal descriptors, each of which potentially encodes a different surgical stimulation. The dynamics of patients' physiological responses to these surgical events are linked to long-term post-operative pain development.
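
As a rough illustration of the kind of decomposition this abstract describes, the following is a minimal sketch of a generic higher-order SVD applied to a complex-valued three-way tensor with NumPy. It is not the authors' implementation. The tensor layout (parameters x time x patients), the tensor sizes, the random synthetic data, and the helper names `unfold`, `mode_product`, `hosvd`, and `reconstruct` are all assumptions made for this example, and the abstract does not say how the complex-valued signals were obtained.

```python
import numpy as np

def unfold(tensor, mode):
    """Mode-n unfolding: bring `mode` to the front and flatten the other axes."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)

def mode_product(tensor, matrix, mode):
    """Multiply `tensor` by `matrix` (shape J x I_mode) along axis `mode`."""
    moved = np.moveaxis(tensor, mode, -1)   # (..., I_mode)
    result = moved @ matrix.T               # (..., J)
    return np.moveaxis(result, -1, mode)

def hosvd(tensor, ranks):
    """Truncated HOSVD: one orthonormal factor per mode plus a core tensor."""
    factors = []
    for mode, rank in enumerate(ranks):
        # Left singular vectors of each unfolding span that mode's subspace.
        u, _, _ = np.linalg.svd(unfold(tensor, mode), full_matrices=False)
        factors.append(u[:, :rank])
    core = tensor
    for mode, u in enumerate(factors):
        # Project onto each factor; the conjugate transpose matters for complex data.
        core = mode_product(core, u.conj().T, mode)
    return core, factors

def reconstruct(core, factors):
    """Map a core tensor back to the original space."""
    out = core
    for mode, u in enumerate(factors):
        out = mode_product(out, u, mode)
    return out

# Synthetic stand-in data (hypothetical sizes): 4 parameters, 20 time points, 6 patients.
rng = np.random.default_rng(0)
shape = (4, 20, 6)
X = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

# Full-rank decomposition reproduces the tensor; truncation gives a low-dimensional summary.
core, factors = hosvd(X, shape)
core_small, factors_small = hosvd(X, (2, 3, 2))

# Phases of the complex core entries, loosely analogous to the "instantaneous phases" above.
phases = np.angle(core_small)
```

In this sketch the three entries of `factors` play the roles of the multivariate, temporal, and patient factors named in the abstract; which ranks to keep and how to interpret the resulting phases are modeling choices the abstract does not specify.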

The Intelligent ICU Pilot Study: Using Artificial Intelligence Technology for Autonomous Patient Monitoring

Sep 26, 2018

Abstract: Currently, many critical care indices are repetitively assessed and recorded by overburdened nurses, e.g. physical function or facial pain expressions of nonverbal patients. In addition, much essential information on patients and their environment is not captured at all, or is captured in a non-granular manner, e.g. sleep disturbance factors such as bright light, loud background noise, or excessive visitations. In this pilot study, we examined the feasibility of using pervasive sensing technology and artificial intelligence for autonomous and granular monitoring of critically ill patients and their environment in the Intensive Care Unit (ICU). As an exemplar prevalent condition, we also characterized delirious and non-delirious patients and their environment. We used wearable sensors, light and sound sensors, and a high-resolution camera to collect data on patients and their environment. We analyzed the collected data using deep learning and statistical analysis. Our system performed face detection, face recognition, facial action unit detection, head pose detection, facial expression recognition, posture recognition, actigraphy analysis, sound pressure and light level detection, and visitation frequency detection. We were able to detect patients' faces (mean average precision (mAP)=0.94), recognize patients' faces (mAP=0.80), and recognize their postures (F1=0.94). We also found that all facial expressions, 11 activity features, visitation frequency during the day, visitation frequency during the night, light levels, and sound pressure levels during the night were significantly different between delirious and non-delirious patients (p-value<0.05). In summary, we showed that granular and autonomous monitoring of critically ill patients and their environment is feasible and can be used for characterizing critical care conditions and related environmental factors.
